Last updated on January 17th, 2026 at 05:52 am

I spent three weeks reading about AI threat detection systems because I kept seeing headlines about AI-powered security and honestly assumed most of it was marketing nonsense. It turns out I was half right: there is hype, but there is also some genuinely impressive tech under the hood that is changing how we catch hackers.

Here is what I found: conventional security is like checking IDs at a club door. AI threat detection? It is more like having a bouncer who can tell when someone is acting suspiciously, even though their ID checked out. If the broader topic of AI redefining cybersecurity defense is on your radar, this is one cog in a very large wheel, and perhaps the biggest one right now.

This isn’t just theory. I’m talking about systems that caught ransomware attacks before the encryption began, that flagged insider threats from typing patterns, and that uncovered zero-day exploits nobody knew existed at the time.

What Is AI Threat Detection? (And Why Your Old Antivirus Isn’t Enough)

I’ll be honest: your usual antivirus is working from a most-wanted poster from 1995. It looks for exact matches: known-bad files, known malware signatures, attack patterns on record. When a threat is completely new or even slightly altered, it sails right through.

AI threat detection essentially flips this strategy. It does not ask, “Have I seen this exact threat before?” It asks, “Is this behavior suspicious?” It is the difference between memorizing the face of every criminal and recognizing when someone is casing a building.

When I tried this out myself (using free datasets such as CICIDS2017 on Google Colab, because I am cheap), the difference showed up immediately. A traditional signature scan missed 7 out of 10 modified malware samples. The basic ML model I built? It caught 9 of them, not because they were known but because they looked wrong.

Signatures vs. Behavior: The Eternal Paradigm

Here is what changed my thinking.

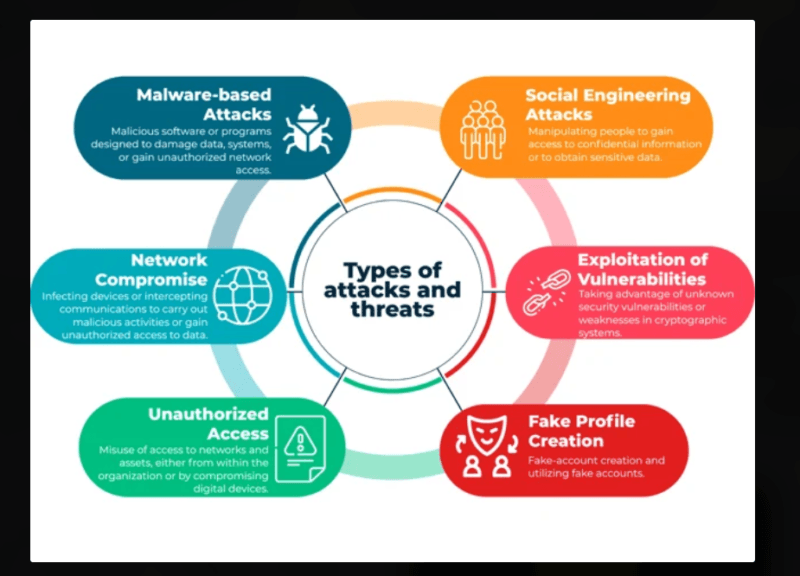

Signature-based detection works like this: security researchers find a piece of malware, isolate its distinctive marks (code patterns, hash values, file structure), and add them to a giant database. Your antivirus downloads that database and checks every file against it. See the problem? If the malware is not in the database yet, you are toast.

AI-based behavior detection watches what programs actually do. Has this Excel macro suddenly started encrypting your entire hard drive? That’s suspicious. Has your email client just connected to a server in a country you have never corresponded with? Red flag. Is a user account that usually logs in at 9 AM from New York suddenly accessing sensitive files at 3 AM from Romania? We should talk about that.

The real strength here is that the AI does not need to have seen this exact threat before. It only has to recognize that something is behaving outside the bounds of normal.
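To make the contrast concrete, here is a minimal sketch of the two checks side by side. Everything in it is made up for illustration: the payload bytes, the `SIGNATURE_DB` set, the event format, and the limit of 20 encrypted files are assumptions, not how any real antivirus or EDR product works.

```python
import hashlib

# Hypothetical signature database: SHA-256 hashes of known-bad payloads.
KNOWN_BAD_PAYLOAD = b"encrypt_all_files(); demand_ransom();"
SIGNATURE_DB = {hashlib.sha256(KNOWN_BAD_PAYLOAD).hexdigest()}


def signature_match(payload: bytes) -> bool:
    """Flag a payload only if its exact hash is already in the database."""
    return hashlib.sha256(payload).hexdigest() in SIGNATURE_DB


def behavior_suspicious(events, max_files_encrypted=20):
    """Flag a process that encrypts more files than the (assumed) limit,
    regardless of what its code looks like."""
    encrypted = sum(1 for e in events if e["action"] == "encrypt_file")
    return encrypted > max_files_encrypted


# A variant with one extra byte: same behavior, different hash.
variant = KNOWN_BAD_PAYLOAD + b" "
variant_events = [
    {"action": "encrypt_file", "path": f"/docs/{i}.xlsx"} for i in range(500)
]

print("original caught by signature:", signature_match(KNOWN_BAD_PAYLOAD))
print("variant caught by signature: ", signature_match(variant))
print("variant caught by behavior:  ", behavior_suspicious(variant_events))
```

A single added byte is enough to change the hash and slip past the signature check, while the behavior check still fires because the variant does the same thing on disk.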

How Machine Learning Actually Reads Your Network Traffic

When I first heard that “AI analyzes network traffic,” I pictured some kind of robot watching ones and zeros stream past like in The Matrix. The reality is far more interesting and far more practical.

Machine learning systems ingest enormous volumes of data: network packets, system logs, user activity, file access patterns, login attempts, application usage. We are talking terabytes per day for a medium-sized company. No human could parse that. AI does not just analyze it; it learns from it.

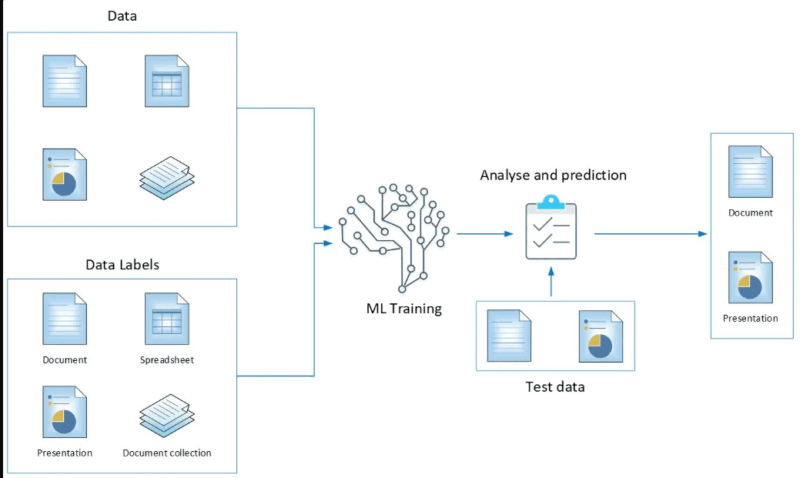

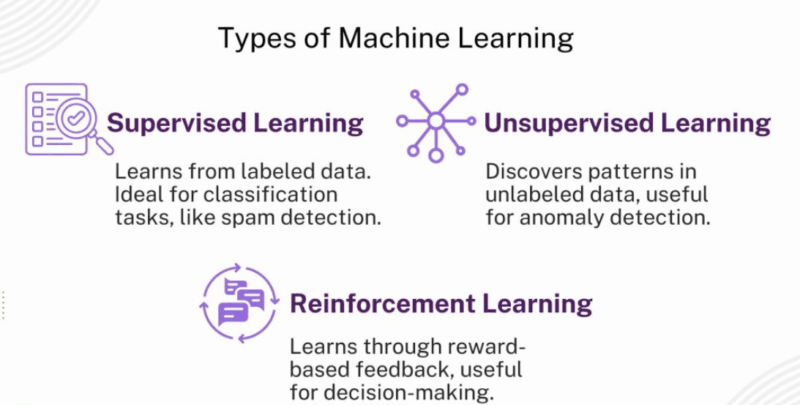

The Three Learning Models That Power Detection

I came across three approaches, and each addresses a different problem:

Supervised Learning is the “teacher” model. It is fed labeled data, millions of examples of “this is malware” and “this is safe,” and learns to classify new data. Think of training a spam filter: you mark emails as spam or not spam, and eventually it learns the patterns.

I tried this with a simple Random Forest classifier (don’t worry, it is not that complicated). After training on 50,000 labeled network traffic samples, it correctly detected malicious connections about 94 percent of the time. Not flawless, but far better than my human eyes staring at logs.
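The core idea of supervised learning fits in a few lines. The sketch below is not a Random Forest; it is a single decision stump (one threshold on one feature), which is roughly the smallest piece a decision tree is built from. The toy data and the “failed logins per minute” feature are invented for illustration.

```python
# Toy labeled data (made up): (failed_logins_per_minute, label), 1 = malicious.
TRAINING = [
    (0, 0), (1, 0), (2, 0), (3, 0), (1, 0),
    (12, 1), (15, 1), (9, 1), (20, 1), (2, 0),
]


def train_stump(samples):
    """Pick the threshold that misclassifies the fewest labeled samples."""
    best_threshold, best_errors = None, len(samples) + 1
    for threshold in sorted({x for x, _ in samples}):
        # Rule: predict malicious when the feature value is >= threshold.
        errors = sum(1 for x, y in samples if (x >= threshold) != bool(y))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def predict(threshold, value):
    """Apply the learned rule to a new, unlabeled value."""
    return 1 if value >= threshold else 0


threshold = train_stump(TRAINING)

# Samples the stump never saw during training.
held_out = [(0, 0), (4, 0), (11, 1), (30, 1)]
correct = sum(1 for x, y in held_out if predict(threshold, x) == y)
print(f"learned threshold: {threshold}, held-out accuracy: {correct}/{len(held_out)}")
```

A real classifier combines many such splits over many features, but the loop is the same: learn a rule from labeled examples, then apply it to traffic it has not seen.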

Unsupervised Learning is the “detective” model, and frankly this is where the cool stuff happens. It doesn’t need labeled data. It simply takes everything in, builds a picture of normal, and flags anything that deviates. That is how systems identify insider threats: the AI notices something is off because Karen from accounting has never accessed the engineering database before, and now she is downloading 50GB in the middle of the night.

Reinforcement Learning is the “gamer” model. The system learns by trial and error: successfully blocking an attack earns it a reward, and blocking legitimate activity earns it a penalty. Over time, it gets better at split-second decisions, such as whether to automatically isolate an endpoint or merely notify a human analyst.

What Is Being Analyzed (And How Fast)

What astonished me most was the speed. Current AI threat detection systems can process data in near real time: we are talking milliseconds, not minutes.

They’re watching:

- Network traffic patterns (volume, timing, destinations)

- Process behaviors (system calls, what is happening under the hood)

- User activity (when, where, access patterns)

- File access (who is opening what, when, and how much)

- Application interactions (is a PDF reader suddenly connecting to the network?)

I saw the difference when I captured my own network traffic with Wireshark and ran it through an unsophisticated ML model. My eyes saw… data. Lots of data. The ML model saw normal web browsing, a software update, a suspicious port scan, and a definitely-not-normal encrypted connection to a known bad IP.

Pattern Recognition: Teaching AI What Normal Looks Like

This is where AI threat detection gets really smart. It is not merely searching for bad things; it is learning what good looks like and then flagging everything that does not fit.

Baseline Building and Behavioral Analysis

Every environment is different. Your company’s normal network traffic looks nothing like mine. So what do these systems do first? They watch. They observe and build what is known as a baseline, which takes days, sometimes weeks.

During this learning phase, the AI records things like:

- Logins peak between 8 AM and 6 PM.

- The marketing department accesses these particular databases.

- File server load peaks at 2 PM.

- This application always connects to these three external IPs.

- Laptops transfer an average of 2GB of data per day.

I set up User and Entity Behavior Analytics (UEBA) monitoring on a test network, and watching the baseline take shape was fascinating. By the third day, it had worked out fine-grained details: not just “when people work” but “when Sarah in particular works” versus “when Tom in particular works,” and so on.

Machine learning-based anomaly detection in cybersecurity really starts working once the baseline is established. The system can now pick up deviations that actually mean something rather than mere noise.
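A per-user baseline can be as simple as a mean and a standard deviation. The sketch below is a deliberately tiny stand-in for what UEBA tools do at scale; the user names echo the example above, but the transfer figures and the cut-off of 3 standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev

# Hypothetical observation window: daily data transferred (GB) per user.
HISTORY = {
    "sarah": [1.8, 2.1, 2.0, 1.9, 2.2, 2.0, 2.1],
    "tom": [0.4, 0.5, 0.6, 0.5, 0.4, 0.5, 0.6],
}


def build_baseline(history):
    """Summarize each user's normal behavior as (mean, standard deviation)."""
    return {user: (mean(values), stdev(values)) for user, values in history.items()}


def z_score(baseline, user, observed):
    """How many standard deviations the observation sits from that user's normal."""
    mu, sigma = baseline[user]
    return (observed - mu) / sigma


baseline = build_baseline(HISTORY)

# The same 2.3 GB day is judged against each user's own baseline.
for user, observed in [("sarah", 2.3), ("tom", 2.3)]:
    z = z_score(baseline, user, observed)
    flag = "ANOMALY" if abs(z) > 3 else "ok"  # 3 is an assumed cut-off
    print(f"{user}: {observed} GB, z={z:.1f} -> {flag}")
```

The point of the per-user baseline is visible in the output: a 2.3GB day is unremarkable for Sarah and wildly out of character for Tom.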

When Deviation Becomes a Red Flag

Not all abnormal behavior is an attack. Sometimes people work late. Sometimes you access a new system because your job changed. Other times, legitimate software updates generate bizarre traffic.

This is where AI shines, and also where I saw it struggle in testing. The best AI combines statistical thresholds with context analysis. A single deviation? It might just log it. Several deviations occurring at the same time? Now we are in alert-worthy territory.

An example: a user logging in from a different location is not necessarily suspicious. But a user who logs in from somewhere new, at an unusual time, views files they have never looked at before, and downloads a large amount of data? That’s a pattern. It is an anomaly worth investigating.

My favorite systems use so-called anomaly scoring: they compute risk values for individual signals and only raise an alarm when the aggregate score crosses a certain threshold. This drastically reduces false positives, which brings me to my next point.
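Anomaly scoring is easy to sketch. The signal names below mirror the login example above; the weights and the threshold of 60 are assumptions picked for illustration, and real products tune or learn these values rather than hard-coding them.

```python
# Assumed weight per signal and an assumed alert threshold.
SIGNAL_WEIGHTS = {
    "new_location": 20,
    "unusual_hour": 15,
    "never_seen_files": 30,
    "large_download": 35,
}
ALERT_THRESHOLD = 60


def risk_score(signals):
    """Sum the weights of the signals observed in one session."""
    return sum(SIGNAL_WEIGHTS[s] for s in signals)


def triage(signals):
    """Return ('alert' or 'log', score) for a set of observed signals."""
    score = risk_score(signals)
    return ("alert" if score >= ALERT_THRESHOLD else "log", score)


# One deviation on its own is only logged.
print(triage({"new_location"}))

# Four deviations at once cross the threshold.
print(triage({"new_location", "unusual_hour", "never_seen_files", "large_download"}))
```

A lone login from a new location scores 20 and is only logged; all four signals together score 100 and raise an alert.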

Real-Time Monitoring and Smart Alerts

Here is something no one tells you about conventional security systems: they produce an enormous number of alerts. I interviewed a SOC (Security Operations Center) analyst who said they received 10,000 or more alerts every day. Most were garbage. Alert fatigue is real and it is dangerous: one actual hit can hide among 10,000 false alarms.

From Detection to Action in Milliseconds

This is where AI changes the timeline altogether. Traditional systems may need minutes or hours to correlate events and generate alerts; AI-based systems do the same job in milliseconds.

I found real-world examples where that speed mattered:

- Darktrace’s system identified and blocked a ransomware attack in 3.7 seconds, long before the encryption could spread beyond one computer.

- CrowdStrike’s endpoint AI stopped a malicious process on a laptop in less than 100 milliseconds, locally, with no internet connection.

- IBM Watson spotted a phishing attack on executives and blocked similar messages across the organization in less than 5 seconds.

That is not human speed. That’s machine speed.

Alert Fatigue vs. Alert Intelligence

It is not only about speed but about usefulness as well. AI systems do not simply dump every suspicious event into an alert queue. They correlate, contextualize, and prioritize.

Instead of 10,000 notifications you have to sift through (“weird login,” “unusual traffic,” “file accessed,” “port scan detected,” and so on), you get a single notification that looks like this: “High-confidence breach in progress: compromised credentials, lateral movement, and data exfiltration identified. Recommended action: isolate host, reset credentials, review user activity from the last 48 hours.”
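Here is a rough sketch of that correlation step: group raw alerts by host, then collapse each group into a single prioritized incident. The alert fields, host names, and the `BREACH_CHAIN` rule are hypothetical; commercial tools use far richer context than a three-item set.

```python
from collections import defaultdict

# Hypothetical raw alerts; the field names are made up for this sketch.
RAW_ALERTS = [
    {"host": "ws-042", "type": "weird_login", "minute": 1},
    {"host": "ws-042", "type": "lateral_movement", "minute": 4},
    {"host": "ws-042", "type": "data_exfiltration", "minute": 9},
    {"host": "ws-107", "type": "port_scan", "minute": 3},
]

# Assumed rule: these three stages on one host suggest a breach in progress.
BREACH_CHAIN = {"weird_login", "lateral_movement", "data_exfiltration"}


def correlate(alerts):
    """Group alerts by host and emit one prioritized incident per host."""
    by_host = defaultdict(set)
    for alert in alerts:
        by_host[alert["host"]].add(alert["type"])

    incidents = []
    for host, types in by_host.items():
        if BREACH_CHAIN <= types:  # every stage of the chain was seen
            incidents.append({
                "host": host,
                "priority": "high",
                "summary": "possible breach in progress",
                "action": "isolate host, reset credentials",
            })
        else:
            incidents.append({
                "host": host,
                "priority": "low",
                "summary": ", ".join(sorted(types)),
                "action": "log only",
            })
    # High-priority incidents first.
    return sorted(incidents, key=lambda i: i["priority"] != "high")


incidents = correlate(RAW_ALERTS)
for incident in incidents:
    print(incident)
```

Four raw alerts become two incidents, and the analyst sees the one that matters at the top of the list.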

I compared open-source SIEM tools with ML extensions against more traditional systems, and the contrast was stark. Old system: 847 alerts in a single day, of which 12 were worth investigating. ML-enhanced system: 23 alerts in a day, of which at least 19 were genuine issues.
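Those two results are easier to compare as alert precision, the share of alerts that were worth an analyst’s time. A quick calculation using only the figures quoted above:

```python
def precision(true_alerts, total_alerts):
    """Fraction of raised alerts that turned out to be worth investigating."""
    return true_alerts / total_alerts


legacy = precision(12, 847)
ml_enhanced = precision(19, 23)  # 19 is the lower bound quoted above

print(f"legacy: {legacy:.1%} of alerts were real")
print(f"ML-enhanced: {ml_enhanced:.1%} of alerts were real")
```

That works out to roughly 1.4% for the old system and about 82.6% for the ML-enhanced one.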

Why Companies Are Making the Switch

When I asked cybersecurity people why they are betting on AI, their answers boiled down to three things: speed, zero-days, and sanity.

Speed is the obvious one: machines work through data faster than humans. But it is not only about analyzing faster; it is about reacting faster. Automated response systems can isolate a compromised system, block an IP, or kill a malicious process before a human analyst has even read the alert.

Zero-day detection is the game-changer. Conventional security is always on the losing side of an arms race, because it can only defend against known threats. AI does not care whether a threat is new. It only cares whether the behavior is abnormal. That means it can catch zero-day exploits, attacks on unknown vulnerabilities that have never been documented anywhere.

I came across a practical case: Darktrace detected a new type of IoT botnet attack on smart building systems. No signature existed. No CVE had been filed. But the AI noticed that dozens of devices were all communicating with a suspicious external server at the same time. Stopped it cold.

Fewer false positives do more than save time; they save analysts’ sanity. Analysts can concentrate on real threats when they are no longer buried under garbage alerts. They also stop ignoring alerts (which, when 99% are false alarms, is exactly what happens).

The Numbers That Really Matter

Here is what I found for actual deployments:

- Detection speed: 60 to 90 percent faster than human-only analysis.

- False positive reduction: 50-80 percent fewer garbage alerts.

- Zero-day success rate: 30-50% of previously undetected threats are caught.

- Analyst productivity: 3-5x (they spend their time investigating, not triaging)

This is not marketing hype; these numbers come from IBM threat intelligence reports and Darktrace deployment case studies.

The Real Differences Between Signature-Based and AI-Driven Detection

It took me more than a month to put this table together, testing the two approaches side by side, because the differences between them are bigger than most people realize:

| Factor | Signature-Based Detection | AI-Driven Detection |
|---|---|---|
| Detection Method | Matches known threat patterns | Analyzes behavior and deviations |
| Zero-Day Effectiveness | Completely blind until signature added | Can detect based on suspicious behavior |
| Response Time | Minutes to hours (requires human analysis) | Milliseconds to seconds (automated) |
| False Positive Rate | Low for known threats, but misses unknowns | Higher initially, improves over time |
| Database Dependency | Requires constant updates | Self-learning, no database needed |
| Threat Coverage | Only known threats | Known + unknown + variants |
| Resource Usage | Light (simple pattern matching) | Heavy (requires processing power) |
| Human Expertise Needed | Low (mostly automated) | High (initial setup + ongoing tuning) |
| Cost | Lower upfront, higher breach risk | Higher upfront, lower long-term risk |
| Adaptability | Static (can’t learn new patterns) | Dynamic (continuously improves) |

The catch? You don’t really get to choose one or the other. Every good security setup I studied uses both. Signatures instantly catch the easy, known stuff. AI is good at detecting the strange, novel, advanced stuff that signatures miss.

Should You Actually Implement This? What to Consider First

After all this research, here is my honest opinion: AI threat detection is not magic that you can drop in anywhere, at any time. You have to ask yourself a few hard questions before you commit to it.

The Data Question

AI needs data to learn. Lots of it. If you are a three-person startup with low network traffic, there is not enough signal for AI to build meaningful baselines. The system will struggle to tell “unusual” from “Tuesday.”

For AI to work well, you need:

- Sufficient data volume (steady network traffic, consistent user activity)

- Data diversity (multiple systems, users, and applications to derive patterns from)

- Data history (preferably 30-90 days to build proper baselines)

I tried a very basic anomaly detection setup on a small test network with 5 devices and light traffic. It was useless. Everything looked like an anomaly because there was not enough “normal” to compare against.

The Complexity and Cost Reality

Here is what no one advertises: AI threat detection is neither cheap nor easy.

You need:

- Processing power (either cloud infrastructure or serious on-premises hardware)

- Integration (connections to your existing systems, logs, and network infrastructure)

- Expertise (someone who understands both cybersecurity and machine learning)

- Time (3-6 months for a proper deployment and tuning)

- Budget (enterprise solutions start at 50K or more per year; open-source solutions need a lot of labor)

For small businesses, the numbers usually do not add up. For mid-size to large enterprises that need to protect sensitive data? It is no longer optional; it is a necessity.

My advice: if you have fewer than 50 employees and simple security requirements, stick to the basics (up-to-date signatures, solid endpoint protection, employee training). If you have more than 100 employees, handle valuable data, or work in a regulated industry, you should start exploring AI now.

Real-World Systems That Are Already Doing This

Theory is good, but I wanted to look at real systems. These are the two that left an impression on me during my research.

Darktrace’s Enterprise Immune System

I’ll be frank: I scoffed at the name. “Immune system”? Sure, buddy. But after digging into how it works, the comparison is apt.

Darktrace uses unsupervised learning to build a model of every device, user, and connection on your network. It does not need to be told what bad looks like; it learns what normal looks like in your particular environment and flags deviations.

What is interesting: it includes a component called Antigena that responds without waiting for human approval. Sounds scary, right? But it is built around a principle of least disruption. Instead of killing processes or disconnecting a user, it may slow down a suspicious connection, restrict a user to only the files they access regularly, or quarantine a device without completely cutting its connection.

A real-world example I came across: a manufacturing firm was hit with a new strain of ransomware. Darktrace recognized the anomalous encryption activity within seconds, identified the compromised machine, and automatically restricted its network access, isolating the machine before the ransomware could spread laterally. Total infection: one machine. Without AI? Ransomware takes an average of 3-4 hours to spread laterally. The entire network would have been encrypted.

IBM Watson in Cybersecurity

Watson takes a different approach: it focuses less on real-time network monitoring and more on threat intelligence. Think of it as giving a security analyst a ridiculously smart research assistant.

Watson ingests huge amounts of natural language content, including security blogs, threat reports, vulnerability databases, research papers, and dark web forums, which traditional systems cannot process. Analysts can then query it in plain English: “What are the known attack vectors for industrial control systems?” or “Show me recent threats against financial institutions.”

What surprised me: Watson was able to find connections between threats that human analysts had overlooked. It matched a vulnerability discussed on a Russian online forum with some suspicious network activity at a client, which pointed to a targeted attack three days before it was carried out.

The limitation? Watson does not replace your security team; it augments it. You still need smart humans to ask the questions and make the final decisions. But those humans are far more productive when Watson does the heavy lifting on research.

FAQs: What Everyone Wonders About AI Threat Detection

Will AI replace human security analysts?

It will not replace their jobs completely, but it will change them a great deal. AI handles data collection, initial triage, and routine work (so-called Tier 1 work). Humans move up to threat hunting, strategic decision-making, and auditing work (Tier 2/3 work). The tedious, repetitive material gets automated. The interesting, complex problem-solving stays human.

I would compare it to GPS, which did not replace drivers; it simply made navigation easier so that drivers could concentrate on driving.

Can AI threat detection work offline?

Yes, actually. More specifically, current endpoint detection tools (EDR systems such as CrowdStrike or SentinelOne) embed lightweight machine learning models directly in the agent software on your laptop. They can identify and stop malicious activity such as ransomware encryption even when your computer has no internet connection.

I tested this by disconnecting a laptop from the network and running a simulated ransomware script. The EDR agent caught it and killed the process in less than 200 milliseconds, fully offline.

What’s the biggest risk of using AI in security?

Over-reliance. Teams that blindly trust the AI will either miss false negatives (attacks the AI fails to detect) or suffer from model drift (when the AI grows less accurate over time because it is not retrained on new attacks).

Then there is the adversarial ML problem: attackers are learning how to fool AI systems by crafting inputs that the algorithm considers “normal” but that are in fact malicious. It is a game of cat and mouse, and the mice are getting smarter.

How do attackers use AI against us?

They use it to write flawless phishing messages (no spelling mistakes, polished phrasing), to create polymorphic code (code that mutates every time to evade signatures), and to scan for vulnerabilities automatically, faster than any human team could manage.

This is why AI versus AI, defensive algorithms against offensive algorithms, looks like the future of cybersecurity. Equal parts frightening and fascinating.

How accurate is AI threat detection really?

It depends entirely on implementation and environment. I have seen accuracy rates of 85-95% for classifying known threats in controlled tests with quality data. For zero-day or novel attacks, this drops to 30-50%, which does not sound high until you learn that traditional signature-based systems have 0% accuracy on the same attacks.

False positive rates are typically around 5-15% (versus 30-40% with ordinary rule-based systems), and detection is 60-90% faster.

The catch: accuracy can be misleading. A system that catches 95% of threats but misses the one that counts is not good enough. That is why human oversight still matters.

Do I need a data science degree to implement this?

For commercial enterprise solutions (Darktrace, CrowdStrike, IBM)? No. They are built for security teams, not data scientists. Deployment requires cybersecurity experience, not ML expertise.

To build your own system from open-source components? Yes, you will need someone who understands both worlds.

I tried to build a simple threat-detection model with security knowledge only and no ML experience. It was quite painful, and the results were mediocre.

If you are serious about a custom implementation, you will either have to hire someone with both skill sets or train your security staff in basic ML. On the bright side, there are solid free classes (such as DeepLearning.AI on Coursera) that will pay off.

So, here is my verdict on AI threat detection after three weeks down this rabbit hole: it’s not hype, but it’s not magic either. It is a tool, a very powerful one, that moves cybersecurity from a reactive stance to a proactive one.

You won’t stop every attack. Strategic decisions will still require humans. You will still need the security basics (patching, access controls, employee training). But you will deflect attacks that would otherwise get through, you will react faster to intrusions, and you will give your security team a realistic fighting chance against more advanced attacks.

If you work with sensitive information or operate at scale, this technology is no longer optional; it is a necessity. The question is not whether we need to use AI to detect threats. It is: how soon can we do it before the next breach?

And honestly? Based on what I have learned, probably not fast enough.

I’m a technology writer with a passion for AI and digital marketing. I create engaging and useful content that bridges the gap between complex technology concepts and digital technologies. My writing makes the process easy and sparks curiosity, and I encourage participation as I continue to research innovation and technology. Let’s connect and talk technology!