Okay, I will be honest: when I first heard about zero-day vulnerabilities, my first thought was, how do you defend against something you are not aware of at all? It felt like trying to take a ghost prisoner. Antivirus software, firewalls, all the security tools we rely on are designed to detect known attacks. But zero-days? They are the most unpredictable threats of all.

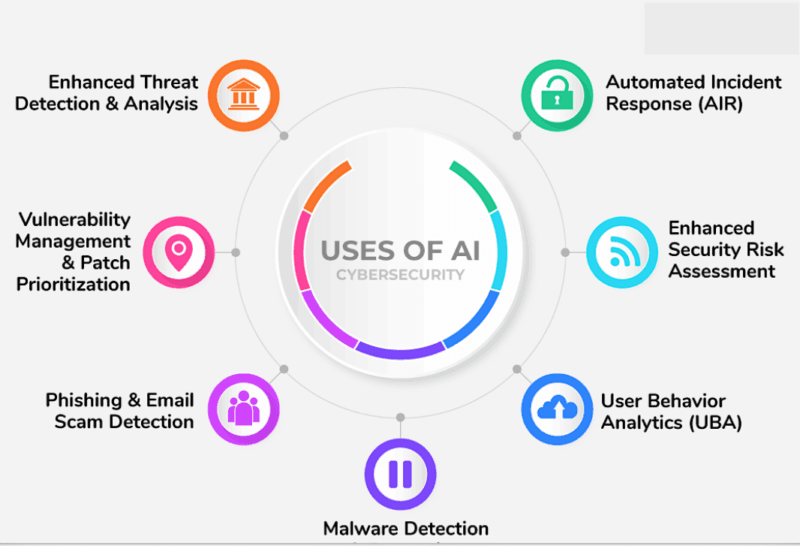

Then I began researching how AI and machine learning are changing this game entirely. It turns out we are not as powerless as I thought. This is not magic; it is about teaching machines to identify suspicious behavior even when they have never seen that particular attack before.

If you have ever wondered how organizations manage to detect threats that have no signature, no history of prior occurrence, and no patch, pull up a seat. I have been researching this for a week and a half, putting ideas into practice, and frankly? Some of this is truly impressive.

What Are Zero-Day Exploits and Why Traditional Tools Fail

The Zero-Day Problem

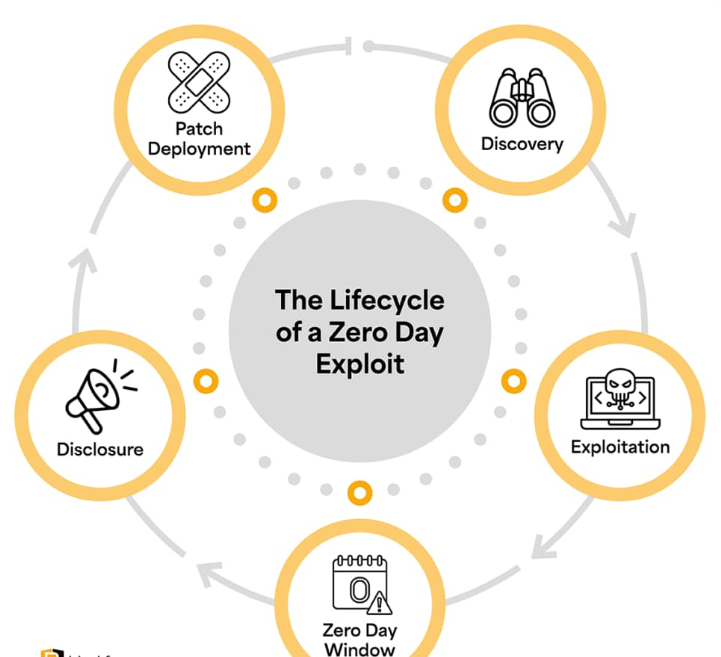

A zero-day is essentially a vulnerability that is not known to anyone who could fix it: not the developers of the software, not the security vendors, no one. The phrase "zero-day" means that vendors have had zero days to correct it. Attackers discover these holes first and exploit them before anyone can react.

This is what makes them so dangerous: there is no patch, no signature, and no warning. Organizations are completely blind when they are attacked. From the statistics I looked at, organizations relying on conventional means typically need days to weeks to realize that a zero-day attack has happened. By that point the damage is done: data has been stolen, systems are compromised, and attackers have had plenty of time to establish persistent access.

The scary part? The time lag between a zero-day being identified and being weaponized keeps shrinking, in some cases down to minutes. That’s not a typo. Minutes. Classical security methods just cannot keep up with that pace.

Why Signature-Based Security Falls Short

Most conventional security solutions operate on a very basic concept: they keep a library of signatures of known threats, which are like digital fingerprints of malware files and attacks. When something matches a fingerprint, the system blocks it. Straightforward, right?

The problem becomes painfully clear when you think about it. When the attack is novel, there is nothing to compare it against. Your antivirus software is looking for a fingerprint that does not exist yet. It is like trying to apprehend a person without knowing what he looks like, where he is, or where the crime is about to take place.
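To make the fingerprint idea concrete, here is a minimal sketch of signature matching. It assumes signatures are plain SHA-256 hashes of whole payloads, which is a simplification (real products also use byte patterns and rule languages), and the sample payloads and the `is_known_threat` helper are invented for illustration.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of known-malicious payloads.
KNOWN_BAD_PAYLOADS = [b"known-malware-sample-A", b"known-malware-sample-B"]
SIGNATURES = {hashlib.sha256(p).hexdigest() for p in KNOWN_BAD_PAYLOADS}


def is_known_threat(payload: bytes) -> bool:
    """Return True only if the payload's hash matches a stored signature."""
    return hashlib.sha256(payload).hexdigest() in SIGNATURES


# A catalogued sample is caught; a never-before-seen payload passes unnoticed.
print(is_known_threat(b"known-malware-sample-A"))  # True
print(is_known_threat(b"novel-zero-day-payload"))  # False
```

The second call is the whole problem in one line: nothing in the lookup can say anything useful about a payload that was never catalogued.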

I checked this idea against findings from security researchers, and the figures are grim. Well-known traditional vulnerability scanners generate thousands of false positives, flooding security staff with noise while real zero-day attacks are missed. The signature-based approach is not merely weak against zero-days; it is simply not built for the challenge.

Machine Learning Approaches to Identifying Unknown Threats

Behavioral Anomaly Detection

This is where things get interesting. Rather than searching for something that is already known to be bad, modern AI systems learn what normal looks like for your network, applications, and users. Then they watch for any deviation.

Think about how well you know your neighborhood. You may not know every neighbor, but you would certainly notice somebody trying car door handles in the middle of the night or scrambling over a neighbor’s fence. That is behavioral detection in a nutshell.

The approach works because a zero-day exploit cannot sit idle: it must run code, fetch files, call command servers, or move laterally across a network. Even when the vulnerability itself has not been identified, the pattern of exploitation usually stands out from legitimate activity.

Systems establish baselines of normal operations and flag deviations from them as possible threats.
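A baseline can be as simple as a mean and a standard deviation. The sketch below is my own toy illustration, not how any particular product works: it assumes a single numeric metric (outbound connections per minute) and a z-score threshold of 3, and both the metric and the threshold are assumptions chosen for the example.

```python
from statistics import mean, stdev


def build_baseline(samples: list[float]) -> tuple[float, float]:
    """Summarize normal behavior as (mean, standard deviation)."""
    return mean(samples), stdev(samples)


def is_anomalous(value: float, baseline: tuple[float, float],
                 threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical metric: outbound connections per minute from one host.
normal_traffic = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
baseline = build_baseline(normal_traffic)

print(is_anomalous(14, baseline))  # False
print(is_anomalous(90, baseline))  # True
```

Production systems model many signals at once and learn far richer baselines, but the shape of the decision is the same: learn normal, then measure distance from it.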

What impressed me most? Behavior-based approaches can reduce false positives by up to 60 percent without compromising coverage. That is an enormous improvement over the old tools that bury you in irrelevant notifications.

The Models That Work

I reviewed academic studies and industry implementations to understand what machine learning models can actually deliver. This is what is currently working in production settings:

Random Forest classifiers combined with autoencoders are reaching remarkable accuracy scores. The reported accuracy of these systems on unseen data is 99.9892 percent, and that is no typo. Random Forest models are attractive because they are also interpretable; that is, security teams can see why the system classified something as suspicious.

Generative Adversarial Networks (GANs) do things differently. Detectors based on GAN discriminators consistently catch over 98% of zero-day attacks. These systems pit two neural networks against each other, one generating possible threats and the other learning to identify them. It is like training through sparring.

Ensemble techniques combine several machine learning models so that each covers the blind spots of the others. Ensemble-based network anomaly detection systems have been shown to reach 93.7% accuracy, compared with 77.7% to 90.0% for the individual models. The logic is simple: when several independent mechanisms agree that something is suspicious, it most likely is.
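The voting logic behind an ensemble fits in a few lines. This is a sketch with three deliberately naive rule-based detectors standing in for trained models; the detector names, event fields, and thresholds are all hypothetical.

```python
from typing import Callable

Event = dict[str, float]
Detector = Callable[[Event], bool]


# Three hypothetical, deliberately simple detectors, each with its own blind spot.
def high_volume(event: Event) -> bool:
    return event["bytes_out"] > 1_000_000


def rare_destination(event: Event) -> bool:
    return event["dest_seen_before"] == 0


def odd_hour(event: Event) -> bool:
    return event["hour"] < 6


def ensemble_flags(event: Event, detectors: list[Detector],
                   min_votes: int = 2) -> bool:
    """Flag the event when at least `min_votes` detectors agree."""
    votes = sum(1 for detect in detectors if detect(event))
    return votes >= min_votes


DETECTORS = [high_volume, rare_destination, odd_hour]
suspicious = {"bytes_out": 5_000_000, "dest_seen_before": 0, "hour": 14}
benign = {"bytes_out": 2_000, "dest_seen_before": 1, "hour": 3}

print(ensemble_flags(suspicious, DETECTORS))  # True  (two of three agree)
print(ensemble_flags(benign, DETECTORS))      # False (only one vote)
```

Requiring agreement is what trims false positives: the late-night but otherwise ordinary event gets one vote and stays quiet.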

The real-world performance? In 8.2 seconds, AI-based analysis in OPSWAT MetaDefender Sandbox detected 90% of zero-day malware. That is less time than it takes to brew a cup of coffee.

Historical Attack Pattern Analysis for Threat Prediction

Lessons from Past Exploits

One of the best ideas I have seen in this area is using historical attack data to predict the future. By studying thousands of historical exploits, AI can identify the patterns, methods, and signs of a zero-day attack.

It is not fortune-telling; it is pattern recognition on a massive scale. Even with new vulnerabilities, attackers tend to reuse approaches that work. The exploitation techniques, network behavior, and post-compromise operations usually follow familiar patterns even though the vulnerability itself is new.

By studying the history of zero-day exploitation, machine learning models build a probabilistic picture of the attacks that could occur in the future. They learn that certain sequences of events, such as unusual privilege escalation followed by lateral movement, are strongly associated with exploitation attempts regardless of which vulnerability is exploited.
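Here is a small sketch of what "escalation followed by lateral movement" looks like as a rule. A real model would learn such sequences from data rather than have them hard-coded; the `SecurityEvent` type, the event names, and the five-minute window are assumptions I picked for the example.

```python
from dataclasses import dataclass


@dataclass
class SecurityEvent:
    host: str
    kind: str         # e.g. "privilege_escalation", "lateral_movement"
    timestamp: float  # seconds since some reference point


def escalation_then_movement(events: list[SecurityEvent],
                             window: float = 300.0) -> set[str]:
    """Return hosts where lateral movement follows privilege escalation
    within `window` seconds."""
    last_escalation: dict[str, float] = {}
    flagged: set[str] = set()
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.kind == "privilege_escalation":
            last_escalation[event.host] = event.timestamp
        elif event.kind == "lateral_movement":
            started = last_escalation.get(event.host)
            if started is not None and event.timestamp - started <= window:
                flagged.add(event.host)
    return flagged


events = [
    SecurityEvent("web-01", "privilege_escalation", 100.0),
    SecurityEvent("web-01", "lateral_movement", 160.0),
    SecurityEvent("db-02", "lateral_movement", 200.0),   # no prior escalation
    SecurityEvent("app-03", "privilege_escalation", 50.0),
    SecurityEvent("app-03", "lateral_movement", 900.0),  # outside the window
]
print(escalation_then_movement(events))  # {'web-01'}
```

Notice that the rule never mentions a vulnerability. It keys on what the attacker does afterwards, which is why it can still fire when the entry point is brand new.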

Predictive Analytics in Practice

The shift from reactive to proactive security is probably the most significant change I have noticed. AI systems that examine historical attack data can predict possible threats and take security actions before the attacks happen.

Here is an example: if historical data indicates that attackers scan certain network services and then follow up with zero-day exploits, predictive systems can flag unusual scanning behavior as a possible precursor to an attack. The system is not detecting the zero-day itself; it is identifying the reconnaissance stage before exploitation actually occurs.
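Spotting the reconnaissance stage can be sketched as counting how many distinct ports one source touches in a short window. The log format, the 60-second window, and the 10-port limit below are illustrative assumptions, not tuned values.

```python
from collections import defaultdict


def find_scanners(connections: list[tuple[float, str, int]],
                  window: float = 60.0, max_ports: int = 10) -> set[str]:
    """Return source IPs that touch more than `max_ports` distinct ports
    within any `window`-second span."""
    by_source: dict[str, list[tuple[float, int]]] = defaultdict(list)
    for timestamp, source, port in connections:
        by_source[source].append((timestamp, port))

    scanners: set[str] = set()
    for source, attempts in by_source.items():
        attempts.sort()
        start = 0
        for end in range(len(attempts)):
            # Advance the left edge until the span fits inside the window.
            while attempts[end][0] - attempts[start][0] > window:
                start += 1
            distinct_ports = {port for _, port in attempts[start:end + 1]}
            if len(distinct_ports) > max_ports:
                scanners.add(source)
                break
    return scanners


# Hypothetical log: one host probes 20 ports in 20 seconds,
# another makes repeated ordinary HTTPS requests.
log = [(float(i), "203.0.113.7", 1000 + i) for i in range(20)]
log += [(float(i * 5), "198.51.100.4", 443) for i in range(20)]
print(find_scanners(log))  # {'203.0.113.7'}
```

A flag like this says nothing about which exploit might follow. It only buys time, which is the point of predicting rather than reacting.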

By combining historical threat intelligence with real-time analytics, AI-based systems are moving cybersecurity toward a preventive mode rather than a reactive one. Rather than detecting an intrusion after a breach, companies can spot the preparation and strengthen defenses before the attack.

To learn more about how predictive analytics can be applied to vulnerability management, see Predictive Vulnerability Analysis.

Behavioral Indicators of Zero-Day Exploitation Attempts

What Suspicious Activity Looks Like

Having studied detection case studies, I have found that even zero-day exploits, otherwise invisible, leave breadcrumbs in the form of behavioral patterns. These are the indicators that modern AI systems monitor:

Abnormal process execution: Applications that spawn child processes or execute code in unusual ways. A browser or PDF reader that launches PowerShell? That’s suspicious.

Abnormal network communication: An application communicates with external servers it has never contacted before.

Privilege escalation patterns: A normal user account suddenly makes administrative requests or asks for authorization to perform operations it would not normally need.

Lateral movement: Systems accessing resources they do not normally interact with (e.g. connecting to multiple machines in quick succession).

Data exfiltration signs: Massive data transfers to atypical destinations, compressed archives created at odd hours, or unusual database queries that pull far more data than normal processes do.
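The first indicator in that list, unusual process spawning, is the easiest one to sketch. The allowlist below is hypothetical and hand-written; a behavioral system would learn these parent-child relationships from observed activity instead.

```python
# Hypothetical allowlist of child processes each parent is expected to spawn.
EXPECTED_CHILDREN = {
    "acrord32.exe": {"acrord32.exe"},
    "chrome.exe": {"chrome.exe"},
    "explorer.exe": {"chrome.exe", "acrord32.exe", "powershell.exe"},
}


def is_unusual_spawn(parent: str, child: str) -> bool:
    """Flag a child process that a known parent does not normally launch."""
    expected = EXPECTED_CHILDREN.get(parent.lower())
    if expected is None:
        return False  # no baseline for this parent, so this rule stays silent
    return child.lower() not in expected


print(is_unusual_spawn("AcroRd32.exe", "powershell.exe"))  # True
print(is_unusual_spawn("explorer.exe", "powershell.exe"))  # False
```

A user opening PowerShell from the desktop is ordinary; a PDF reader doing the same thing is the breadcrumb.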

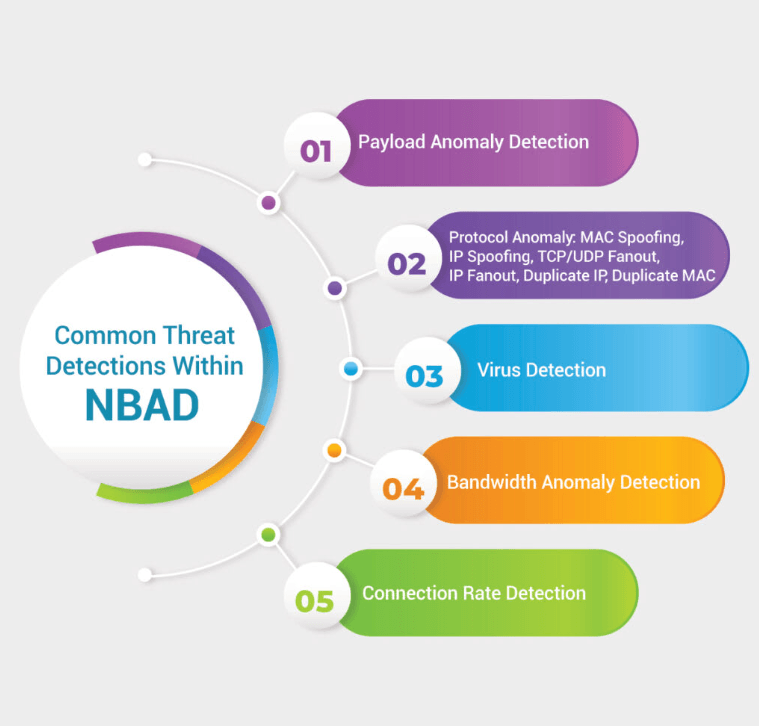

Real-Time Signal Detection

AI is especially strong on detection speed. Conventional solutions take days to weeks to detect threats, whereas modern AI-based solutions can identify threats within hours, and newer technologies can respond within milliseconds in automated mode.

Several detection layers operate at the same time. Endpoint Detection and Response (EDR) software monitors individual devices for suspicious activity. User and Entity Behavior Analytics (UEBA) monitors how users and systems behave. Network traffic analysis watches for communication anomalies. Sandboxing isolates suspicious files for analysis before they reach production systems.

The main point: when organizations use defense-in-depth strategies, a zero-day exploit has to evade several detection systems at the same time. If one detection method misses the threat, the others can still catch it.
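A quick back-of-the-envelope calculation shows why layering helps. The sketch assumes each layer misses independently of the others, which real layers rarely do perfectly, and the per-layer detection rates are made-up numbers for illustration, not measurements.

```python
from math import prod


def evasion_probability(detection_rates: list[float]) -> float:
    """Probability an attack evades every layer, assuming layers miss independently."""
    return prod(1.0 - rate for rate in detection_rates)


# Hypothetical per-layer detection rates for EDR, UEBA, network analysis, sandboxing.
layers = [0.70, 0.60, 0.50, 0.80]
print(f"{evasion_probability(layers):.3f}")  # 0.012
```

None of these imaginary layers is impressive on its own, yet under the independence assumption only about 1 in 80 attacks would slip past all four. Correlated blind spots make the real number worse, which is a good argument for picking layers that look at different signals.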

Read more about how AI is applied to real-time detection in AI Threat Detection Explained.

Comparison with Known Vulnerability Detection

Known vs. Unknown: The Key Differences

I believe the difference between identifying known and unknown vulnerabilities should be clarified, since the two demand entirely different strategies.

Identifying known vulnerabilities is straightforward: patches exist, security signatures are available, and vulnerability scanners know precisely what to look for. You are really checking whether your systems appear on a list of known problems. The detection result is definite: the vulnerability either exists or it does not.

Zero-day detection works under uncertainty. There are no patches, signatures are of no use, and you are dealing with probabilities rather than certainties. Detection relies on spotting behavioral anomalies that may be a sign of exploitation, rather than on comparison against known patterns.

The practical implications are enormous. With known vulnerabilities, the problem is predominantly organizational: you need to patch systems in time, keep an inventory of assets, and manage updates. With zero-days, the difficulty is technical: building a detection system sophisticated enough to catch threats never encountered before.

Why Zero-Days Require Different Approaches

Here is what I have learned about why the strategy must be different: zero-days cannot be prevented, so the focus shifts from prevention to detection and swift response.

With known weaknesses, you can guard against exploitation by patching. With zero-days, you cannot prevent exploitation because you are unaware of the vulnerability. Your whole strategy shifts to three priorities:

Detection speed: How quickly you can detect the exploit once it starts.

Blast radius limitation: Architectural controls such as network segmentation and least-privilege access limit how far attackers can reach even if they exploit a zero-day.

Response velocity: Automating containment and remediation to reduce the damage done before a human analyst can even begin investigating.

Conventional security is usually very prevention-oriented. Zero-day defense accepts that prevention will sometimes fail and is designed to minimize the consequences by detecting and responding quickly.

Case Studies: Zero-Day Detection in Practice

DARPA AI Cyber Challenge Results

Among the most interesting things I studied was the DARPA AI Cyber Challenge (AIxCC), which concluded in 2025. The competition pushed autonomous AI systems to detect and fix vulnerabilities without human involvement, in effect testing them against zero-days.

The findings were truly striking. Competing teams built fully autonomous AI engines that can locate and patch vulnerabilities on their own.

Here is what these systems achieved: the autonomous systems identified 77% of the 70 synthetic vulnerabilities and also found 18 real-world zero-day vulnerabilities, meaning real bugs in production code that the competition organizers had not planted.

Better still: 43 vulnerabilities were patched without human assistance, and the average time to patch a vulnerability was 45 minutes, with no human debugging. The systems independently found issues, assessed their impact, created fixes, and verified that the fixes worked.

The Buttercup system by Trail of Bits took second place with a multi-agent architecture in which AI agents specializing in engineering, security analysis, and quality assurance collaborated autonomously to identify and resolve vulnerabilities.

Real-World Detection Performance

Outside of competitions, I was curious to see how these systems perform in production at real organizations.

ZeroThreat reports 98.9% accuracy with zero false positives in vulnerability scanning on its automated penetration testing platform. Zero false positives is especially notable because it implies that every alert the system raises is an actual security issue that needs to be addressed.

It is not only about accuracy; detection also has to be fast enough to matter. The OPSWAT system defeats 100% of user-simulation and anti-VM evasion techniques, meaning it can identify even advanced malware that tries to stay hidden in analysis environments.

The lesson from these case studies is that AI-driven zero-day detection is no longer confined to research laboratories; current production systems are delivering real security improvements. It is no longer just a theoretical technology; it is operational.

Integration with Threat Intelligence Feeds for Context

Why Context Matters

I soon learned one thing: even a good detection system works better when it is given context. That context comes from threat intelligence integration, which connects what is happening in your environment to what is happening around the world.

Threat intelligence feeds track emerging zero-day vulnerabilities, active attack campaigns, indicators of compromise (IOCs), and attacker tactics and techniques.

When your detection system flags suspicious behavior, threat intelligence helps answer key questions: Is it part of a known campaign? Have other organizations seen something similar? What follow-on actions commonly come after this kind of initial access?

The integration goes both ways. Your detection systems feed their observations back to threat intelligence platforms, contributing to community defense.

This exchange happens across industries, facilitated by Information Sharing and Analysis Centers (ISACs), and it creates a collective defense that no single organization could build alone.

Building Intelligence-Driven Detection

The best implementations I looked at do not treat threat intelligence as separate from detection; they make it part of the analysis pipeline.

AI systems can cross-reference observed behavioral anomalies with threat intelligence databases the moment they are detected. When an anomaly resembles zero-day exploitation indicators seen at other organizations, the confidence level rises and the automated response escalates accordingly.
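The cross-referencing step might look roughly like this. Everything here is an assumption made for the sketch: the feed contents, the fixed 0.3 confidence boost, the tier thresholds, and the tier names do not come from any specific platform.

```python
# Hypothetical indicators of compromise pulled from a threat intelligence feed.
FEED_INDICATORS = frozenset({"203.0.113.50", "bad-domain.example"})


def adjust_confidence(anomaly_score: float, observed: set[str],
                      feed: frozenset[str] = FEED_INDICATORS,
                      boost: float = 0.3) -> float:
    """Raise the score when any observed indicator appears in the feed, capped at 1.0."""
    if observed & feed:
        return min(1.0, anomaly_score + boost)
    return anomaly_score


def response_tier(confidence: float) -> str:
    """Map confidence to an illustrative response level."""
    if confidence >= 0.8:
        return "isolate_host"
    if confidence >= 0.5:
        return "alert_analyst"
    return "log_only"


score = adjust_confidence(0.6, {"203.0.113.50", "intranet.example"})
print(response_tier(score))                                   # isolate_host
print(response_tier(adjust_confidence(0.6, {"192.0.2.10"})))  # alert_analyst
```

The same anomaly score leads to two different responses, and the only difference is whether the outside world has already seen that indicator.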

Conversely, when new threat intelligence reports relevant indicators, detection systems can immediately hunt for the new zero-day campaign in historical data. More often than not, organizations find out that they were hit days or weeks earlier without being aware of it; the threat intelligence gives them the context to understand the attack in hindsight.
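Hunting backwards through old logs is conceptually just a filter. In this sketch the log schema, hostnames, dates, and the `retro_hunt` helper are all invented for illustration; real retro-hunts run as queries against a SIEM or data lake.

```python
def retro_hunt(historical_logs: list[dict[str, str]],
               new_indicators: set[str]) -> list[dict[str, str]]:
    """Return past log entries whose destination matches a newly published indicator."""
    return [entry for entry in historical_logs
            if entry["destination"] in new_indicators]


# Hypothetical log entries recorded before the indicator was known to be malicious.
logs = [
    {"date": "2025-01-03", "host": "hr-laptop-7", "destination": "updates.example.org"},
    {"date": "2025-01-04", "host": "build-srv-2", "destination": "198.51.100.23"},
    {"date": "2025-01-09", "host": "hr-laptop-7", "destination": "198.51.100.23"},
]

hits = retro_hunt(logs, {"198.51.100.23"})
print([(h["date"], h["host"]) for h in hits])
# [('2025-01-04', 'build-srv-2'), ('2025-01-09', 'hr-laptop-7')]
```

The earliest hit also gives a rough lower bound on how long the attacker has been inside, which is usually the first question an incident responder asks.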

This mutual process, with detection informing intelligence and intelligence enhancing detection, forms a continuous improvement cycle. The more data is shared and correlated across organizations, the faster the whole community can identify emerging threats.

For more detail on connecting intelligence feeds to detection systems, see Threat Intelligence Integration.

Limitations and Complementary Detection Strategies

What AI Still Can’t Do

I am truly impressed by AI detection capabilities, but I would be doing you a disservice if I hid the fact that there are real limits. Here is what AI systems still cannot get right:

Truly novel attacks: AI is not exhaustive and may fail to recognize very new or complex zero-days that differ dramatically from the training data. When an attack uses methods distinct enough that the model has never encountered anything like them, it may pass undetected.

False negatives are always possible: No detection system catches everything. AI models rely on the available body of knowledge about vulnerabilities, which means entirely unknown threats might not be detected.

The interpretability problem: It is not always clear how AI reaches complex decisions. This makes it hard to explain why a certain vulnerability was identified or overlooked, and it creates trust problems whenever you cannot confirm the rationale.

Adversarial evasion: More advanced attackers craft attacks specifically to deceive AI models. AI hallucination, where systems report excessive or nonexistent vulnerabilities, is also a major limitation.

State-of-the-art autonomous systems succeed at novel tasks only about a third of the time on industry benchmarks, and context windows are constrained by hardware, which limits coherence over long-running operations.

These limitations give defenders a temporary advantage, and it should not be mistaken for a permanent one.

Defense-in-Depth Approach

Given these limitations, the smart play is not to bet everything on AI detection. Rather, effective organizations layer several overlapping strategies:

Behavioral analysis is the main method of zero-day detection, spotting anomalies in network and system activity.

Endpoint protection monitors individual devices for suspicious activity and unauthorized code execution.

Network segmentation restricts how far attackers can go even when the initial compromise succeeds.

Zero-trust architecture requires continuous verification and trusts nothing, whether outside or inside the network perimeter.

Regular penetration testing gets ahead of attackers by finding vulnerabilities before they can.

In threat hunting, security personnel actively search for signs of compromise instead of waiting to be alerted.

SOAR-based incident response automation triggers containment actions within milliseconds when threats are detected.

The principle is simple: when organizations use defense-in-depth, a zero-day exploit has to evade several detection systems at once. Even if AI behavioral detection misses something, network segmentation limits the harm, and threat hunting during a manual investigation may still uncover it.

No single technology solves zero-day threats. Successful defense combines AI-enhanced detection with architectural controls, human expertise, and processes for continuous improvement.

Wrapping This Up

Having dived into zero-day detection and AI capabilities, here is my frank opinion: we are in the middle of a genuinely major shift in cybersecurity. The move from signature-based detection (which cannot detect zero-days at all) to behavioral AI systems with 98-99% detection rates is the key change in capability.

The detection accuracy is not the most impressive part to me; it is the speed. Cutting detection time from weeks to seconds or even milliseconds allows organizations to actually act before enormous damage has been done.

The DARPA competition results, with autonomous systems locating and patching vulnerabilities in under an hour? That is not incremental improvement; it is a paradigm shift.

All in all, I also came away with realistic expectations. AI detection is not magic and does not replace a solid security foundation. The companies doing this effectively have integrated AI-based behavior analysis with network segmentation, zero-trust principles, threat intelligence, and human expertise. They use AI not as a silver bullet but as a powerful instrument within a bigger strategy.

If you are a security professional, this technology is mature enough to put into use today; the case studies show it working in production. If you are entering the field of cybersecurity, behavioral detection and machine learning skills will become an increasingly valuable part of your career.

The threat environment is not getting any easier. Attackers are also using AI, and the gap between a vulnerability being discovered and being exploited keeps narrowing.

But for the first time, defenders also have tools that can actually intercept unknown threats before they cause irreparable damage. That is something to pay attention to.

Related Resources:

- Predictive Vulnerability Analysis – Forecasting vulnerabilities with AI-driven predictive analysis.

- AI Threat Detection Explained – Understanding how AI detection works.

- Threat Intelligence Integration – Integrate the intelligence feeds with the detection systems.

I’m a technology writer with a passion for AI and digital marketing. I create engaging, useful content that bridges the gap between complex technology concepts and everyday readers. My writing aims to make learning easy, spark curiosity, and encourage participation. I continue to research innovation and technology. Let’s connect and talk technology!