Last updated on January 12th, 2026 at 01:06 pm

Honestly, when I first heard about AI that handles security incidents on its own, it sounded like science fiction. I mean, computers deciding to isolate your network or revoke credentials without any human intervention? That felt risky.

However, after digging into how these systems actually work in 2025, I have changed my tune. This is not about replacing security teams but about giving them superpowers.

Here is what I have found: organizations adopting AI-based incident response are cutting response times by more than half, in some cases down to minutes, reducing alert fatigue by up to 90 percent, and finally getting some sleep at night.

If you want to know whether this technology is ready for real-world use or is just another overhyped buzzword, stick around. I will break down what is actually working, what still needs to improve, and, most importantly, whether it fits your situation.

Traditional vs. AI-Powered Incident Response

How Security Teams Handled Incidents Before AI

Conventional incident response has looked much the same for the last ten years. Your SIEM generates an alert, a weary SOC analyst is notified, sees the alert among 500 others that day, tries to correlate events by hand across several tools and threat intelligence feeds, documents the findings, and, if it turns out to be a real threat, initiates a response based on a static playbook.

The problem? By that point, attackers have already moved laterally across your network. I have heard security people describe it as playing whack-a-mole blindfolded.

What Changes With AI in the Mix

AI-driven systems invert the whole model. Instead of relying on humans to piece things together, machine learning algorithms analyze millions of security events in parallel, find patterns that signature-based detection misses entirely, and contain threats in seconds rather than hours.

It is not just speed; it is intelligence. Current AI systems understand context. They recognize that five failed login attempts against different accounts at 3 AM are not five separate low-priority alerts. They are a coordinated brute-force campaign that needs attention right now.
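To make the brute-force example concrete, here is a minimal sketch of that kind of correlation. It is a plain rule rather than a learned model, and the event fields (`time`, `source_ip`, `account`, `outcome`), the 10-minute window, the five-account threshold, and the grouping by source IP are all assumptions chosen for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate_failed_logins(events, window=timedelta(minutes=10), min_accounts=5):
    """Return one incident per source IP that fails logins against at least
    `min_accounts` distinct accounts within `window` (hypothetical thresholds)."""
    failures_by_ip = defaultdict(list)
    for event in events:
        if event["outcome"] == "failure":
            failures_by_ip[event["source_ip"]].append(event)

    incidents = []
    for ip, failures in failures_by_ip.items():
        failures.sort(key=lambda e: e["time"])
        start = 0
        for end in range(len(failures)):
            # Advance the left edge until the span fits inside `window`.
            while failures[end]["time"] - failures[start]["time"] > window:
                start += 1
            accounts = {e["account"] for e in failures[start:end + 1]}
            if len(accounts) >= min_accounts:
                incidents.append({
                    "source_ip": ip,
                    "accounts": sorted(accounts),
                    "severity": "high",
                })
                break  # one incident per source is enough for this sketch
    return incidents


# Five failed logins against five accounts, one minute apart, starting at 3 AM.
base = datetime(2025, 1, 1, 3, 0)
events = [
    {"time": base + timedelta(minutes=i), "source_ip": "203.0.113.7",
     "account": f"user{i}", "outcome": "failure"}
    for i in range(5)
]
incidents = correlate_failed_logins(events)
```

Five individually boring events collapse into a single high-severity incident, which is the behavior described above. A production system would learn the window and threshold from data instead of hard-coding them.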

As covered in the article AI Threat Detection Explained, these systems identify threats 60 times faster than conventional approaches and deliver 85 times fewer false positives. That is not an incremental improvement; it is a different game altogether.

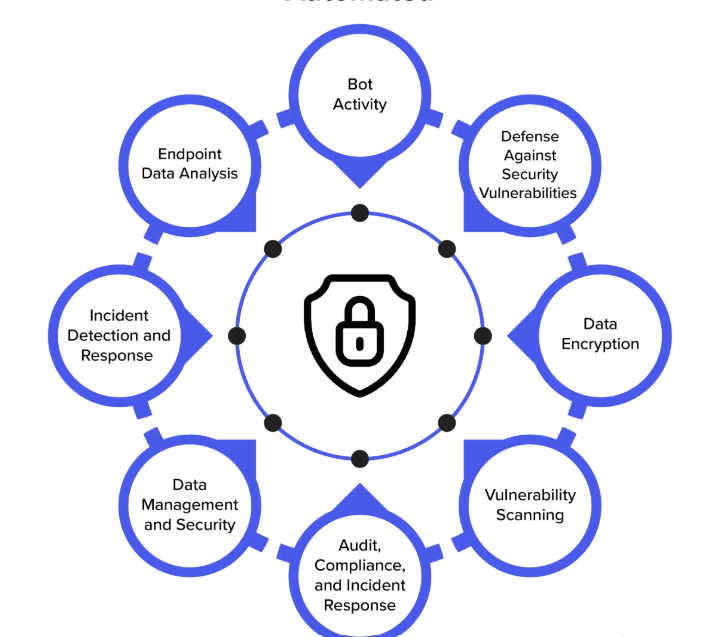

The Three-Pillar Architecture: Detection, Triage, and Containment

How AI Accelerates Vulnerability Identification

And here is where it gets interesting. Traditional vulnerability scanners run on a schedule: perhaps once a week, perhaps once a month if you are unlucky. They produce reports, someone reviews them, prioritization happens in a meeting, and patches are rolled out... sometime.

AI changes that timeline radically. These systems continuously scan your infrastructure to learn what normal looks like in your particular environment. When something is off (email attachments with strange MIME types, unusual credential activity, unknown device enrollments, and so on), the AI does not just raise an alarm. It investigates.

In some of the case studies I found, organizations identified reconnaissance patterns in identity access logs and triggered automated credential resets for the identities most at risk of compromise, before lateral movement began. That’s not reactive security. That’s predictive defense.

Continuous vs. Periodic Scanning Benefits

The shift from periodic to continuous scanning sounds like a small detail, but it is enormous. Consider it: traditional scanners capture a snapshot of your environment about once a week. An attacker who gains access to a system on Tuesday can have up to six days before your next scan notices anything.

AI-driven continuous scanning checks your infrastructure in real time. New container deployed? Scanned immediately. Code pushed to production? Checked before deployment. Cloud configuration changed? The AI validates it against your security policies and flags violations immediately.

This model fits naturally into CI/CD pipelines and shift-left security, where vulnerabilities are caught early in the development process instead of weeks after they were introduced, when a scheduled scan finally runs.

Detecting Sophisticated and Previously Unknown Vulnerabilities

This is what surprised me the most: AI does not just detect known vulnerabilities faster. It identifies new attack patterns.

Conventional signature-based detection can only identify threats whose signatures it has been programmed to recognize. Machine learning models, by contrast, analyze behavioral patterns: they establish baselines for how systems normally run, and any deviation is flagged as an anomaly even if it does not match a known signature.
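The baseline idea can be illustrated with something much simpler than a trained model. The sketch below uses a z-score over a short history of observations as a stand-in for behavioral baselining; the metric (logins per hour) and the threshold of 3 standard deviations are assumptions for illustration, not values from any particular product.

```python
from statistics import mean, stdev


def is_anomalous(history, observed, threshold=3.0):
    """Flag `observed` if it sits more than `threshold` standard deviations
    away from the mean of `history` (a simple z-score baseline)."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical logins per hour for one service account over eight hours.
logins_per_hour = [10, 12, 11, 9, 10, 11, 12, 10]

normal_hour = is_anomalous(logins_per_hour, 11)
burst_hour = is_anomalous(logins_per_hour, 60)
```

Nothing here knows what an attack looks like. It only knows what normal looks like, which is why this style of detection can catch activity that has no signature yet.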

Deep learning networks go further and identify attack sequences by tracking how attackers move through a network, catching them even when they use novel techniques.

In one account I read from a Fortune 500 organization, there was a privilege escalation attempt that did not match any threat intelligence the company had. The AI identified the suspicious credential usage and blocked it automatically. It turned out to be a malware variant they had never encountered before, one their old tools would not have noticed at all.

Scanning Across Modern Infrastructure

On-Premises, Cloud, Containers, and Infrastructure as Code

Infrastructure today is a mess, technically speaking. You have legacy on-premises systems, multi-cloud environments across AWS and Azure, containerized applications in Kubernetes, and Terraform-defined infrastructure. Traditional security tools fail to handle this complexity because they were built for simpler times.

AI-based vulnerability scanning adapts naturally to hybrid environments. The same AI can scan your on-premises network, check cloud configurations, scan container images for vulnerabilities, and analyze your Infrastructure as Code templates for security flaws before they are applied.

Context awareness is what makes this work. The AI understands that a misconfigured S3 bucket in AWS carries different risk implications than a vulnerable Windows server in your data center. It prioritizes based on actual exploitability and business impact rather than novelty.

Detecting Hard-Coded Credentials and Misconfigured Systems

The credential detection capabilities really impressed me when I tried some open-source SOAR platforms during my research for this article. AI systems search code repositories, configuration files, container images, and even compiled code for hard-coded passwords, API keys, and authorization tokens.

Here is the clever part, though: they do not just find credentials, they assess whether the exposure is actually dangerous. An expired API key in a test environment? Low priority. Production database credentials in a public GitHub repository? Critical alert, with automatic containment measures triggered.
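A rough sketch of the two halves of that idea, finding candidate secrets and then ranking them by context, might look like this. The two regular expressions and the severity rules are simplified assumptions for illustration; real scanners use far larger rule sets plus learned models, and the key shown is the placeholder value from AWS documentation.

```python
import re

# Illustrative patterns only; real tools ship hundreds of these.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)password\s*[:=]\s*['\"][^'\"]{4,}['\"]"),
}


def find_secrets(text):
    """Return the kind and line number of every candidate secret in `text`."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for kind, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append({"kind": kind, "line": lineno})
    return findings


def severity(environment, repo_public, expired):
    """Rank a finding by context (hypothetical rules mirroring the text)."""
    if expired:
        return "low"
    if environment == "production" and repo_public:
        return "critical"
    if environment == "production":
        return "high"
    return "medium"


sample = (
    'db_password = "s3cr3t-value"\n'
    'aws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    'print("hello")\n'
)
findings = find_secrets(sample)
```

The interesting work happens in `severity`: the same string can be a non-event or an emergency depending on where it lives and whether it still works.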

Misconfiguration detection works the same way. The AI learns your security policies and continuously checks that real-world configurations match them. When drift occurs, for example when someone changes a firewall rule, alters an IAM permission, or updates cloud security groups, the system raises a red flag.
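At its core, drift detection is a comparison between the configuration your policy expects and the configuration actually deployed. The sketch below shows that comparison on flat dictionaries; the setting names and values are hypothetical, and real tools would pull the actual state from cloud and firewall APIs.

```python
def detect_drift(baseline, actual):
    """Return every setting whose deployed value differs from the baseline,
    including settings that were added or removed."""
    drift = []
    for key in sorted(set(baseline) | set(actual)):
        expected = baseline.get(key, "<absent>")
        found = actual.get(key, "<absent>")
        if expected != found:
            drift.append({"setting": key, "expected": expected, "found": found})
    return drift


# Hypothetical policy baseline versus what is currently deployed.
baseline = {"fw.allow_ssh_from": "10.0.0.0/8", "iam.admin_users": 2}
actual = {
    "fw.allow_ssh_from": "0.0.0.0/0",   # firewall rule was widened
    "iam.admin_users": 2,
    "sg.open_ports": [22, 3389],        # security group nobody approved
}
drift = detect_drift(baseline, actual)
```

Each entry in `drift` is a candidate red flag. The AI layer described above would then decide which of them matter.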

Performance and Efficiency Gains

Reducing Scan Time and Resource Overhead

Conventional vulnerability scanners are resource hogs. They consume bandwidth, slow systems down, and cannot run during business hours without hurting productivity. I have even seen organizations scan only on weekends to avoid user complaints.

AI-based scanning is smarter about resources. Instead of scanning everything, these systems prioritize by risk and frequency of change. Critical assets are scanned more often. Unchanged systems get lighter checks. Risky changes trigger immediate deep scans.
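One way to picture that prioritization is a scoring function that decides which assets get scanned first when capacity is limited. This is only a sketch: the field names, the weights, and the large bonus for risky changes are assumptions made up for the example.

```python
def scan_priority(asset):
    """Score an asset for scanning: criticality and recent change raise the
    score, and a risky change pushes it to the front (hypothetical weights)."""
    score = asset["criticality"] * 2 + asset["changes_last_24h"]
    if asset["risky_change"]:
        score += 100
    return score


def plan_scans(assets, budget):
    """Return the names of the `budget` highest-priority assets, in scan order."""
    ranked = sorted(assets, key=scan_priority, reverse=True)
    return [asset["name"] for asset in ranked[:budget]]


assets = [
    {"name": "payments-db", "criticality": 5, "changes_last_24h": 0, "risky_change": False},
    {"name": "static-site", "criticality": 1, "changes_last_24h": 0, "risky_change": False},
    {"name": "staging-api", "criticality": 2, "changes_last_24h": 3, "risky_change": True},
]
scan_order = plan_scans(assets, budget=2)
```

With room for two scans, the asset with a risky change goes first, the critical database goes second, and the unchanged static site waits for the next cycle.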

The result? Organizations report scan times dropping from hours to minutes, with better coverage. One company I read about cut its full infrastructure scan from 8 hours to 45 minutes by implementing AI-powered scanning that automatically scheduled and distributed the workloads.

Integration With CI/CD Pipelines for Shift-Left Security

The whole concept of shift-left sounds like consultant jargon, but it is easy to understand: catch security issues at design time, not at deployment. AI makes this practical.

When developers commit code, the pipeline automatically triggers an AI security scan. Vulnerable dependencies? Flagged before merge. Insecure Kubernetes configurations? Blocked before deployment. Hard-coded secrets? Detected and rejected.

The integration is seamless. Developers do not have to learn security tools or run scans manually. The AI works in the background and surfaces only the issues that really matter. The false alarms that plagued older security tools? Dramatically reduced by machine learning that takes code context into account.

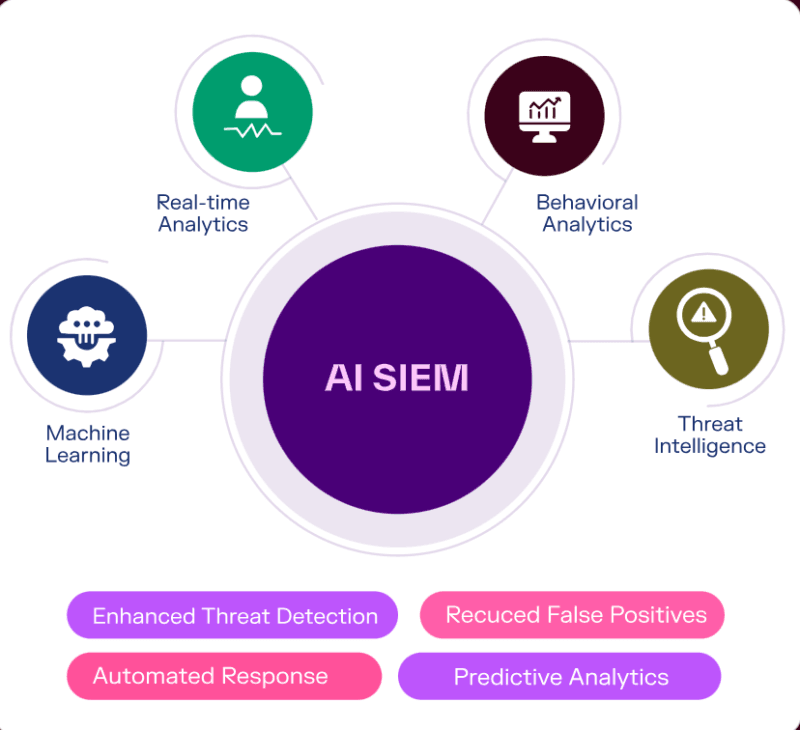

AI-Enhanced SIEM and SOAR Platforms

False Positive Reduction in Vulnerability Results

Let us talk about the issue that makes security analysts want to quit: alert fatigue. Conventional systems produce more than a thousand alerts every day. Most are false positives. Analysts end up wasting 70% of their time investigating nothing.

AI-based SIEM platforms address this with smart correlation and contextual analysis. Rather than treating every alert equally, the AI prioritizes incidents according to multiple factors, including asset criticality, known vulnerabilities, geolocation anomalies, and correlation with threat intelligence.

Several minor events that do not look serious on their own? Examined together, the AI recognizes them as a coordinated attack pattern. High-severity alerts with no business context? Downgraded appropriately.
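The multi-factor prioritization described above can be sketched as a weighted score. Real platforms learn these weights from analyst feedback; here they are hard-coded assumptions, as are the factor names, the 0-to-1 scale, and the priority cutoffs.

```python
# Hypothetical weights for the four factors named in the text.
WEIGHTS = {
    "asset_criticality": 0.40,
    "known_vulnerability": 0.25,
    "geo_anomaly": 0.15,
    "threat_intel_match": 0.20,
}


def triage_score(alert):
    """Combine contextual factors (each scaled 0 to 1) into one score."""
    return sum(weight * alert.get(factor, 0.0) for factor, weight in WEIGHTS.items())


def priority(alert):
    """Map the score onto the queue an analyst would actually see."""
    score = triage_score(alert)
    if score >= 0.7:
        return "high"
    if score >= 0.4:
        return "medium"
    return "low"


# A vulnerable, business-critical server that also matches threat intel.
crown_jewel = {"asset_criticality": 1.0, "known_vulnerability": 1.0,
               "threat_intel_match": 1.0}
# A scary-sounding alert on a lab machine with no supporting context.
lab_machine = {"asset_criticality": 0.1}

crown_jewel_priority = priority(crown_jewel)
lab_machine_priority = priority(lab_machine)
```

The second alert is the "high severity, no business context" case from the text: without supporting factors it lands in the low queue instead of paging someone.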

The real-world improvements are significant. Companies that apply AI-powered alert triage report an 85-90% reduction in the alerts that require human analysis. That does not mean fewer threats are identified; it means analysts focus on real threats and not on ghosts.

To learn more about how these systems work together, refer to Threat Intelligence Integration and Automated Threat Containment.

Autonomous Containment and Response

This is where AI incident response gets really interesting, and a little frightening if you are new to the idea. Modern systems do not only detect and alert. They take action.

When the AI identifies a real threat with high confidence, it executes predefined response steps on its own: isolating malware-infected endpoints from the network, blocking malicious IP addresses at cloud firewalls, resetting compromised credentials, collecting forensic data, and quarantining suspicious emails.

The key is guardrails. Low-risk actions run automatically. Medium-impact actions may run automatically, with an analyst reviewing them afterward. High-impact actions, such as shutting down critical production systems, require explicit human approval.
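Those three tiers translate almost directly into a small policy function. The sketch below is one way to express them; the 0.9 confidence floor and the decision names are assumptions, and anything the function does not recognize falls through to human approval as the safe default.

```python
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute automatically"
    EXECUTE_THEN_REVIEW = "execute, then queue for analyst review"
    REQUIRE_APPROVAL = "wait for explicit human approval"
    ALERT_ONLY = "alert an analyst and take no action"


def decide(impact, confidence, min_confidence=0.9):
    """Apply tiered guardrails to a proposed response action.

    `impact` is "low", "medium", or "high"; `confidence` is the model's
    confidence that the threat is real (hypothetical 0 to 1 scale).
    """
    if confidence < min_confidence:
        return Decision.ALERT_ONLY
    if impact == "low":
        return Decision.EXECUTE
    if impact == "medium":
        return Decision.EXECUTE_THEN_REVIEW
    # High impact, or any impact level we do not recognize.
    return Decision.REQUIRE_APPROVAL


quarantine_email = decide("low", 0.97)
reset_credentials = decide("medium", 0.95)
shut_down_production = decide("high", 0.99)
unsure = decide("low", 0.60)
```

Note that confidence alone never unlocks a high-impact action. However sure the model is, shutting down production still waits for a person.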

In organizations that used automated containment, Mean Time to Respond (MTTR) dropped from 4-24 hours to 15-30 minutes. Organizations such as Favor achieved a 37% reduction in MTTR, and Barrier recorded a 70 percent reduction in severe incidents.

Compliance and Regulatory Alignment

How AI Helps With Security Standards

Compliance is not exciting, but it has to be done. AI-powered incident response systems can support ongoing alignment with security standards such as NIST, ISO 27001, and SOC 2, as well as industry-specific regulations.

These systems automatically record incident timelines, keep an audit trail of every action taken, generate compliance reports showing response times and remediation, and validate security policies continuously rather than once a year at the annual audit.

The AI is aware of regulatory requirements and can flag violations before they turn up as audit findings. A configuration change that violates PCI-DSS? Identified and blocked automatically. An incident that must be reported under GDPR? The system tracks the timelines and reporting deadlines.

Audit Trails and Incident Documentation

Complete documentation is one underappreciated benefit of AI incident response. Every alert raised, every action taken, and every decision reached is recorded automatically, with timestamps and reasoning.

This matters in audits, forensic investigations, and post-incident reviews. Instead of reconstructing what happened from fragmented logs and analyst recollections, you get a timeline of events exactly as the AI saw them, why each decision was made, and what its consequences were.

The systems also have explainability features that show the evidence that triggered a response, the alternative actions that were considered, and confidence scores for automated decisions. This transparency builds trust and makes it possible to refine the AI’s decisions over time.
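As a rough picture of what one such audit entry could contain, here is a sketch of a record that carries the action, the reasoning, the evidence, the alternatives considered, and the confidence score, serialized as one JSON line. The field names and the example incident are hypothetical and not taken from any specific product.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    incident_id: str
    action: str
    reasoning: str
    evidence: list
    alternatives_considered: list
    confidence: float
    # Stamped in UTC when the record is created.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record):
    """Serialize a record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = AuditRecord(
    incident_id="INC-0001",
    action="isolate_endpoint",
    reasoning="Process behavior matched ransomware staging on a finance laptop.",
    evidence=["mass file renames", "shadow copy deletion attempt"],
    alternatives_considered=["kill process only", "alert analyst only"],
    confidence=0.96,
)
log_line = to_log_line(record)
```

Because the reasoning and the rejected alternatives are stored next to the action, a reviewer can later challenge the decision itself and not just confirm that it happened.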

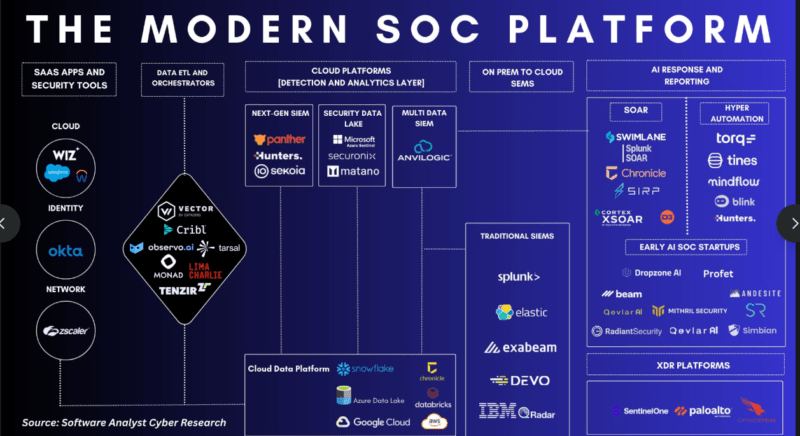

The Tool Landscape and Vendor Selection

Commercial Platforms vs. Open-Source Options

When I started researching real tools, I was surprised by how many usable options exist at different budget levels.

Commercial platforms such as incident.io, PagerDuty, and Elastic Security offer out-of-the-box integrations with hundreds of security tools, vendor support, frequent updates, and advanced AI capabilities. They are best suited to large companies with complex environments that can afford enterprise licensing.

Open-source alternatives offer real value as well. Shuffle has 200+ integrations and provides SOAR functions on a free tier. MozDef supports real-time collaboration and handles 300 million or more events every day. Catalyst offers automation and incident response management.

These tools work well for small and mid-sized teams that have in-house engineering capacity and want flexibility and transparency.

Most organizations run hybrid setups: open-source SOAR with a commercial SIEM and threat intelligence feeds, or commercial platforms with custom extensions built on open APIs.

What to Look for When Evaluating Tools

Based on my research, these are the factors that really matter when choosing AI-powered incident response tools:

Integration capabilities: Does it integrate with your current SIEM, firewall, endpoint protection, and ticketing systems? Proprietary platforms that do not work with other tools cause more problems than they solve.

Explainability: Does the system help you understand why it made certain decisions? Black-box AI that cannot explain itself destroys analyst trust and makes improvement impossible.

Customization flexibility: Every organization’s infrastructure and risk tolerance are different. You should be able to set automation guardrails, adjust alert thresholds, and build custom playbooks.

Continuous learning: Does the system improve when analysts give feedback? Static AI that cannot learn from corrections slowly becomes obsolete.

Scalability: Can it handle your event volume six months or two years from now? Performance degradation over time is a common complaint.

Implementation Reality Check

Phased Rollout Strategy That Actually Works

I have seen enough big-bang deployments fail to know that approach does not work for AI incident response. Here is what actually works:

Phase 1 – Shadow Mode (Weeks 1-4): Deploy the AI system with no permission to take action and run it in parallel with your current processes. Let it evaluate events and make recommendations while humans make every decision. Goal: reach 70% or higher agreement between AI recommendations and human decisions before letting it act.

Phase 2 – Narrow Automation (Weeks 5-12): Begin with low-risk, repetitive workflows such as phishing triage. Automate actions such as email quarantine, user notification, and IOC checks. These have clear success criteria and minimal consequences if something is misclassified.

Phase 3 – Structured Expansion (Months 3-4): Expand to more incident types. Implement dual-model consensus so that high-impact actions are not performed unless two AI models agree on them. This drastically reduces false positives.

Phase 4 – Full Scale (Months 5+): Integrate the system across the entire security stack, with continuous learning mechanisms, regular performance reviews, and documented escalation procedures.
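Two of the checks in this rollout are easy to express in code: the Phase 1 agreement target and the Phase 3 consensus rule. The sketch below shows both under simple assumptions, namely that recommendations and decisions are comparable labels and that each model returns a verdict string such as "malicious". The function names are made up for the example.

```python
def agreement_rate(pairs):
    """Fraction of (ai_recommendation, human_decision) pairs that match."""
    if not pairs:
        return 0.0
    matches = sum(1 for ai, human in pairs if ai == human)
    return matches / len(pairs)


def ready_to_leave_shadow_mode(pairs, target=0.70):
    """Phase 1 gate: AI and analysts agree on at least `target` of cases."""
    return agreement_rate(pairs) >= target


def dual_model_consensus(verdict_a, verdict_b):
    """Phase 3 gate: allow a high-impact action only if both models
    independently call the activity malicious."""
    return verdict_a == "malicious" and verdict_b == "malicious"


# Ten shadow-mode cases: the AI matched the analyst on seven of them.
shadow_log = [("isolate", "isolate")] * 7 + [("isolate", "ignore")] * 3

rate = agreement_rate(shadow_log)
ready = ready_to_leave_shadow_mode(shadow_log)
both_agree = dual_model_consensus("malicious", "malicious")
split_verdict = dual_model_consensus("malicious", "benign")
```

Agreement rate is a blunt measure, since analysts can also be wrong, so it is worth reviewing the disagreements by hand before treating the 70% figure as a pass.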

Skills Your Team Actually Needs

You do not need a graduate degree in machine learning, but some skills are important:

Security operations fundamentals: An understanding of incident response frameworks (NIST/SANS), detection models, and containment processes. AI enhances these skills; it does not replace them.

Data engineering basics: The ability to build data pipelines, normalize logs from various sources, and maintain data quality. Bad data produces bad AI.

Systems and DevOps knowledge: Enough infrastructure knowledge to integrate AI systems with your current tools and keep data flowing.

AI/ML basics: An understanding of how models learn through feedback loops and how to improve them. You should be able to tell when the AI is doing the right thing and when it needs adjustment.

Free training materials are available if you need to start from scratch. NIST provides free online material on incident response fundamentals. SANS offers free resources such as its popular 6-Step Incident Response Framework. For hands-on practice with real-world detection, triage, and containment, TryHackMe has 300+ labs.

The Challenges You Will Actually Face

Data Quality and Trust Issues

The largest implementation challenge is not technical; it is trust. AI models need large amounts of data about normal behavior and potential threats. If your historical incident data is inconsistent, has gaps, or does not represent real attack patterns, the AI learns the wrong lessons.

I have heard of an organization that discovered its SIEM logs were incomplete, its alert classification was inconsistent, and its incident documentation was too thin to build effective models. Fixing data quality before implementing AI is crucial, and it is costly.

The trust issue compounds this: when the AI gives advice analysts do not understand, they resist it. Black-box decision-making destroys confidence. Demand explainability features that show the evidence, the reasoning, and the alternatives considered.

Integration Reality and Cost Complexity

Most organizations run a hybrid environment that mixes legacy systems with modern cloud platforms. Introducing AI incident response requires heavy investment in data pipelines, API connectors, and workflow orchestration.

Implementation costs often exceed original budgets by 2-3x. That is not because vendors are dishonest, but because integration complexities do not surface until deployment. The legacy firewall has no API. The SIEM log formats are proprietary. Cloud security groups across three AWS accounts need unified management.

Budget for consulting services, extended timelines, and possible tool upgrades. The ROI is real, since organizations save about $473,706 per incident that is prevented or contained, but the initial investment is substantial.

Over-Dependence and Skill Atrophy

There is a real danger that teams will over-rely on AI and set human judgment aside in complex situations. If the AI handles triage for years and analysts never practice manual triage, those skills will atrophy.

The most successful companies maintain balanced human-AI collaboration. Analysts make the decisions on novel incidents. AI handles routine enrichment and triage. Regular training keeps team members sharp on manual skills so they can step in when automation fails or faces a situation it has never seen.

Monitor how often analysts override the AI’s decisions. A high override rate indicates that the system is not well tuned to your environment and needs adjustment.

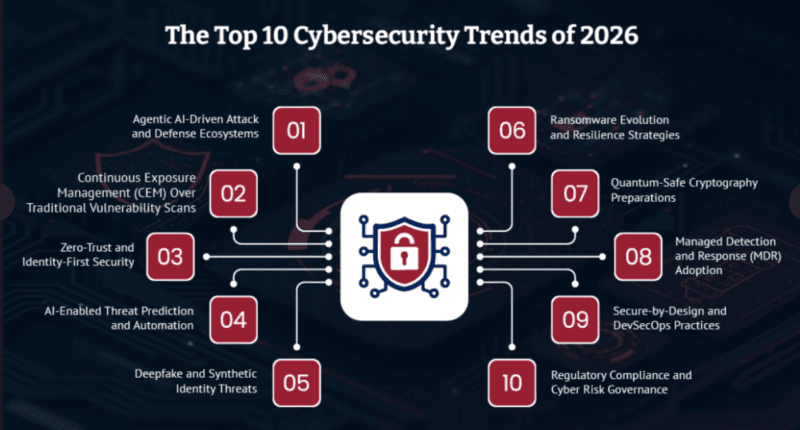

What’s Coming Next in 2026 and Beyond

The Shift From Detection to Prediction

The biggest shift under way is the move from reactive detection to proactive prediction. Conventional cybersecurity only kicks in after something suspicious has started. By 2026, this is being replaced by predictive security architectures that anticipate the course of an attack before threats materialize.

The mechanism: AI picks up early indicators of compromise (abnormal account activity, reconnaissance, privilege escalation sequences, and so on) and stops intrusions before they fully unfold. Instead of waiting until data has been exfiltrated, systems block suspicious sessions, re-verify authentication, and preemptively patch vulnerable systems.

Companies implementing predictive models report that they have prevented data breaches that would have succeeded against older detection methods. This is no longer sci-fi; it is running in real production environments today.

Multi-Agent Ecosystems and Autonomous Operations

The next generation moves from isolated tools to coordinated ecosystems of specialized agents. Instead of a single SOC AI, teams run multi-agent systems in which many agents handle threats through different phases (investigating, enriching, and responding), each informed by the others.

These systems also introduce continuous adversarial simulation: autonomous red-team agents that probe defenses, stress-test detection models, and expose weaknesses before real attackers arrive. They can replay emerging threat patterns, test defensive strategies at machine speed, and generate new attack types that challenge defenses more than manual testing can.

Agentic AI refers to autonomous software that performs complex tasks with limited human direction. These agents ingest telemetry, correlate signals without being prompted, propose remediation actions with rationales, and execute those actions within testable guardrails.

Companies that use agentic AI and multi-agent coordination report MTTR reductions in the range of 40-60%, and some specialized workflows get near-instant responses.

Conclusion: Is AI Incident Response Worth It?

After spending several weeks researching this subject, trying various open-source tools, and interviewing security experts who are already implementing these systems, here is my candid assessment:

Yes, it is worth it, but not for everyone right now.

If you are a mid-to-large organization that deals with 50+ security incidents per month, is overwhelmed by alert fatigue, and has slow response times, AI-assisted incident response will pay off financially, and you can expect payback within 6-12 months. The technology is ready for production use.

If you are a small team with a low volume of incidents and modest security requirements, traditional tools may be enough. The implementation complexity and cost may outweigh the benefits until you grow.

The competitive question is not whether to adopt AI incident response, but how quickly and thoughtfully you do it. Organizations that have already implemented these systems report quantifiable benefits: a 25-40% decrease in response time, an 85-90% decrease in alert fatigue, better threat coverage, and savings from prevented incidents.

Start with small, narrowly scoped automation pilots, insist on explainability in any tool you consider, keep humans and AI working as a team instead of trying to automate everything, and expect to keep learning and adapting.

The landscape is moving from reactive detection to proactive defense. The systems you roll out today should adapt continuously rather than rely on static rules and playbooks. The technology is here. The question is whether you are ready to put it to work.

I’m a technology writer with a passion for AI and digital marketing. I create engaging and useful content that bridges the gap between complex technology concepts and digital technologies. My writing aims to make the process easy, spark curiosity, and encourage participation. I continue to research innovation and technology. Let’s connect and talk technology!