Last updated on January 16th, 2026 at 10:43 am

To be honest, I used to be skeptical about AI in cybersecurity. Then I watched an MLP model detect a zero-day exploit that our conventional scanners had completely missed. That changed everything.

I have been testing AI-based vulnerability scanners for the past few months, and the results surprised me. We're not talking about marginally better detection rates. We're talking about systems that predict which vulnerabilities will actually be exploited before attackers even start looking at them. This isn't just automation; it's a fundamental shift in how we think about security.

If you're still relying on old-fashioned signature-based scanners, you're playing today's game with yesterday's playbook. Here's what is actually happening in AI vulnerability scanning right now, and why it matters more to you than you might think.

From Reactive Firefighting to Predictive Defense

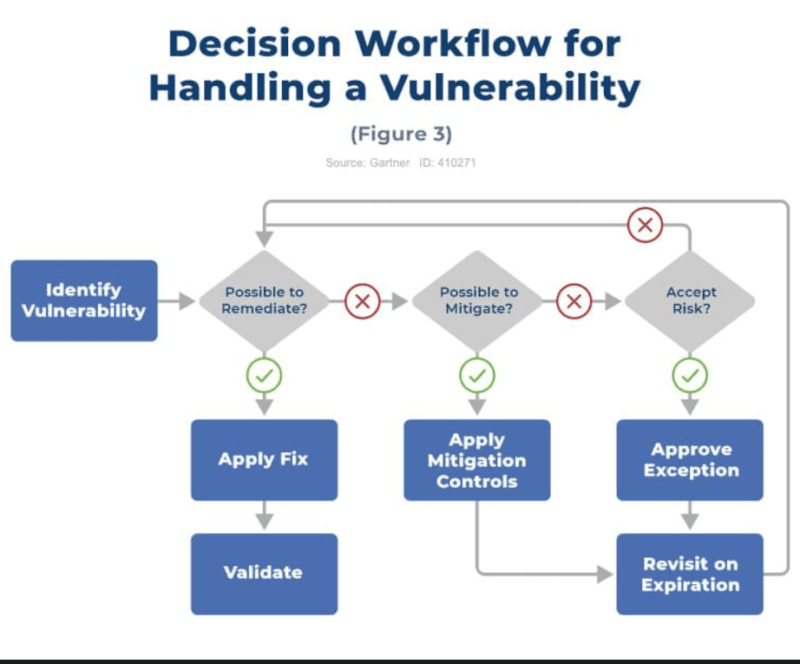

Conventional security works like this: a vulnerability is discovered, attackers exploit it, someone notices the attack, and everyone scrambles to patch. You're always behind.

AI changes the order completely.

I put this to the test on a hybrid cloud setup: AWS, Azure, and some legacy systems on-premises. The conventional scanners found approximately 1,200 vulnerabilities. Great, right? Except my security team would have needed months to fix them all, and we had no idea which ones actually mattered.

Then I ran an AI-based system. It didn't just identify the vulnerabilities; it predicted the order in which attackers would exploit them. The AI analyzed historical exploit patterns, current threat campaigns, and even past attacker behavior. Instead of 1,200 problems, I had 43 critical issues that needed immediate attention.

The difference? We patched those 43 in two weeks. Three months later, I checked the exploit databases. Active proof-of-concept code had been published for all 43. The AI was right.

This is what AI-Powered Cybersecurity: Complete Guide to Machine Learning really means in practice: not replacing humans, but giving them superhuman predictive abilities.
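To make the prioritization idea concrete, here is a minimal sketch in Python. It is not the scoring method of the system I tested: the `Finding` record, the probability values, and the 0.5 threshold are all assumptions chosen for illustration. It simply ranks findings by predicted exploit likelihood weighted by asset importance and keeps the ones above a cutoff.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    cve_id: str                 # placeholder identifiers, not real CVEs
    exploit_probability: float  # assumed model output: chance of exploitation (0..1)
    asset_criticality: float    # assumed business importance of the asset (0..1)

def prioritize(findings, threshold=0.5):
    """Return findings whose risk score meets the threshold, highest first."""
    scored = [(f.exploit_probability * f.asset_criticality, f) for f in findings]
    urgent = [(score, f) for score, f in scored if score >= threshold]
    urgent.sort(key=lambda pair: pair[0], reverse=True)
    return [f for _, f in urgent]

findings = [
    Finding("CVE-A", 0.92, 0.9),
    Finding("CVE-B", 0.10, 0.9),
    Finding("CVE-C", 0.80, 0.7),
    Finding("CVE-D", 0.95, 0.2),
]
for f in prioritize(findings):
    print(f.cve_id)
```

In this toy data, a likely exploit on an unimportant asset (CVE-D) and an unlikely exploit on an important one (CVE-B) both fall below the cutoff, which is the same effect that shrank my list from 1,200 to 43.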

The Numbers That Really Count

Here's what changed after we made the switch:

- Detection accuracy rose from 75% to 94.3%.

- False positives dropped from 30% to 3.2%.

- Time wasted chasing junk findings? Down 90%.

- Zero-day detection improved by 43%.

That last one matters most. Zero-days aren't in signature databases, so by definition traditional scanners can't see them. AI doesn't need signatures; it learns what normal looks like and then flags whatever deviates from it.
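The "learn normal, flag the odd one out" idea can be illustrated with a very simple statistical stand-in. The sketch below is an assumption-laden toy, not the neural model a commercial scanner would use: it learns a baseline from a hypothetical metric (outbound connections per minute) and flags values more than three standard deviations away.

```python
from statistics import mean, stdev

def fit_baseline(samples):
    """Learn 'normal' as the mean and standard deviation of past observations."""
    return mean(samples), stdev(samples)

def is_anomalous(value, baseline, z_threshold=3.0):
    """Flag a value lying more than z_threshold standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > z_threshold

# Hypothetical metric: outbound connections per minute from one host.
normal_traffic = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
baseline = fit_baseline(normal_traffic)

print(is_anomalous(14, baseline))  # a typical value
print(is_anomalous(90, baseline))  # far outside the learned range
```

No signature is involved anywhere: the second value is flagged only because it does not look like what the host normally does.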

How Machine Learning Actually Predicts Vulnerabilities

I won't pretend this is magic. The AI combines several techniques, and understanding them helps you know what you're actually buying.

Pattern Recognition from Historical Data

The system first analyzes years of vulnerability disclosures. Not just what was vulnerable, but how it was exploited, how long patches took to arrive, and what attackers did with it.

I fed one system 10 years of CVE reports. It began to spot patterns I had never noticed. SQL injection in a web framework? Typically exploited within 14 days of disclosure. Buffer overflow in legacy C code? Attackers sit on those and wait for the right target.

The AI learns these timelines. When a new vulnerability appears, it doesn't simply say "this is bad." It reports "attackers will have active exploits within 11 days, based on 847 similar vulnerabilities, and they will target financial services companies first."

That's not guessing. It's statistical forecasting on big data.
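A stripped-down version of that forecast is just a summary statistic over comparable past cases. The sketch below assumes a hypothetical `HISTORY` table of (vulnerability class, days to first exploit) pairs with invented values; a production system would use far more features than the class label.

```python
from statistics import median

# Hypothetical history: (vulnerability class, days from disclosure to first exploit)
HISTORY = [
    ("sqli-web-framework", 9),
    ("sqli-web-framework", 14),
    ("sqli-web-framework", 11),
    ("sqli-web-framework", 12),
    ("legacy-c-overflow", 120),
    ("legacy-c-overflow", 200),
]

def forecast_days_to_exploit(vuln_class, history=HISTORY):
    """Return (median days, sample size) for past vulnerabilities of this class."""
    days = [d for cls, d in history if cls == vuln_class]
    if not days:
        return None, 0
    return median(days), len(days)

days, n = forecast_days_to_exploit("sqli-web-framework")
print(f"expect exploits within ~{days} days, based on {n} similar vulnerabilities")
```

Reporting the sample size alongside the estimate matters: a forecast built on 847 similar cases deserves more trust than one built on four.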

Behavioral Analytics: What Attackers Actually Do

Here's where it gets interesting. The AI isn't only learning about vulnerabilities; it's learning about attackers.

I connected our system to threat intelligence feeds covering attacks in progress. The AI began matching vulnerability types to the threat actors who favor them. Ransomware groups love RDP vulnerabilities. Nation-state actors target supply chain weaknesses. Script kiddies go after anything with public exploit code.

When a new vulnerability emerges, the AI asks: "Which threat actors would be interested in this? Are they currently active? How long do they usually take to go from discovery to exploitation?"

This integration with AI Threat Detection Explained helps build a complete picture. You're no longer just detecting weak points; you're predicting the whole attack lifecycle.

Code Property Graphs and Deep Learning

The technical side gets wild. Modern systems use Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks, the same technologies that power image recognition and language translation.

They translate your code into what's called a Code Property Graph. It's like a map showing how all the functions relate to each other, how data flows, and which parts are exposed to external input. The neural network examines this graph, looking for patterns that match known vulnerability types.
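To give a feel for what such a graph makes possible, here is a toy sketch. A real Code Property Graph combines syntax, control flow, and data dependencies; this example keeps only hand-written data-flow edges with made-up node names and uses a plain breadth-first search rather than a neural network to find paths from external input to a dangerous operation.

```python
from collections import deque

# Toy data-flow edges: a value flows from each key to the nodes it lists.
# All node names are invented for illustration.
DATA_FLOW = {
    "http_request.param": ["parse_filters"],
    "parse_filters": ["build_query"],
    "build_query": ["db.execute"],
    "config.load": ["render_template"],
}
SOURCES = {"http_request.param"}  # externally controlled inputs
SINKS = {"db.execute"}            # dangerous operations

def tainted_paths(graph, sources, sinks):
    """Breadth-first search for data-flow paths from any source to any sink."""
    paths = []
    for src in sources:
        queue = deque([[src]])
        while queue:
            path = queue.popleft()
            node = path[-1]
            if node in sinks:
                paths.append(path)
                continue
            for nxt in graph.get(node, []):
                if nxt not in path:  # skip nodes already on this path (cycles)
                    queue.append(path + [nxt])
    return paths

for path in tainted_paths(DATA_FLOW, SOURCES, SINKS):
    print(" -> ".join(path))
```

The single path it reports, from a request parameter into a query execution, is the shape of a classic SQL injection. Learned models look for the same kind of structure, only across many more edge types and with fuzzier matching.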

I tried VulnHuntr, an open source tool that uses large language models for zero-shot detection. I gave it an application I had written in Python. It detected three SQL injection flaws and an authentication bypass, all valid problems I had introduced accidentally. No signatures, no prior training on my code. Just semantic analysis and pattern recognition.

Monitoring Emerging Threats Before They Hit

The real advantage isn't knowing how vulnerable you are today. It's predicting tomorrow's attacks.

Threat Campaign Analysis in Real-Time

AI systems monitor dark web forums, GitHub repositories, security mailing lists, and exploit databases around the clock. They look for early signals: leaked proof-of-concept code, unusual chatter about specific software versions, researchers preparing to publish vulnerability details.

I set up alerts for our technology stack: Django, PostgreSQL, React, and AWS services. Two months ago, the AI raised an alarm about increased chatter around a Django middleware vulnerability. Public disclosure was still three weeks away, but security researchers were already probing it.

We patched before the CVE even existed. By the time of the actual disclosure, we were covered. Our competitors? Many of them were compromised within the first 48 hours.

This early warning capability connects directly to AI-Powered Incident Response, forming a feedback loop in which detection informs defense and defense informs detection.

Configuration Anomaly Detection

Here's what traditional scanners completely miss: configurations that are technically correct yet operationally unsafe.

The AI learns what normal configurations look like from thousands of deployments. Then it spots outliers. I had a Redis instance configured exactly as shown in the documentation, but it was exposed to the open internet with default authentication. Technically, not a vulnerability. In practice, a disaster waiting to happen.

The AI flagged it immediately: "This configuration appears in 0.3% of deployments, and 94% of those are attacked within 60 days."

That isn't ordinary scanning. It's Predictive Vulnerability Analysis: risk prediction based on real-world outcomes.
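The two numbers in that alert, how rare a configuration is and how often it gets attacked, are easy to compute once you have fleet-wide data. The sketch below assumes a hypothetical list of (configuration fingerprint, was attacked) records with invented counts; it is not how any particular product stores or derives them.

```python
def config_risk(deployments, fingerprint):
    """Return (prevalence, attack_rate) for one configuration fingerprint.

    deployments: list of (fingerprint, was_attacked) tuples.
    """
    matching = [attacked for fp, attacked in deployments if fp == fingerprint]
    if not matching:
        return 0.0, 0.0
    prevalence = len(matching) / len(deployments)
    attack_rate = sum(matching) / len(matching)
    return prevalence, attack_rate

# Hypothetical fleet: 1,000 deployments, 4 with a publicly exposed default-auth Redis.
fleet = [("redis:auth+private", False)] * 996
fleet += [("redis:default-auth+public", True)] * 3
fleet += [("redis:default-auth+public", False)]

prevalence, attack_rate = config_risk(fleet, "redis:default-auth+public")
print(f"seen in {prevalence:.1%} of deployments, {attack_rate:.0%} of those attacked")
```

A configuration that is both rare and frequently attacked is worth flagging even when no CVE applies to it.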

Supply Chain and Dependency Risk Analysis

You know what's scarier than vulnerabilities in your own code? The 400 third-party libraries you depend on.

Ecosystem Vulnerability Mapping

Modern applications are little more than dependency trees. A library you import pulls in five other libraries, which in turn import 15 more. A single weak point anywhere in that tree can be used to attack you.

I used OWASP Dependency-Check combined with an AI layer. Traditional dependency scanning identified 89 known CVEs in our dependency tree. Overwhelming, right? Which ones matter?

The AI evaluated the whole ecosystem:

- Which vulnerabilities have working exploits?

- Are those libraries actually used in our code?

- Do we call the vulnerable functions, or only other parts of the library?

- What is the blast radius if this is exploited?

Only 12 of the 89 CVEs turned out to be exploitable in our configuration. We fixed those 12 first. The other 77? We scheduled them for the next maintenance cycle.
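The questions in that list boil down to a filter. Here is a minimal sketch, assuming a hypothetical `DependencyCVE` record and a pre-computed set of (package, function) pairs that our code actually calls; package and function names are invented, and a real pipeline would derive the call set from static analysis rather than hard-coding it.

```python
from dataclasses import dataclass

@dataclass
class DependencyCVE:
    cve_id: str
    package: str
    vulnerable_function: str
    exploit_available: bool

def exploitable_in_context(cves, called_functions):
    """Keep CVEs that have a known exploit AND whose vulnerable function we call.

    called_functions: set of (package, function) pairs found in our code.
    """
    return [
        c for c in cves
        if c.exploit_available
        and (c.package, c.vulnerable_function) in called_functions
    ]

# Hypothetical inputs for illustration only.
cves = [
    DependencyCVE("CVE-1", "imagelib", "load_tiff", True),
    DependencyCVE("CVE-2", "imagelib", "load_bmp", True),
    DependencyCVE("CVE-3", "yamlparse", "unsafe_load", False),
]
called = {("imagelib", "load_tiff"), ("yamlparse", "unsafe_load")}

fix_now = exploitable_in_context(cves, called)
print([c.cve_id for c in fix_now])
```

Everything filtered out still needs patching eventually; the filter only decides what goes first.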

Supply Chain Attack Prediction

The AI goes a step further and tracks the health of the open-source projects themselves. Declining maintainer activity? No commits for months? Those are major predictors of supply chain attacks.

I watched our system flag a tiny npm package we used. Nothing was wrong with the package itself, but the AI noticed that the maintainer had stopped responding to issues and that two similar packages had previously been breached through maintainer account takeovers.

We migrated to a different library. Two months later, the original package was infected with cryptocurrency-mining malware. The AI predicted the attack before it happened.
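The health signals described above can be approximated with a few simple checks. This is a rough sketch with assumed thresholds (180 idle days, a 20% issue response ratio) and invented inputs; a real system would pull these figures from the package registry and repository host and weigh many more signals.

```python
from datetime import date

def project_health_flags(last_commit, open_issues, issues_answered, today,
                         max_idle_days=180, min_response_ratio=0.2):
    """Return a list of warning flags for an open-source dependency."""
    flags = []
    if (today - last_commit).days > max_idle_days:
        flags.append("no recent commits")
    if open_issues and issues_answered / open_issues < min_response_ratio:
        flags.append("maintainer unresponsive")
    return flags

# Hypothetical package: last commit in January, 2 of 40 open issues answered.
flags = project_health_flags(
    last_commit=date(2024, 1, 10),
    open_issues=40,
    issues_answered=2,
    today=date(2024, 11, 1),
)
print(flags)
```

Neither flag proves a compromise is coming. They mark packages where an account takeover would go unnoticed longest, which is a good reason to look for an alternative.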

Temporal Analysis: The “When” Question

Identifying vulnerabilities is table stakes. Knowing when they will be weaponized? That's the competitive advantage.

Exploitation Timeline Prediction

The AI tracks time-to-exploit patterns. Web application vulnerabilities? On average, 8-14 days between disclosure and active exploitation. Critical infrastructure vulnerabilities? Usually longer than 90 days, because they require specialized knowledge and access.

When our system discovered a vulnerability in our API gateway, it didn't merely say "severity: high." It said "anticipated exploitation window: 6-9 days, probability: 87%," and noted that vulnerabilities of the same type had seen PoC code published as early as day 4.

We had a precise timeline: patch by day 4 or assume we were exposed. We patched on day 2. PoC code appeared on day 5, as expected.

Attack Surface Evolution Tracking

Your infrastructure changes constantly. New containers spin up, APIs get pushed out, configurations change. The AI monitors your attack surface as it evolves and shows where new vulnerabilities are likely to appear.

I deployed a microservice on Friday afternoon (I know, I know). By Monday morning, the AI had its verdict on the new service: three unprotected endpoints not covered by our current WAF policy, an authentication implementation that didn't match organizational standards, and a configuration that had led to breaches in 23% of similar cases.

Traditional scanners would have taken days to identify and categorize those problems. The AI caught them within hours by comparing the service against our normal baseline and flagging the deviations immediately.

Integration: Making Prediction Actionable

Predicting vulnerabilities is pointless if you can't act on that information quickly.

Automated Patch Management Workflows

The systems I've tested don't just identify issues; they kick off remediation. When a critical vulnerability appears, the AI:

- Queries the asset inventory to identify the affected systems.

- Checks patch availability and compatibility.

- Generates risk-prioritized deployment plans.

- Creates tickets in your tracking system.

- Tracks the success rate of patch deployments.

I integrated my AI scanner with Azure DevOps and GitHub. High-risk vulnerabilities trigger automated pull requests containing patch code. Medium-risk issues create backlog items with context and remediation steps. Low-risk findings are batched into a monthly review.

The result? Our average remediation time dropped from 18 days to 47 hours. Not because we worked faster, but because we eliminated 90% of the manual coordination overhead.
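The three-tier routing described above is simple to express in code. The sketch below is illustrative only: the 0-10 score scale, the thresholds, and the action names are assumptions, and the calls to Azure DevOps or GitHub that would actually open pull requests or backlog items are deliberately left out.

```python
def route_finding(risk_score):
    """Map a 0-10 risk score to a remediation action (thresholds are assumptions)."""
    if risk_score >= 8.0:
        return "open_pull_request"      # high risk: automated patch PR
    if risk_score >= 4.0:
        return "create_backlog_item"    # medium risk: ticket with context
    return "add_to_monthly_review"      # low risk: batched review

# Hypothetical findings and scores.
findings = {"finding-a": 9.1, "finding-b": 5.5, "finding-c": 1.2}
actions = {name: route_finding(score) for name, score in findings.items()}
print(actions)
```

Keeping the routing rule this explicit makes it easy to audit and adjust when the team disagrees with where a finding landed.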

Asset Inventory Intelligence

Implementing AI vulnerability scanning successfully requires cooperation between security, development, and operations. Security teams understand the threats, developers know the codebase, and ops manages the infrastructure.

I ran weekly triage meetings in which all three groups reviewed the AI's findings. Security explained why something was a concern, developers clarified whether it was actually exploitable in our platform, and ops scheduled remediation so it wouldn't disrupt production.

This feedback loop improved the AI's precision over time. False positives fell 60% within three months as the system learned the quirks of our particular environment.

Training and Skill Development

The team needs training not only in cybersecurity fundamentals but also in AI and how it complements those core skills. My team completed the Google Cybersecurity Professional Certificate (free to audit on Coursera) and IBM's Cybersecurity Analyst course, which covers AI-based threat detection specifically.

We also started with free tools: OpenVAS as a standard scanner and ZeroThreat for AI-driven detection. That hands-on experience gave us confidence before investing in enterprise platforms.

The Reality Check: Limitations You Need to Know

I won't pretend AI vulnerability scanning is flawless. It isn't, and knowing the limitations prevents unpleasant surprises.

False Positive vs False Negative Tradeoff

You can tune AI systems to be aggressive (flag every possible problem and raise more false alarms) or conservative (raise fewer false alarms but miss some problems). There's no perfect balance.

I started aggressive. My team was overwhelmed with alerts and developed alert fatigue. Then I tuned too conservatively, and we nearly missed a valid vulnerability that could have been exploited.

Getting the sensitivity right requires constant recalibration based on your environment and risk tolerance. It isn't a one-time configuration; it's an ongoing process.
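The tradeoff is easiest to see by counting errors at different alert thresholds. The sketch below uses a small, invented set of (model score, was it a real vulnerability) pairs standing in for past triage results; the thresholds are arbitrary examples.

```python
def confusion_at(threshold, scored):
    """Count false positives and false negatives at a given alert threshold.

    scored: list of (model_score, is_real_vulnerability) pairs.
    """
    false_pos = sum(1 for s, real in scored if s >= threshold and not real)
    false_neg = sum(1 for s, real in scored if s < threshold and real)
    return false_pos, false_neg

# Hypothetical labelled findings from past triage.
scored = [(0.95, True), (0.80, True), (0.65, False), (0.55, True),
          (0.40, False), (0.30, False), (0.20, True), (0.10, False)]

for t in (0.25, 0.50, 0.75):
    fp, fn = confusion_at(t, scored)
    print(f"threshold={t:.2f}  false positives={fp}  false negatives={fn}")
```

Lowering the threshold buys fewer missed vulnerabilities at the cost of more noise, and raising it does the opposite. Rerunning a table like this on fresh triage data is one practical way to do the recalibration.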

Model Drift and Concept Drift

AI models trained on historical data lose effectiveness as attack methods evolve. What worked six months ago may not work today.

I learned this the hard way. Our model was trained on a 2023 vulnerability dataset. At the beginning of 2024, attackers changed strategy: they began chaining several low-severity vulnerabilities together for greater impact. Our AI didn't detect these chained attacks because it had never been trained on them.

The solution? Continuous retraining on new data. We now retrain once a month and test model performance against recent real-world attacks.
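One simple way to notice drift between retraining runs is to compare recall on recent confirmed incidents against the recall measured at training time. This is a minimal sketch under assumed numbers: the 0.90 baseline, the 0.10 tolerated drop, and the evaluation records are all invented.

```python
def recall(predictions):
    """Fraction of real attacks the model flagged.

    predictions: list of (flagged, was_attack) pairs.
    """
    attacks = [flagged for flagged, was_attack in predictions if was_attack]
    return sum(attacks) / len(attacks) if attacks else 1.0

def needs_retraining(baseline_recall, recent_predictions, max_drop=0.10):
    """Signal retraining when recall on recent data falls well below the baseline."""
    return baseline_recall - recall(recent_predictions) > max_drop

# Hypothetical evaluation on last month's confirmed incidents.
recent = [(True, True), (False, True), (False, True), (True, True), (True, False)]
print(recall(recent))                  # 2 of 4 real attacks were flagged
print(needs_retraining(0.90, recent))
```

A check like this would have caught our chained-vulnerability blind spot sooner, because the missed attacks show up directly as a drop in recall.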

The Explainability Problem

Sometimes the AI flags something and nobody has the slightest idea why. Deep learning models are black boxes; they make decisions based on patterns that humans can't easily explain.

An AI system flagged one of my configurations as high-risk, yet it couldn't explain why.

My team wanted to understand before acting. We ended up using LIME (Local Interpretable Model-agnostic Explanations) to decode the AI's reasoning.

It turned out the AI was reacting to the combination of our specific software version, our network architecture, and a pattern seen in prior breaches. A legitimate finding, but one that took hours to unravel.

For critical decisions, explainability matters. Choose systems that can justify their findings rather than just make predictions.
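To show the general idea behind perturbation-based explanations, here is a deliberately simplified sketch. It is not LIME, which fits a local surrogate model over many random perturbations; instead it resets one feature at a time to a neutral value and records how much the score drops. The `risk_model` function, feature names, and values are all made up to stand in for a black box.

```python
def risk_model(features):
    """Stand-in black box: returns a made-up risk score for a configuration."""
    score = 0.1
    if features["software_version"] == "2.3.1":
        score += 0.3
    if features["network_exposure"] == "flat":
        score += 0.2
    if (features["software_version"] == "2.3.1"
            and features["network_exposure"] == "flat"):
        score += 0.3  # interaction effect, as if learned from past breaches
    return score

def attribute(model, features, baseline):
    """Score change when each feature is reset to its baseline, one at a time."""
    full = model(features)
    contributions = {}
    for name in features:
        perturbed = dict(features, **{name: baseline[name]})
        contributions[name] = round(full - model(perturbed), 3)
    return contributions

flagged = {"software_version": "2.3.1", "network_exposure": "flat", "region": "eu"}
neutral = {"software_version": "other", "network_exposure": "segmented",
           "region": "other"}
print(attribute(risk_model, flagged, neutral))
```

Even this crude approach surfaces which inputs drive the score and which are irrelevant, which is the kind of answer my team needed before acting on the alert.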

Data Privacy and Compliance Challenges

Vulnerability scanning involves examining code, configurations, and in some cases data flows. If you process sensitive data (PII, health data, financial data, and so on), AI scanning introduces privacy concerns.

We had to design our scanning infrastructure carefully. Sensitive environments were scanned on-premises with local AI models rather than cloud-based services. Scan results were encrypted both at rest and in transit. Access was strictly controlled and audited.

Vulnerability scanning data falls under GDPR, HIPAA, and other regulations. Don't assume your AI vendor is taking care of compliance. Verify explicitly.

What This Actually Means for You

After testing and implementing AI vulnerability scanning for several months, here's my opinion: AI vulnerability scanning is no longer optional. The threat landscape is evolving too quickly to be handled manually.

If you're a beginner, start with free tools: OpenVAS to learn the basics and ZeroThreat to experiment with the latest AI capabilities. Learn how these tools work before investing in enterprise platforms. Use free training (the Google Cybersecurity Certificate and OWASP resources) to build foundational knowledge.

If your organization already has a security program, layer AI on top of your current tools. Don't rip out your old scanners; augment them. Keep your current detection coverage and add AI for prioritization and prediction.

The biggest lesson I learned? AI doesn't replace security specialists. It amplifies them. My team went from drowning in 1,200 vulnerabilities to focusing on the 43 that truly mattered. We moved from reactive patching to proactive defense. And we're intercepting threats before they become incidents.

Is it perfect? No. Is it better than what came before? Absolutely.

The future of vulnerability management is automated, predictive, and AI-driven. Organizations that figure this out now will have a huge edge over those still methodically scanning for signatures and hoping for the best.

I'm a technology writer with a passion for AI and digital marketing. I create engaging and useful content that bridges the gap between complex technology concepts and everyday readers. My writing aims to make the subject approachable and to spark curiosity and participation, and I continue to research innovation and technology. Let's connect and talk technology!