If you have spent late nights staring at vulnerability dashboards, you know the feeling: 8,000 “critical” alerts, 3 security analysts, and, if you are lucky, only 40 hours a week to deal with them.

My team tried the “patch everything” approach for six months. It didn’t work. We were exhausted, our compliance metrics were awful, and the vulnerabilities that actually mattered? Some of them slipped through anyway.

So we turned to AI-driven prioritization. Not because it sounded cool, but because we were drowning.

Here is what I learned about how AI is changing the way security teams decide what to fix first, and why it is not all hype.


Beyond CVSS: Why Traditional Severity Scoring Fails

The Problem with “Critical” Everything

CVSS has been the industry standard since 2005. It scores vulnerabilities from 0 to 10 based on factors such as how easily they can be exploited and how much damage they can cause. Sounds reasonable, right?

Here’s the thing: CVSS measures theoretical worst-case severity. It doesn’t care that the vulnerable Apache server is sitting on an isolated development network with no internet connectivity and no sensitive data. It just sees “remote code execution” and screams “9.8 CRITICAL!”
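For reference, the CVSS v3.x specification maps base scores onto fixed qualitative bands. A minimal Python sketch of that mapping makes the point concrete: the function only ever sees the number, so the isolated Apache box still comes out “Critical”.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1-3.9
    if score < 7.0:
        return "Medium"    # 4.0-6.9
    if score < 9.0:
        return "High"      # 7.0-8.9
    return "Critical"      # 9.0-10.0


# No network location, data sensitivity, or exploit activity goes in.
print(cvss_severity(9.8))  # Critical
```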

Our first vulnerability report pull showed 3,200 high and critical findings. Our patching SLA required critical issues to be fixed within 48 hours. Do the math: physically impossible.
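To put rough numbers on “physically impossible”, the sketch below assumes, generously and hypothetically, that all 3,200 findings fell under the 48-hour window (in reality the SLA covered the critical subset) and that the three analysts from the intro did nothing else, around the clock.

```python
# Hypothetical upper-bound workload: every finding under the 48-hour SLA,
# three analysts working nonstop with no other duties.
findings = 3_200
analysts = 3
sla_hours = 48

analyst_hours = analysts * sla_hours      # total analyst-hours available
per_hour = findings / analyst_hours       # findings each analyst must close per hour
minutes_each = 60 / per_hour              # minutes available per finding

print(f"{per_hour:.1f} findings per analyst-hour, {minutes_each:.1f} minutes each")
```

Under those assumptions each analyst would need to close a finding roughly every three minutes, with no time for testing or change control.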

What CVSS Actually Tells You

CVSS answers one question: “In the worst case, how bad can this be?”

It doesn’t answer:

- Is anyone actually exploiting this in the wild?

- Does this vulnerability sit on a system that matters to our business?

- Do compensating controls reduce the real risk?

I’m not saying CVSS is useless. It’s a baseline. But prioritizing on CVSS alone is like navigating with a map that treats every road as equal: they are all roads, but one gets you to your destination and another leads to a dead end.

AI Risk Assessment: The Missing Context Layer

Four Dimensions That Change Everything

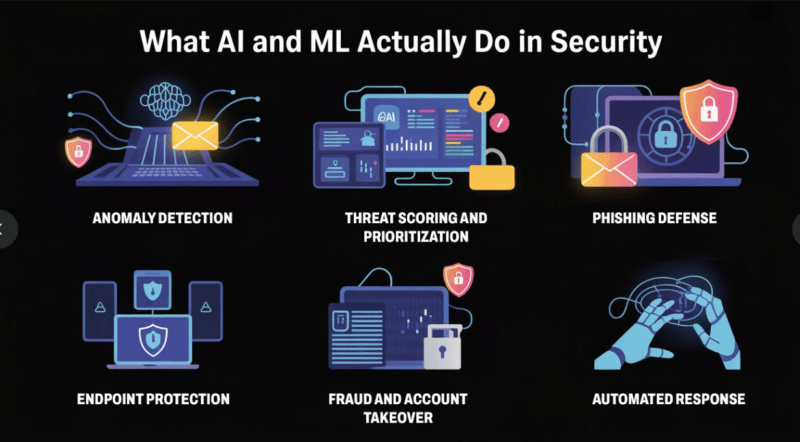

AI-enhanced vulnerability prioritization layers contextual data on top of raw severity scores. Here is what actually moves the needle:

Severity x Exploitability

Instead of asking only “how bad is this?”, AI models trained on real attack data ask: “how likely is this to be exploited soon?” Systems such as EPSS (Exploit Prediction Scoring System) analyze real-world attack trends, exploit kit releases, and even dark web discussions to predict which CVEs attackers will exploit. EPSS expresses this as the probability of exploitation activity in the next 30 days.
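EPSS scores are published by FIRST and can be pulled from its public API. A minimal sketch, assuming the endpoint `https://api.first.org/data/v1/epss` and a JSON response whose `data` array holds `cve` and `epss` fields (with values as strings), as I understand the API at the time of writing; check FIRST’s current documentation before relying on it. The network call is left out so the parsing can be tested offline, and the sample payload is made up.

```python
import json
from urllib.parse import urlencode

EPSS_API = "https://api.first.org/data/v1/epss"  # FIRST's public EPSS endpoint


def epss_url(cves: list[str]) -> str:
    """Build a query URL for one or more CVEs (comma-separated `cve` parameter)."""
    return f"{EPSS_API}?{urlencode({'cve': ','.join(cves)})}"


def parse_epss(payload: str) -> dict[str, float]:
    """Map each CVE ID in an API response body to its EPSS probability."""
    body = json.loads(payload)
    return {row["cve"]: float(row["epss"]) for row in body.get("data", [])}


# Made-up response body in the expected shape; fetch a real one with
# urllib.request.urlopen(epss_url([...])) or any HTTP client.
sample = '{"data": [{"cve": "CVE-0000-0001", "epss": "0.020000000", "date": "2025-01-01"}]}'
print(epss_url(["CVE-2021-44228"]))
print(parse_epss(sample))
```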

I tested this on our own data. One CVE scored 7.5 (High) on CVSS but had only a 2% exploitation probability according to EPSS. Meanwhile, a Medium vulnerability scoring 5.8 had a 68% EPSS score and was actively being used in ransomware campaigns.

Guess which one we patched first?
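The same comparison in a few lines of Python, using the two scores above (the finding names are placeholders, not real CVE IDs): sorting by CVSS and sorting by EPSS produce opposite orders.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str     # placeholder label, not a real CVE ID
    cvss: float   # CVSS base score, 0-10
    epss: float   # EPSS probability of exploitation in the next 30 days, 0-1


findings = [
    Finding("cvss-7.5", cvss=7.5, epss=0.02),
    Finding("cvss-5.8-ransomware", cvss=5.8, epss=0.68),
]

by_cvss = [f.name for f in sorted(findings, key=lambda f: f.cvss, reverse=True)]
by_epss = [f.name for f in sorted(findings, key=lambda f: f.epss, reverse=True)]
print("CVSS order:", by_cvss)
print("EPSS order:", by_epss)
```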

Asset Criticality

Not all servers are equal. AI systems map vulnerabilities to business context:

- Tier 1: Revenue-generating systems, customer-facing applications, and compliance-critical infrastructure.

- Tier 2: Internal tools that handle sensitive data.

- Tier 3: Testing systems and development environments.

- Tier 4: Non-production, sandboxed labs.

A critical vulnerability on a Tier 4 test box? It can wait. The same flaw on your payment processing server? That’s a weekend emergency.
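One way to make tiers machine-usable is to attach a criticality value to each. This sketch assumes hypothetical 0-100 values per tier and a simple multiplication with the CVSS score; real tools use richer models, but it shows how the same flaw lands very differently depending on where it lives.

```python
from enum import IntEnum


class AssetTier(IntEnum):
    TIER_1 = 1  # revenue-generating, customer-facing, compliance-critical
    TIER_2 = 2  # internal tools that handle sensitive data
    TIER_3 = 3  # testing systems and development environments
    TIER_4 = 4  # non-production, sandboxed labs


# Hypothetical criticality values on a 0-100 scale; tune them to your business.
CRITICALITY = {
    AssetTier.TIER_1: 100,
    AssetTier.TIER_2: 70,
    AssetTier.TIER_3: 35,
    AssetTier.TIER_4: 10,
}


def urgency(cvss: float, tier: AssetTier) -> float:
    """Scale a 0-10 CVSS score by the asset's criticality into a 0-100 urgency."""
    return cvss * CRITICALITY[tier] / 10


print(round(urgency(9.8, AssetTier.TIER_1)))  # payment server: 98
print(round(urgency(9.8, AssetTier.TIER_4)))  # lab test box: 10
```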

Threat Intelligence Context

Live feeds give AI models up-to-the-minute context. Is the CVE being discussed on exploit forums? Is it in CISA’s Known Exploited Vulnerabilities (KEV) catalog? Are ransomware groups actively scanning for it?

When Log4Shell was disclosed in December 2021, conventional scoring flagged it as critical. AI-enhanced systems went further: “critical + actively exploited + public proof-of-concept code + present on internet-facing assets = patch immediately, before anything else.”
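The KEV lookup and the Log4Shell-style escalation rule can be sketched together. CISA publishes the KEV catalog as a JSON feed whose `vulnerabilities` entries carry a `cveID` field; the trimmed feed string below is a stand-in containing only that field, and `patch_immediately` is a hypothetical rule that simply requires every signal from the example to line up.

```python
import json


def load_kev_ids(feed_json: str) -> set[str]:
    """Collect CVE IDs from a CISA KEV catalog JSON document."""
    return {v["cveID"] for v in json.loads(feed_json).get("vulnerabilities", [])}


def patch_immediately(cvss: float, cve: str, kev_ids: set[str],
                      public_poc: bool, internet_facing: bool) -> bool:
    """Hypothetical escalation rule: every signal from the Log4Shell example must hold."""
    return (cvss >= 9.0              # critical by CVSS
            and cve in kev_ids       # known to be actively exploited
            and public_poc           # proof-of-concept code is public
            and internet_facing)     # present on an internet-facing asset


# Trimmed stand-in for the real feed (only the field this sketch reads).
feed = '{"vulnerabilities": [{"cveID": "CVE-2021-44228"}]}'
kev = load_kev_ids(feed)
print(patch_immediately(10.0, "CVE-2021-44228", kev, public_poc=True, internet_facing=True))  # True
```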

Exposure Assessment

A vulnerability on an internet-facing web server is fundamentally different from the same vulnerability on an air-gapped system. AI models factor in:

- Firewall policies and network isolation

- Whether services are reachable from the internet

- Authentication requirements

- Classification of the affected systems

This is where context really matters. I have watched companies spend days patching internal-only systems while leaving internet-exposed endpoints with the same vulnerability untouched.
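A hypothetical exposure multiplier shows how the factors above might preserve or discount a base risk score for the same vulnerability. The multiplier values are illustrative assumptions, not calibrated numbers from any product.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    internet_reachable: bool   # service is reachable from the internet
    network_isolated: bool     # firewall policy / segmentation limits access
    requires_auth: bool        # attacker needs valid credentials first
    sensitive_data: bool       # system is classified as holding sensitive data


def exposure_multiplier(e: Exposure) -> float:
    """Hypothetical factor in (0, 1] that scales a base risk score by exposure."""
    m = 1.0 if e.internet_reachable else 0.4
    if e.network_isolated:
        m *= 0.5
    if e.requires_auth:
        m *= 0.7
    if not e.sensitive_data:
        m *= 0.8
    return m


public_endpoint = Exposure(internet_reachable=True, network_isolated=False,
                           requires_auth=False, sensitive_data=True)
internal_only = Exposure(internet_reachable=False, network_isolated=True,
                         requires_auth=True, sensitive_data=False)
print(exposure_multiplier(public_endpoint), exposure_multiplier(internal_only))
```

Multiplying rather than adding keeps a fully exposed system at full weight while each mitigating factor compounds the discount.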

How Machine Learning Predicts What’s Next

This is where it gets interesting. ML models don’t just react; they predict.

By analyzing historical exploitation data, threat actor behavior, and vulnerability characteristics, AI can identify which kinds of flaws are likely to be targeted before exploits even exist. It is pattern recognition at scale.

For example, models observe that buffer overflow vulnerabilities in certain types of network drivers are weaponized within 30 days of disclosure in 80 percent of cases. When a new CVE matches that pattern, the system flags it immediately, before anyone has actively exploited it.
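Production models are machine-learned, but the core intuition can be shown with a deliberately simple frequency count standing in for them: group historical CVEs by pattern, measure how often each pattern was weaponized within 30 days, and flag new CVEs whose pattern crosses a threshold. The history and the 0.5 threshold are hypothetical.

```python
from collections import defaultdict

# Hypothetical history: (vulnerability class, component type, weaponized within 30 days?)
history = [
    ("buffer-overflow", "network-driver", True),
    ("buffer-overflow", "network-driver", True),
    ("buffer-overflow", "network-driver", True),
    ("buffer-overflow", "network-driver", True),
    ("buffer-overflow", "network-driver", False),
    ("xss", "admin-ui", True),
    ("xss", "admin-ui", False),
    ("xss", "admin-ui", False),
]


def weaponization_rates(records):
    """Fraction of past CVEs per (class, component) pattern weaponized within 30 days."""
    hits, totals = defaultdict(int), defaultdict(int)
    for vuln_class, component, weaponized in records:
        totals[(vuln_class, component)] += 1
        hits[(vuln_class, component)] += int(weaponized)
    return {pattern: hits[pattern] / totals[pattern] for pattern in totals}


def flag_new_cve(vuln_class, component, rates, threshold=0.5):
    """Flag a freshly disclosed CVE whose pattern has historically been weaponized."""
    return rates.get((vuln_class, component), 0.0) >= threshold


rates = weaponization_rates(history)
print(rates[("buffer-overflow", "network-driver")])              # 0.8
print(flag_new_cve("buffer-overflow", "network-driver", rates))  # True
```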

We started using this predictive capability six months ago. Our team now focuses on roughly 400 vulnerabilities instead of 3,200. And here is the kicker: we have reduced our attack surface more effectively, because we are fixing the right things.

Real-Time Threat Intelligence: Dynamic Risk Scoring

Why Static Scores Don’t Work Anymore

I used to run vulnerability scans weekly and prioritize based on that snapshot. By Thursday, the picture had already changed: a new exploit kit drops, a nation-state actor shifts tactics, a vulnerability is added to the CISA KEV list, and Monday’s priorities are already obsolete.

AI systems ingest threat feeds continuously:

- Dark web monitoring for exploit code availability

- Ransomware tracker databases

- Vendor threat intelligence

- Attack pattern analysis from security vendors

- Researcher communities and social media

A vulnerability that is medium risk on Monday can become “patch now” by Wednesday if threat intel shows active scanning campaigns targeting it.
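Dynamic scoring boils down to recomputing a finding’s score whenever new intel arrives, instead of once per scan. A minimal sketch, assuming hypothetical boost values per intel event and hypothetical band cut-offs; the CVE ID is a placeholder.

```python
from dataclasses import dataclass, field

# Hypothetical score boosts (0-100 scale) for intel events arriving between scans.
INTEL_BOOST = {"added_to_kev": 40, "exploit_kit_release": 25, "active_scanning": 30}


@dataclass
class TrackedVuln:
    cve: str
    base_score: float                                # from the weekly scan, 0-100
    events: list[str] = field(default_factory=list)  # intel events seen so far

    def score(self) -> float:
        """Base score plus every intel boost seen so far, capped at 100."""
        boost = sum(INTEL_BOOST.get(event, 0) for event in self.events)
        return min(100.0, self.base_score + boost)


def band(score: float) -> str:
    """Hypothetical cut-offs from score to action."""
    if score >= 80:
        return "patch now"
    if score >= 60:
        return "high"
    if score >= 35:
        return "medium"
    return "low"


vuln = TrackedVuln("CVE-0000-0002", base_score=50)  # placeholder CVE ID
print(band(vuln.score()))   # Monday: medium
vuln.events.append("active_scanning")
print(band(vuln.score()))   # Wednesday: patch now
```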

The Dark Web Intelligence Layer

This part surprised me. To gauge attacker interest and exploit availability, AI systems scan underground forums, exploit marketplaces, and hacker communities.

If a CVE has:

- Working exploit payloads being sold or distributed

- Active discussion on breach forums

- Mentions in ransomware-as-a-service documentation

- Signs of targeted reconnaissance

…its risk score rises automatically, whatever CVSS says.
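One way to implement “whatever CVSS says” is to let each dark web signal set a floor under the score rather than just nudging it upward. The signal names and floor values are hypothetical; the example models a CVSS 6.5 finding with exploit code on sale and active forum discussion.

```python
# Hypothetical floor scores (0-100) for the dark web signals listed above.
SIGNAL_FLOOR = {
    "payload_for_sale": 85,
    "breach_forum_discussion": 60,
    "raas_documentation": 90,
    "targeted_recon": 75,
}


def darkweb_adjusted(cvss: float, signals: set[str]) -> float:
    """Raise a CVSS-derived 0-100 score to the highest floor any observed signal sets."""
    base = cvss * 10
    floors = [SIGNAL_FLOOR[s] for s in signals if s in SIGNAL_FLOOR]
    return max([base, *floors])


print(darkweb_adjusted(6.5, set()))                                            # 65.0
print(darkweb_adjusted(6.5, {"payload_for_sale", "breach_forum_discussion"}))  # 85
```

A floor, unlike an additive bump, guarantees that strong attacker signals surface the finding even when its CVSS score is modest.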

I saw this happen with a vulnerability in a file transfer application. CVSS rated it 6.5. Dark web intelligence showed exploit code selling for $2,500, with ransomware groups negotiating over it. Our AI system moved it to top priority. Three days later, we saw scanning attempts aimed at that exact vulnerability.

Business Impact Analysis: Aligning Security with Reality

The Board Doesn’t Care About CVEs

Here’s a lesson I learned the hard way: executives don’t care that you fixed 1,000 severe vulnerabilities. They care about business risk.

AI-based cyber risk quantification translates each technical finding into dollars:

- Potential revenue loss during downtime

- Financial penalties if customer data is breached

- Brand damage and customer churn after an attack

- Incident response and recovery costs

When I presented our new prioritization strategy, I didn’t talk about CVSS scores. I talked about cutting our expected financial loss from a ransomware attack by one-third, to $400K, by fixing the vulnerabilities that could lead to domain controller compromise.

Framed as a business story, it got approved.
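Quantification models vary by tool; one common, simple framing is annualized loss expectancy, ALE = SLE × ARO (single loss expectancy times annual rate of occurrence). The inputs below are hypothetical, chosen only to show how a one-third cut in expected loss might be expressed.

```python
def annualized_loss_expectancy(single_loss: float, annual_rate: float) -> float:
    """ALE = SLE x ARO: expected yearly loss from one threat scenario."""
    return single_loss * annual_rate


# Hypothetical inputs: one ransomware incident via domain controller compromise
# costs $2M; remediation lowers its expected frequency from 0.3 to 0.2 per year.
before = annualized_loss_expectancy(2_000_000, 0.3)
after = annualized_loss_expectancy(2_000_000, 0.2)
print(f"${before:,.0f} -> ${after:,.0f} ({1 - after / before:.0%} lower)")
```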

Risk Tolerance and SLA Alignment

Different organizations have different risk appetites. AI systems let you tune priorities to match your tolerance:

- Conservative (financial services, healthcare): Patch anything with a greater than 5% probability of exploitation within 72 hours.

- Balanced (tech companies): Focus on internet-facing and high-value assets.

- Aggressive (startups): Accept more risk on non-critical systems to maintain speed.

We defined our SLAs by risk level:

- Critical risk: 48 hours (systems whose compromise could cripple the business)

- High risk: 7 days (important systems with compensating controls)

- Medium risk: 30 days (folded into monthly maintenance)

- Low risk: quarterly review (isolated systems, minimal impact)

This approach is defensible to auditors and actually achievable for the security team.

Prioritization Methodology: Comparing Across Dimensions

Building a Composite Risk Score

AI systems build weighted scoring models that combine several factors. Here is a simplified version of what we use:

Risk Score = (CVSS × 0.25) + (EPSS × 0.30) + (Asset Criticality × 0.25) + (Threat Intel × 0.20)

Each factor is normalized to 0-100, then weighted according to what matters most to our organization. A heavily regulated company might weight asset criticality more; an organization that is frequently targeted might raise the threat intel weight.
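The formula translates directly into code. This sketch assumes CVSS arrives on its native 0-10 scale, EPSS as a 0-1 probability, and asset criticality and threat intel already scored 0-100 (how those two are scored is tool-specific). The example reuses the two findings from the EPSS comparison, with hypothetical asset and threat intel values.

```python
WEIGHTS = {"cvss": 0.25, "epss": 0.30, "asset": 0.25, "threat_intel": 0.20}


def normalize(value: float, lo: float, hi: float) -> float:
    """Linearly rescale value from [lo, hi] to 0-100, clamping out-of-range input."""
    return max(0.0, min(100.0, (value - lo) / (hi - lo) * 100))


def risk_score(cvss, epss, asset_criticality, threat_intel, weights=WEIGHTS):
    """Weighted sum of the four factors after putting each on a 0-100 scale."""
    factors = {
        "cvss": normalize(cvss, 0, 10),    # CVSS base score, 0-10
        "epss": normalize(epss, 0, 1),     # EPSS probability, 0-1
        "asset": asset_criticality,        # assumed already 0-100
        "threat_intel": threat_intel,      # assumed already 0-100
    }
    return sum(weights[name] * factors[name] for name in weights)


# Same asset criticality; hypothetical threat intel values.
high_cvss = risk_score(cvss=7.5, epss=0.02, asset_criticality=100, threat_intel=0)
ransomware = risk_score(cvss=5.8, epss=0.68, asset_criticality=100, threat_intel=80)
print(round(high_cvss, 2), round(ransomware, 2))
```

Because the weights sum to 1, the composite stays on the same 0-100 scale as its inputs, which keeps the SLA thresholds easy to reason about.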

The real strength of AI here is continuous recalibration. Each month we compare our prioritization rankings against what was actually exploited in the wild, and every quarter we adjust the weights accordingly.
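How the weights get re-fit isn’t specified here. One simple, transparent approach (an assumption, not necessarily what any vendor does) is a brute-force grid search over weight combinations that sum to 1, keeping the set that puts the most actually-exploited findings into the top k. The findings and exploitation outcomes are hypothetical.

```python
from itertools import product

FACTORS = ("cvss", "epss", "asset", "threat_intel")


def score(finding: dict, weights: dict) -> float:
    return sum(weights[name] * finding[name] for name in FACTORS)


def top_k_hits(findings, weights, exploited, k):
    """Number of the k highest-scored findings that were actually exploited."""
    ranked = sorted(findings, key=lambda f: score(f, weights), reverse=True)
    return sum(f["cve"] in exploited for f in ranked[:k])


def recalibrate(findings, exploited, k, step=0.05):
    """Grid-search weights that sum to 1 and maximize exploited findings in the top k."""
    ticks = round(1 / step)
    best, best_hits = None, -1
    for a, b, c in product(range(ticks + 1), repeat=3):
        d = ticks - a - b - c
        if d < 0:
            continue  # weights must sum to exactly 1
        weights = dict(zip(FACTORS, (a * step, b * step, c * step, d * step)))
        hits = top_k_hits(findings, weights, exploited, k)
        if hits > best_hits:  # keep the first weight set reaching the best hit count
            best, best_hits = weights, hits
    return best, best_hits


# Hypothetical quarter: factors already normalized to 0-100.
findings = [
    {"cve": "CVE-A", "cvss": 98, "epss": 3, "asset": 100, "threat_intel": 60},
    {"cve": "CVE-B", "cvss": 58, "epss": 70, "asset": 40, "threat_intel": 20},
]
exploited = {"CVE-B"}
current = {"cvss": 0.25, "epss": 0.30, "asset": 0.25, "threat_intel": 0.20}
print(top_k_hits(findings, current, exploited, k=1))  # 0
new_weights, hits = recalibrate(findings, exploited, k=1)
print(new_weights, hits)
```

With real data you would want many findings, a holdout period, and guardrails so a single quarter can’t swing the weights wildly.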

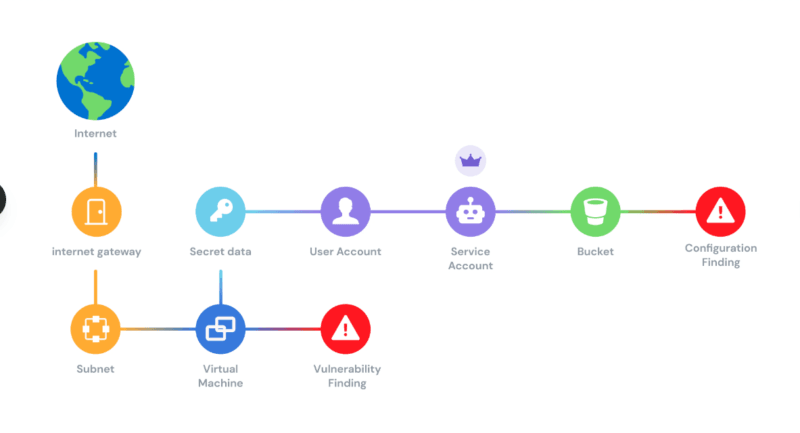

Attack Surface Exposure: The Reality Check

I touched on this earlier, but it deserves a closer look. AI systems map your attack surface by combining:

- Network scan data (what is actually reachable)

- Cloud configuration analysis

- Application dependency graphs

- User access patterns

One of our microservices had a so-called critical vulnerability, but the service wasn’t reachable from any external network: it only talked to two internal services over encrypted tunnels. The AI analysis showed that any realistic attack path would first have to compromise three other systems.

We moved it from “patch this week” to “include in next sprint.”

Meanwhile, a medium vulnerability in our authentication service, which handles every customer login? That went straight to the top of the list, because the service was exposed directly to the internet with no Web Application Firewall in front of it.
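Reachability analysis like this is, at its core, a shortest-path search over a network graph. A breadth-first search sketch, assuming a hypothetical graph of which systems can reach which (all service names are placeholders), counts how many intermediate systems an attacker would have to compromise before reaching a target.

```python
from collections import deque

# Hypothetical reachability graph: which systems can talk to which.
network = {
    "internet": ["web-frontend", "auth-service"],
    "web-frontend": ["api-gateway"],
    "api-gateway": ["orders-service", "billing-service"],
    "orders-service": ["internal-microservice"],
    "billing-service": ["internal-microservice"],
    "auth-service": [],
}


def systems_to_compromise(graph, target, entry="internet"):
    """Fewest intermediate systems on any path from entry to target (None if unreachable)."""
    if target == entry:
        return 0
    queue = deque([(entry, 0)])  # (node, systems compromised to stand on it)
    seen = {entry}
    while queue:
        node, compromised = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor == target:
                return compromised
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, compromised + 1))
    return None


print(systems_to_compromise(network, "internal-microservice"))  # 3
print(systems_to_compromise(network, "auth-service"))           # 0
```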

Patch Management Optimization

Beyond “Patch Everything”

Look, I tried “patch everything critical.” We burned out our team, broke production twice with untested patches, and still left systems exploitable because our priorities were wrong.

AI-based patch management sequences patches intelligently by taking into account:

- Combined risk scores

- Patch availability and stability

- System dependencies and compatibility issues

- Optimal maintenance windows

- Resource availability

The system would tell us: “These are your 12 highest-risk items. Here is the recommended patch order to minimize conflicts. This one requires a reboot, so schedule it with these three during your Saturday maintenance window.”
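Patch sequencing can be sketched as a topological sort with a risk tie-breaker. This version uses Python’s standard `graphlib.TopologicalSorter` (3.9+): among patches whose prerequisites are done, the highest-risk one goes first, and reboot-requiring patches are pulled into a maintenance-window batch. Patch names, dependencies, and risk scores are hypothetical, and the sketch assumes no live patch depends on a reboot-requiring one.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical patches: each key lists the patches that must be applied before it.
depends_on = {
    "openssl-update": set(),
    "nginx-update": {"openssl-update"},
    "app-runtime-update": {"openssl-update"},
    "kernel-update": set(),
}
risk = {"openssl-update": 92, "nginx-update": 88, "app-runtime-update": 60, "kernel-update": 95}
needs_reboot = {"kernel-update"}


def patch_plan(depends_on, risk, needs_reboot):
    """Dependency-respecting order, highest risk first among patches that are ready.

    Reboot-requiring patches are split into a maintenance-window batch; this
    assumes no non-reboot patch depends on one of them.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    order = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(), key=lambda p: risk[p], reverse=True)
        order.extend(ready)
        sorter.done(*ready)
    live = [p for p in order if p not in needs_reboot]
    window = [p for p in order if p in needs_reboot]
    return live, window


live, window = patch_plan(depends_on, risk, needs_reboot)
print("Patch now, in order:", live)
print("Saturday maintenance window:", window)
```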

Resource Allocation That Actually Makes Sense

We have three security engineers and two DevOps engineers handling patching. AI helped us decide how to allocate them:

- Week 1: All hands on the top 50 highest-risk findings.

- Week 2: DevOps handles routine patching of Tier 3/4 systems.

- Week 3: The security team works on complex remediation that requires code changes.

- Week 4: Documentation, testing, and review.

Before AI prioritization, we were in disarray, with everyone working on different items and no clear strategy. Now we are genuinely focused on what matters.

Teams looking to apply similar strategies may also benefit from reading up on broader AI vulnerability scanning capabilities and on how AI threat detection works, for more context when building a coherent security program.

Case Study: Breaking the “Patch Everything” Mindset

The Before State

Six months before we adopted AI prioritization:

- 8,247 total vulnerabilities

- 3,186 rated “high” or “critical”

- Average time to remediate critical: 18 days (SLA was 2 days)

- Compliance: 34%

- Team morale: terrible

We prioritized purely on CVSS: if it was critical, we tried to patch it. We missed our SLA every time, and worse, we couldn’t tell whether we were actually reducing risk.

The Transition

We started small. I picked one application stack and piloted AI-based prioritization there. Here’s what changed:

- 412 findings in that stack

- The AI system flagged 23 as urgent risks

- Patching those 23 took three days

- After analysis, more than 380 findings moved to lower priority tiers

Then we measured the result: external attack simulation tools showed that fixing those 23 vulnerabilities eliminated 94 percent of potential attack paths into that environment.
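The 94 percent figure came from attack simulation tools, but the metric itself is easy to express: if each simulated path is listed as the set of vulnerabilities it chains, a path is broken once any one of them is fixed. The paths and IDs below are hypothetical, and the sketch ignores attackers rerouting through other weaknesses, which real tools account for by re-running the simulation.

```python
def paths_eliminated(attack_paths: list[set[str]], fixed: set[str]) -> float:
    """Share of attack paths broken because they rely on at least one fixed vulnerability."""
    if not attack_paths:
        return 0.0
    broken = sum(1 for path in attack_paths if path & fixed)
    return broken / len(attack_paths)


# Hypothetical simulation output: each path lists the vulnerabilities it chains.
paths = [{"V1", "V7"}, {"V2"}, {"V3", "V9"}, {"V4", "V5"}]
print(f"{paths_eliminated(paths, {'V1', 'V2', 'V3'}):.0%}")  # 75%
```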

The After State

After six months of full AI prioritization:

- Still roughly 8,000 total findings (new vulnerabilities keep appearing)

- Only 380 confirmed as critical or high risk by AI analysis

- Time to remediate critical: 3.2 days

- Compliance: 92%

- Team morale: greatly improved

Better still, we had real evidence of reduced business risk. External penetration testing found that exposed attack paths to critical systems had dropped by 78 percent.

Measuring Success: Beyond Compliance Metrics

What Actually Matters

We track these KPIs now:

Remediation Velocity by Risk Tier

- Critical: <48 hours

- High: <7 days

- Medium: <30 days
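Tracking velocity by tier needs little more than remediation times grouped by tier. A sketch, assuming closed findings are available as hypothetical `(tier, hours_to_remediate)` pairs and using the strict thresholds listed above:

```python
from statistics import median

# Strict SLA limits in hours, matching the tier targets above.
SLA_HOURS = {"critical": 48, "high": 7 * 24, "medium": 30 * 24}


def velocity_report(closed):
    """closed: list of (tier, hours_to_remediate) pairs for remediated findings."""
    report = {}
    for tier, limit in SLA_HOURS.items():
        times = [hours for t, hours in closed if t == tier]
        if times:
            report[tier] = {
                "median_hours": median(times),
                "within_sla": sum(h < limit for h in times) / len(times),
            }
    return report


closed = [("critical", 30), ("critical", 60), ("critical", 40), ("high", 100)]
print(velocity_report(closed))
```

Reporting the median alongside the SLA hit rate keeps one very slow fix from hiding behind an otherwise healthy average.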

Business Risk Reduction

- Percentage change in estimated breach cost

- Viable attack paths eliminated

- Reduction in internet-exposed critical vulnerabilities

Operational Efficiency

- Analyst time spent on high-value security work versus alert triage

- Reduction in false positive rate

- Patches rolled back due to conflicts (a sign that sequencing needs improvement)

Predictive Accuracy

- How many high-priority vulnerabilities later appeared in threat intel?

- Correlation between AI risk scores and actual real-world exploitation
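For the correlation KPI, a reasonable choice when the outcome is simply “exploited or not” is the point-biserial correlation, which is Pearson’s r computed against a 0/1 variable. The scores and outcomes below are made up for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient; with 0/1 ys this is the point-biserial r."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical quarter: AI risk score per finding and whether it was later exploited.
scores = [92, 85, 40, 33, 70, 15, 60, 20]
exploited = [1, 1, 0, 0, 1, 0, 0, 0]
r = pearson(scores, exploited)
print(f"point-biserial r = {r:.2f}")
```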

The Reality Check

AI vulnerability prioritization isn’t magic. It makes mistakes. We have had a vulnerability that we ranked low because of its low EPSS score get exploited anyway, though fortunately on a low-value system.

The key is that AI supports decisions; it doesn’t replace them. High-stakes calls (such as deferring patches on internet-facing systems) still need human review. We use ensemble models that combine several AI methods, and we run quarterly recalibration that compares predictions against reality.

What I do know for certain is that there is no going back to CVSS-only prioritization. Disclosures keep accelerating: estimates project around 50,000 CVEs in 2025, 11 percent more than in 2024 and 470 percent more than two years earlier.

It is impossible to rank 50,000 vulnerabilities by hand. You need AI.

Where This Goes Next

The development I’m most interested in is continuous threat exposure management (CTEM). Instead of running periodic scans, these systems monitor the attack surface and current risk in real time, reprioritizing minute by minute as the threat landscape shifts.

We’re also starting to explore AI-assisted remediation, where the AI doesn’t just prioritize but proposes specific fixes: “Install patch version 2.4.7, not 2.4.6, which has known compatibility problems with your load balancer configuration.”

The bottom line: vulnerability management used to mean fighting blindfolded. You patched and prayed you caught the important stuff. AI-driven prioritization removes the blindfold. You will never fix everything, but you can focus your limited time and resources on the weaknesses that actually threaten your business.

If you are still prioritizing purely on CVSS scores, you are fighting a 2025 threat with a 2005 strategy. Start with one application or environment, test AI prioritization there, measure the results, then scale.

Your security team will thank you. They might even get some sleep.

I’m a technology writer with a passion for AI and digital marketing. I create engaging, useful content that makes complex technology concepts accessible. My writing aims to make technology approachable, spark curiosity, and encourage participation. I keep researching innovation and technology. Let’s connect and talk technology!