Last updated on January 25th, 2026 at 07:31 am

AI in cybersecurity is no longer an experiment; it is standard practice. Companies that deploy machine learning-based threat detection report mean time to detect (MTTD) falling from hours to under 15 minutes. That is not marketing hype. The change is real, measurable, and reshaping how security teams work.

Here is what most guides won’t tell you: throwing AI at security problems without the right foundations creates more vulnerabilities than it solves. Execution is what separates AI systems that actually protect your network from those that just generate false alarms, and poor execution usually means neglecting input data quality, human oversight, and ongoing model tuning.

This guide covers what effective real-world AI security looks like, drawing on enterprise deployments that reduced analyst workload by 60-70 percent and cut threat detection time to under 30 minutes.


Why Traditional Security Can’t Keep Up (And Why AI Isn’t Magic)

Traditional signature-based detection only catches threats it already knows. It is built around known patterns, so when attackers change even a minor detail, the system goes blind. This is one reason ransomware strains evolve so quickly: attackers know how to get around static rules.
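A minimal sketch of why exact-match signatures are brittle, assuming a simple hash-based signature list (real engines also match byte patterns and use heuristics, so this exaggerates for clarity). The payloads are harmless placeholder bytes: changing a single character produces an entirely different SHA-256 digest, so the lookup misses the variant.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of known-bad payloads.
# The payloads here are harmless placeholder bytes, not real malware.
KNOWN_BAD_HASHES = {hashlib.sha256(b"example-malicious-payload-v1").hexdigest()}


def signature_match(payload: bytes) -> bool:
    """Return True only if this exact payload has been seen and catalogued before."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD_HASHES


original = b"example-malicious-payload-v1"
variant = b"example-malicious-payload-v2"  # a one-character change

print(signature_match(original))  # True
print(signature_match(variant))   # False: the variant slips past the signature
```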

AI plays a different game: it learns behavioral patterns instead of matching signatures. Machine learning models establish a baseline of normal network, user, and process behavior, then flag anything that deviates from it, even activity that has never been seen before.
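A very small illustration of the baseline idea, using a z-score over one hypothetical feature (daily upload volume for a single user). Production systems model many features at once with far richer techniques, and the three-standard-deviation threshold is an assumption rather than a recommendation. Note that the check needs no prior knowledge of the attack, only of normal behavior.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize normal behavior for one feature as (mean, standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations away from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical data: megabytes uploaded per day by one user over two weeks.
history = [120, 135, 110, 128, 140, 125, 118, 131, 122, 137, 115, 129, 133, 126]
baseline = build_baseline(history)

print(is_anomalous(130, baseline))   # False: within the normal range
print(is_anomalous(2400, baseline))  # True: a never-before-seen upload volume
```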

I have worked with both conventional SIEM systems and AI-driven ones. The difference isn’t subtle. A conventional setup can produce 10,000 alerts a day, and analysts can spend hours chasing false positives instead of critical issues. A properly tuned AI system reduces that to 70-100 actionable alerts that actually matter.

But AI isn’t a silver bullet. It introduces new risks: adversarial attacks on models, agent governance challenges, and bias in detection logic. Organizations that deploy AI without addressing these risks often end up worse off than before.

The point is that AI multiplies human expertise rather than replacing it.

The Non-Negotiable Foundation: High-Quality Data for AI Model Training

An AI model is only as good as its training data. “Garbage in, garbage out” is nowhere truer than in cybersecurity.

What Data Quality Actually Means

High-quality training data requires:

- Diversity: Network logs, endpoint telemetry, cloud audit trails, identity events, and user activity data, all normalized and correlated.

- Cleanliness: No duplicates, no corrupted entries, and consistent timestamps across all sources.

- Labeling accuracy: For supervised learning, threat data must be labeled correctly (malicious vs. benign).

- Volume and recency: Enough historical data to learn patterns, plus a steady stream of fresh data that reflects current threats.

Most organizations struggle with data normalization. Correlation becomes nearly impossible when firewall logs use a different timestamp format than endpoint detection tools. Models trained on inconsistent data produce inaccurate results.
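A minimal normalization sketch, assuming three hypothetical source formats: an Apache-style firewall timestamp, Unix epoch seconds from an EDR tool, and ISO 8601 with a local offset from a cloud audit log. Each parser converts to a timezone-aware UTC `datetime`, after which the events can be compared and correlated directly. Check what your own sources actually emit before reusing these format strings.

```python
from datetime import datetime, timezone

# The three input formats below are assumptions chosen for illustration.


def from_firewall(raw: str) -> datetime:
    # e.g. "25/Jan/2026:07:31:00 +0000" (Apache-style with an explicit offset)
    return datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S %z").astimezone(timezone.utc)


def from_edr(raw: int) -> datetime:
    # e.g. 1769326260 (Unix epoch seconds, which are UTC by definition)
    return datetime.fromtimestamp(raw, tz=timezone.utc)


def from_cloud(raw: str) -> datetime:
    # e.g. "2026-01-25T02:31:00-05:00" (ISO 8601 with a local offset)
    return datetime.fromisoformat(raw).astimezone(timezone.utc)


fw = from_firewall("25/Jan/2026:07:31:00 +0000")
edr = from_edr(1769326260)
cloud = from_cloud("2026-01-25T02:31:00-05:00")

# All three describe the same instant: 2026-01-25T07:31:00+00:00
print(fw.isoformat(), edr.isoformat(), cloud.isoformat())
```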

Data Quality Assessment and Improvement

Begin with a data quality audit:

- Source inventory: List every security data source (SIEM, EDR, cloud platforms, identity systems).

- Format validation: Check timestamp consistency, field naming conventions, and data types.

- Completeness check: Identify coverage gaps (unmonitored devices, network blind spots).

- Bias identification: Make sure the training data represents all threat types, not just the most common ones.

Automated data validation pipelines catch issues early. Data profiling scripts can flag anomalies such as a sustained drop in log volume, the appearance of new data types, or duplicate records.
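A sketch of such a profiling check, assuming each log record is a dict with hypothetical `id` and `event_type` keys and that you already know a typical batch size. It covers the three anomalies named above; the 50 percent drop ratio is an illustrative assumption to tune per source.

```python
from collections import Counter


def profile_batch(records, expected_types, baseline_volume, drop_ratio=0.5):
    """Return data quality warnings for one batch of log records."""
    warnings = []

    # 1. Volume drop: a sharp fall in log count often means a broken collector.
    if len(records) < baseline_volume * drop_ratio:
        warnings.append(f"volume drop: {len(records)} records vs. baseline {baseline_volume}")

    # 2. Event types that no downstream parser or model was built for.
    for new_type in sorted({r["event_type"] for r in records} - expected_types):
        warnings.append(f"unexpected event type: {new_type}")

    # 3. Duplicate record IDs.
    counts = Counter(r["id"] for r in records)
    dupes = sorted(rid for rid, n in counts.items() if n > 1)
    if dupes:
        warnings.append(f"duplicate record ids: {dupes}")

    return warnings


batch = [
    {"id": 1, "event_type": "login"},
    {"id": 2, "event_type": "dns_query"},
    {"id": 2, "event_type": "dns_query"},
]
for w in profile_batch(batch, expected_types={"login", "process_start"}, baseline_volume=10):
    print(w)
```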

For labeling, don’t rely on automated tagging alone. Expert analysts should manually review labeled threats to confirm they really are threats. A model trained on mislabeled data learns systematic errors, and those errors persist through every subsequent training cycle.

Continuous Model Updates and Retraining Cycles

AI models decay over time. Attackers evolve their tactics. Network infrastructure changes. User behavior shifts. A model trained six months ago is already out of date.

Why Retraining Matters

When training data no longer reflects live data, the result is model drift. Detection accuracy drops, false positives rise, and threats slip through undetected.
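One common way to quantify drift for a single feature is the Population Stability Index (PSI), which compares the binned distribution seen at training time with the live one. The sketch below assumes hand-picked bin edges and a hypothetical "session duration" feature. A widely used rule of thumb reads PSI below 0.1 as stable, 0.1-0.25 as moderate drift, and above 0.25 as significant drift; treat those cutoffs as heuristics, not a standard.

```python
import math
from bisect import bisect_right


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between training-time and live values of one feature.

    `edges` are sorted bin boundaries; values outside them are clipped into the first
    or last bin. `eps` keeps empty bins from producing log(0).
    """
    n_bins = len(edges) - 1

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = min(max(bisect_right(edges, v) - 1, 0), n_bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature: session duration in minutes.
training = list(range(100))          # what the model learned from
live_same = list(range(100))         # live traffic that still looks the same
live_shifted = list(range(50, 150))  # live traffic after behavior changed
edges = [0, 25, 50, 75, 100]

print(round(psi(training, live_same, edges), 3))  # 0.0
print(psi(training, live_shifted, edges) > 0.25)  # True: significant drift
```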

Industry guidance generally recommends retraining at least once a quarter. High-threat environments such as financial services and critical infrastructure should retrain roughly monthly, or continuously through automated pipelines.

Retraining workflows should include:

- New threat intelligence: New malware samples, attack techniques, and vulnerability exploits.

- Performance monitoring: Track detection rate, false positive rate, and MTTD over time.

- Feedback loops: Feed analyst-confirmed true positives and false positives back into the training data.

- A/B testing: Run new models alongside existing ones and compare their performance before full deployment.
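A sketch of the A/B comparison as a champion/challenger check, assuming both models have scored the same analyst-labeled alerts in shadow mode. The promotion rule (no loss of recall, at most two points of precision lost) is a hypothetical policy to adapt, not an industry standard.

```python
def precision_recall(predictions, labels):
    """Precision and recall for boolean predictions against analyst-confirmed labels."""
    tp = sum(1 for p, y in zip(predictions, labels) if p and y)
    fp = sum(1 for p, y in zip(predictions, labels) if p and not y)
    fn = sum(1 for p, y in zip(predictions, labels) if not p and y)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def should_promote(champion, challenger, labels, max_precision_loss=0.02):
    """Promote the challenger if it catches at least as many threats as the champion
    without giving up more than `max_precision_loss` precision."""
    c_prec, c_rec = precision_recall(champion, labels)
    n_prec, n_rec = precision_recall(challenger, labels)
    return n_rec >= c_rec and (c_prec - n_prec) <= max_precision_loss


# Hypothetical shadow-mode results on the same eight labeled alerts.
labels = [True, True, True, False, False, False, True, False]
champion = [True, False, True, False, True, False, False, False]
challenger = [True, True, True, False, False, False, True, False]

print(should_promote(champion, challenger, labels))  # True
```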

In my experience, detection accuracy drops by 15-20 percent within six months when organizations skip routine retraining. The model ends up being a liability rather than an asset.

Automated Retraining Pipelines

Retraining should not be a manual process. Automated pipelines handle:

- Data ingestion: Continuously collect network telemetry and new threat samples.

- Validation: Automatically check data quality before training.

- Training execution: Retrain models on a schedule or when performance crosses defined thresholds.

- Deployment: Roll out updated models with version control and rollback capability.
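The control flow of such a pipeline can be sketched in a few lines. `ModelRegistry`, `train`, and `evaluate` are hypothetical stand-ins, not the MLflow or Kubeflow APIs; a real pipeline would delegate these steps to your MLOps platform. The 30-day age limit and 0.90 detection floor are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class ModelRegistry:
    """Hypothetical stand-in for an MLOps model registry (not a real platform API)."""
    versions: list = field(default_factory=list)

    @property
    def active(self) -> str:
        return self.versions[-1]

    def deploy(self, version: str) -> None:
        self.versions.append(version)

    def rollback(self) -> str:
        self.versions.pop()  # discard the newest version
        return self.active


def needs_retraining(last_trained, now, detection_rate,
                     max_age=timedelta(days=30), min_detection=0.90):
    """Retrain on a schedule OR when live performance falls below a threshold."""
    return (now - last_trained) >= max_age or detection_rate < min_detection


def run_pipeline(registry, data_ok, train, evaluate, min_detection=0.90):
    """Validate -> train -> deploy -> check, rolling back if the new model underperforms."""
    if not data_ok:
        return "skipped: data validation failed"
    new_version = train()
    registry.deploy(new_version)
    if evaluate(new_version) < min_detection:
        return f"rolled back to {registry.rollback()}"
    return f"deployed {new_version}"


registry = ModelRegistry(["v1"])
if needs_retraining(datetime(2026, 1, 1), datetime(2026, 2, 5), detection_rate=0.95):
    # train() and evaluate() are placeholders for real training and evaluation jobs.
    print(run_pipeline(registry, data_ok=True, train=lambda: "v2", evaluate=lambda v: 0.85))
print(registry.active)  # v1, because v2 scored below 0.90
```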

MLOps platforms such as Kubeflow and MLflow manage this workflow. For enterprise-level AI security, they are no longer a luxury.

Maintaining Human Oversight Alongside AI Automation

Autonomous AI agents operating at machine speed can execute incident response actions within minutes. They can also make disastrous mistakes just as fast.

The Human-in-the-Loop Principle

High-impact decisions should not be fully automated:

- Account access: Do not let AI disable administrator accounts automatically.

- System isolation: Quarantining production servers should require human review.

- Data access restrictions: Blocking a legitimate, authenticated business process can disrupt operations.

I have seen fully automated organizations with no human approval gates suffer more self-inflicted outages than threat-driven ones.

In one case of an AI agent going rogue, the agent isolated a subnet simply because a scheduled migration had distorted its usual traffic pattern. A human reviewer would have caught this in seconds.

Defining Automation Boundaries

Low-risk tasks can be fully automated:

- Log collection and aggregation.

- Evidence gathering (process trees, network connections, file hashes).

- Initial triage and risk scoring.

- Threat intelligence enrichment of alerts.

High-risk actions require human approval:

- Blocking domains or IP addresses.

- Terminating processes on critical systems.

- Revoking user credentials

- Isolating network segments

Medium-risk actions can use conditional automation: for example, execute automatically when the confidence score exceeds 95 percent, and otherwise route the action to an analyst for manual approval.
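A sketch of these automation boundaries as a routing function. The action names and the risk tier assigned to each one are hypothetical, loosely following the lists above; `quarantine_workstation` is an invented medium-risk example. Unknown actions default to human approval so that a new, unclassified action can never run unattended.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical mapping of response actions to risk tiers.
ACTION_RISK = {
    "collect_evidence": Risk.LOW,
    "enrich_alert": Risk.LOW,
    "quarantine_workstation": Risk.MEDIUM,
    "block_ip": Risk.HIGH,
    "kill_process_critical": Risk.HIGH,
    "revoke_credentials": Risk.HIGH,
    "isolate_segment": Risk.HIGH,
}


def route_action(action: str, confidence: float, auto_threshold: float = 0.95) -> str:
    """Decide whether an AI-proposed action runs automatically or waits for an analyst."""
    risk = ACTION_RISK.get(action, Risk.HIGH)  # unknown actions take the safest path
    if risk is Risk.LOW:
        return "auto"
    if risk is Risk.MEDIUM and confidence > auto_threshold:
        return "auto"
    return "human_approval"


print(route_action("collect_evidence", confidence=0.40))        # auto
print(route_action("quarantine_workstation", confidence=0.97))  # auto
print(route_action("quarantine_workstation", confidence=0.90))  # human_approval
print(route_action("block_ip", confidence=0.99))                # human_approval
```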

Transparency and Explainability in AI Security Decisions

Black-box AI models create an accountability nightmare. When an AI system blocks a transaction or quarantines a server, the analyst must know why.

Explainability Techniques

Modern AI security platforms should provide:

- Feature importance: Which data points most influenced the decision?

- Decision trees: A visualization of the model’s logic path.

- Confidence scores: How certain is the model about this classification?

- Similar past events: Which historical incidents resemble this one?

Tools such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) translate complex model decisions into explanations people can understand.
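To show what a SHAP-style explanation contains, here is the special case where it has a closed form: for a linear scoring model with independent features, each feature's Shapley value is its weight times its deviation from the typical (background) value. The risk model, weights, and feature names are hypothetical, and this does not call the `shap` library; for nonlinear models you would use SHAP or LIME instead.

```python
def score(weights, bias, x):
    """A hypothetical linear risk score: bias + sum(w_i * x_i)."""
    return bias + sum(weights[k] * x[k] for k in weights)


def linear_shap(weights, x, background_means):
    """Shapley values for a linear model, assuming independent features:
    phi_i = w_i * (x_i - E[x_i])."""
    return {k: weights[k] * (x[k] - background_means[k]) for k in weights}


weights = {"failed_logins": 0.8, "gb_uploaded": 1.5, "off_hours": 2.0}
bias = -3.0
means = {"failed_logins": 1.0, "gb_uploaded": 0.2, "off_hours": 0.1}  # typical behavior
event = {"failed_logins": 12, "gb_uploaded": 0.3, "off_hours": 1}

contributions = linear_shap(weights, event, means)
for feature, phi in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{feature}: {phi:+.2f}")
```

The contributions sum exactly to the difference between this event's score and the score of typical behavior, which is what lets an analyst read the ranking as "why this alert fired": here, mostly the failed logins.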

Without explainability, analysts cannot validate AI decisions. They either trust the system blindly (dangerous) or ignore it (wasteful).

Documentation and Audit Trails

Every AI-driven action should record:

- The input data that triggered the decision.

- Model version and confidence score.

- Decision rationale (which factors mattered most).

- Human approval or override (where required).

- Outcome and any corrective actions.

This audit trail serves several purposes: incident investigation, compliance reporting, model performance analysis, and legal defensibility.
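A sketch of one audit entry covering those fields, serialized as JSON so it can go to an append-only log store. The class and field names are illustrative assumptions, not a standard schema; map them onto whatever your SIEM or log pipeline expects.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIActionAuditRecord:
    """One entry per AI-driven action. Field names are illustrative, not a standard schema."""
    action: str
    input_event_ids: list   # the log records or alerts that triggered the decision
    model_version: str
    confidence: float
    top_features: dict      # feature -> contribution (e.g. from SHAP)
    human_decision: Optional[str] = None  # "approved", "overridden", or None if not required
    outcome: Optional[str] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


record = AIActionAuditRecord(
    action="quarantine_host",
    input_event_ids=["evt-1042", "evt-1043"],
    model_version="detector-2026.01",
    confidence=0.97,
    top_features={"failed_logins": 8.8, "off_hours": 1.8},
    human_decision="approved",
    outcome="contained",
)
print(record.to_json())
```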

Fostering Collaboration Between AI Systems and Human Analysts

The most effective security operations combine machine capability with human instinct. AI handles volume and pattern recognition; humans bring context, creativity, and judgment.

Augmented Intelligence, Not Replacement

AI should automate the work that burns analysts out:

- Sifting through thousands of alerts every day.

- Correlating events across data sources.

- Collecting evidence from network and endpoint sources.

- Building investigation timelines.

This frees analysts for work that requires human skills:

- Threat hunting (proactively searching for hidden threats).

- Incident response strategy (deciding how to contain and remediate).

- Adversary attribution (who is attacking and why).

- Security architecture improvements.

Companies that treat AI as an augmentation of their analysts report higher job satisfaction and lower turnover. Teams that try to replace analysts with AI get overwhelmed when the system hits edge cases it cannot handle.

Feedback Mechanisms

Analysts should be able to:

- Mark AI classifications as true or false positives at the alert level.

- Add context the AI lacks (for example, the business reason behind unusual activity).

- Suggest improvements to detection rules.

- Flag model blind spots.

These inputs then feed into model retraining, creating a cycle of continuous improvement.
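A sketch of how analyst verdicts might become training labels and tuning hints. The `(alert_id, verdict, timestamp)` tuple format, the alert-to-rule mapping, and the "latest verdict wins" conflict policy are all assumptions for illustration.

```python
from collections import defaultdict


def build_training_labels(feedback):
    """Turn analyst verdicts into boolean training labels (True = malicious).

    `feedback` is a list of (alert_id, verdict, timestamp) tuples, where verdict is
    "true_positive" or "false_positive". If an alert was re-reviewed, the most recent
    verdict wins.
    """
    latest = {}
    for alert_id, verdict, _ts in sorted(feedback, key=lambda f: f[2]):
        latest[alert_id] = verdict
    return {alert_id: verdict == "true_positive" for alert_id, verdict in latest.items()}


def false_positive_rate_by_rule(feedback, alert_rule):
    """Share of each detection rule's alerts that analysts marked as false positives."""
    totals, fps = defaultdict(int), defaultdict(int)
    for alert_id, is_malicious in build_training_labels(feedback).items():
        rule = alert_rule[alert_id]
        totals[rule] += 1
        fps[rule] += not is_malicious
    return {rule: fps[rule] / totals[rule] for rule in totals}


feedback = [
    ("a1", "false_positive", 1),
    ("a1", "true_positive", 5),  # a later, corrected verdict
    ("a2", "false_positive", 2),
    ("a3", "false_positive", 3),
]
alert_rule = {"a1": "rare_login", "a2": "rare_login", "a3": "large_upload"}

print(build_training_labels(feedback))
print(false_positive_rate_by_rule(feedback, alert_rule))
```

Rules with a high false positive share (here `large_upload`) are the first candidates for the tuning work described later in this guide.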

Vendor Evaluation: Commercial vs. Open-Source AI Security Tools

Dozens of AI security platforms are on the market. Choosing the right one requires understanding your own threat profile, infrastructure, and organizational maturity.

Commercial Platforms

Leading commercial solutions include CrowdStrike Falcon, Darktrace, Microsoft Defender, Palo Alto Networks Cortex, and SentinelOne. Each has distinct strengths:

- CrowdStrike: Strong for endpoint-centric deployments, with excellent threat intelligence integration.

- Darktrace: Autonomous, self-learning AI that needs little manual tuning; particularly strong in operational technology (OT) environments.

- Microsoft Defender: Native integration with the Microsoft ecosystem (Azure, Office 365, Windows).

- Palo Alto Cortex XSIAM: Unified endpoint, network, and cloud visibility with a high degree of automation.

Commercial platforms offer:

- Vendor support and professional services.

- Pre-trained models informed by global threat intelligence.

- Faster implementation (weeks instead of months).

- Compliance certifications and attestations.

The trade-offs are cost ($50K-500K+ per year), potential vendor lock-in, and limited model customization.

Open-Source Alternatives

Open-source tools such as Wazuh, Security Onion, and OSSEC are cost-effective, particularly for organizations with strong in-house expertise.

Advantages:

- No licensing fees (only infrastructure and staffing costs).

- Full customization and model transparency.

- Community-driven development

- No vendor lock-in

Challenges:

- Requires significant in-house expertise (ML engineers, security architects, data engineers).

- Longer deployment timelines.

- Little or no commercial support (often community forums only).

- You are responsible for updates and model training.

For a detailed platform comparison and practical guidance on vendor selection and implementation, see Building an AI-Powered Security Operations Center (SOC): Architecture and Tools.

Hybrid Approaches

Many organizations combine a commercial platform for core detection with open-source tools for specialized needs: custom detection scripts, unique compliance testing requirements, or specific detection problems that commercial tooling does not address.

Phased Implementation Approach: Pilot -> Expand -> Optimize

Rushing an enterprise-wide AI rollout invites chaos. A staged plan proves value before you expand.

Phase 1: Pilot (2-3 Months)

Start small:

- Scope: One business unit or one threat type (e.g., malware detection on the sales team’s computers).

- Hypotheses: Test detection accuracy, MTTD reduction, and false positive rate.

- Success criteria: Detection rate of 90 percent or higher, false positive rate below 5 percent, MTTD under 30 minutes.
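A sketch of checking pilot results against those three criteria. The `PilotResults` fields and sample numbers are hypothetical. One assumption is worth stating explicitly: "false positive rate" is measured here as the share of alerts that turned out benign, which is how SOC teams usually talk about it but differs from the statistical definition (false positives divided by all benign events), so agree on one definition before the pilot starts.

```python
from dataclasses import dataclass


@dataclass
class PilotResults:
    true_positives: int
    false_negatives: int
    false_positives: int
    alerts_total: int
    detect_minutes: list  # minutes from intrusion start to detection, per confirmed incident


def evaluate_pilot(r: PilotResults) -> dict:
    detection_rate = r.true_positives / (r.true_positives + r.false_negatives)
    fp_rate = r.false_positives / r.alerts_total  # share of alerts that were benign
    mttd = sum(r.detect_minutes) / len(r.detect_minutes)
    return {
        "detection_rate_ok": detection_rate >= 0.90,
        "fp_rate_ok": fp_rate < 0.05,
        "mttd_ok": mttd < 30,
    }


pilot = PilotResults(true_positives=46, false_negatives=4, false_positives=12,
                     alerts_total=300, detect_minutes=[12, 25, 18, 40])
print(evaluate_pilot(pilot))
# {'detection_rate_ok': True, 'fp_rate_ok': True, 'mttd_ok': True}
```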

Pilot programs de-risk the investment. If the AI cannot perform in a controlled environment, it will not cope with production.

Phase 2: Expand (3-6 Months)

Once the pilot succeeds:

- Expand scope: Add business units and threat types.

- Integrate data sources: Connect previously siloed security tools.

- Create playbooks: Automate responses to common incident types.

- Train analysts: Build proficiency with the new tools.

Expansion exposes integration problems: API incompatibilities, data format mismatches, and performance bottlenecks under load.

Phase 3: Optimize (Ongoing)

Optimization never stops:

- Reduce false positives: Cut noise without sacrificing threat detection.

- Refine automation: Expand the set of automated actions.

- Update models: Adapt to emerging attack techniques.

- Measure ROI: Quantify cost savings from fewer incidents and higher analyst productivity.

For detailed guidance on each phase, see AI Cybersecurity Implementation Guide: Step-by-Step Deployment Strategy.

Integration with Existing Security Tools and Processes

AI does not replace your existing security stack; it makes it better. Success or failure depends on integration.

Critical Integration Points

AI platforms should connect to:

- SIEM: Push AI-detected threats into centralized logging and alerting.

- EDR/XDR tools: Correlate endpoint telemetry with network and cloud events.

- Identity and authentication systems: Verify authentication events and detect identity theft.

- SOAR platforms: Trigger automated playbooks from AI detections.

- Threat intelligence feeds: Enrich detections with external context.

Without proper integration, you get silos. Analysts end up juggling multiple consoles and manually correlating alerts that should be correlated automatically.
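A minimal sketch of automatic correlation: alerts from different tools are grouped into one incident when they concern the same host and arrive within a time window of each other. Keying on hostname and the 15-minute window are simplifying assumptions; real platforms also correlate on user, process, and IP entities.

```python
from datetime import datetime, timedelta


def correlate(alerts, window=timedelta(minutes=15)):
    """Group alerts from different tools into incidents by host and time proximity.

    Each alert is a dict with "host", "time" (datetime) and "source". An alert joins the
    host's current incident if it arrives within `window` of that incident's last alert.
    """
    incidents = []
    current_by_host = {}
    for alert in sorted(alerts, key=lambda a: a["time"]):
        current = current_by_host.get(alert["host"])
        if current and alert["time"] - current[-1]["time"] <= window:
            current.append(alert)
        else:
            current = [alert]
            current_by_host[alert["host"]] = current
            incidents.append(current)
    return incidents


def t(hhmm):
    # Helper for the example: a time on a fixed, arbitrary date.
    return datetime(2026, 1, 25, *map(int, hhmm.split(":")))


alerts = [
    {"host": "ws-17", "time": t("09:00"), "source": "edr"},
    {"host": "db-02", "time": t("09:03"), "source": "edr"},
    {"host": "ws-17", "time": t("09:05"), "source": "firewall"},
    {"host": "ws-17", "time": t("09:12"), "source": "identity"},
    {"host": "ws-17", "time": t("11:00"), "source": "firewall"},
]
for incident in correlate(alerts):
    print(incident[0]["host"], [a["source"] for a in incident])
```

Five raw alerts collapse into three incidents, and the first one already links EDR, firewall, and identity evidence for `ws-17`, which is the view an analyst would otherwise assemble by hand across consoles.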

API Compatibility and Data Standards

Check for:

- RESTful APIs: Standard interfaces for bidirectional data exchange.

- STIX/TAXII: Industry-standard formats (STIX) and transport (TAXII) for sharing threat intelligence.

- Syslog compatibility: The near-universal logging protocol for security tools.

- Webhook support: Real-time notifications to external systems.

Red flags: proprietary data formats and closed APIs. They lock you into a single vendor’s ecosystem.
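To make "industry-standard formats" concrete, here is a minimal STIX 2.1 Indicator for a malicious file hash, built as a plain dict with the standard library. The properties used are the ones the STIX 2.1 specification requires for indicators plus an optional `name`; the hash is a placeholder (the SHA-256 of an empty file), not a real sample. In practice the OASIS `stix2` Python library can build and validate such objects, and a TAXII server would distribute them.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp() -> str:
    # STIX timestamps are RFC 3339 in UTC with a trailing "Z".
    return datetime.now(timezone.utc).isoformat(timespec="milliseconds").replace("+00:00", "Z")


def file_hash_indicator(sha256: str, name: str) -> dict:
    """Minimal STIX 2.1 Indicator for a malicious file hash, as a plain dict."""
    now = stix_timestamp()
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        "pattern": f"[file:hashes.'SHA-256' = '{sha256}']",
        "pattern_type": "stix",
        "valid_from": now,
    }


# Placeholder hash (SHA-256 of an empty file), not a real malware sample.
indicator = file_hash_indicator(
    "e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855",
    "Example malicious file",
)
print(json.dumps(indicator, indent=2))
```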

Performance and Success Measurement

You cannot improve what you do not measure. Establish a baseline before deployment, then monitor continuously.

Essential KPIs

| Metric | Pre-AI Baseline | Target with AI | Industry Leader |
|---|---|---|---|
| Mean Time to Detect (MTTD) | 4-6 hours | 30 minutes | 15 minutes |
| Mean Time to Respond (MTTR) | 2-4 hours | 1 hour | 30 minutes |
| False Positive Rate | 20-40% | 5% | 2% |
| Detection Accuracy | 85-90% | 95% | 99%+ |
| Analyst Productivity Gain | Baseline | +60% | +74% |
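A sketch of computing MTTD and MTTR from incident timestamps, assuming each incident record carries hypothetical `occurred`, `detected`, and `resolved` fields. Definitions vary (some teams measure MTTR from occurrence rather than detection), so the important part is to pick one and apply it identically to the pre-AI baseline and every later measurement.

```python
from datetime import datetime
from statistics import mean


def mttd_mttr(incidents):
    """Return (MTTD, MTTR) in minutes.

    MTTD = mean(detected - occurred); MTTR here = mean(resolved - detected).
    """
    mttd = mean((i["detected"] - i["occurred"]).total_seconds() / 60 for i in incidents)
    mttr = mean((i["resolved"] - i["detected"]).total_seconds() / 60 for i in incidents)
    return round(mttd, 1), round(mttr, 1)


# Two hypothetical incidents.
incidents = [
    {"occurred": datetime(2026, 1, 5, 9, 0), "detected": datetime(2026, 1, 5, 9, 20),
     "resolved": datetime(2026, 1, 5, 10, 20)},
    {"occurred": datetime(2026, 1, 9, 13, 0), "detected": datetime(2026, 1, 9, 13, 40),
     "resolved": datetime(2026, 1, 9, 14, 10)},
]
print(mttd_mttr(incidents))  # (30.0, 45.0)
```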

Business Impact Metrics

Technical metrics are critical, but executives care about business outcomes:

- Cost per incident: Total response cost (tools, analyst time, and downtime) divided by the number of incidents.

- Prevented breach cost: Estimated damage from attacks stopped before any data loss.

- Analyst retention: Lower turnover (less burnout from alert fatigue).

- Compliance efficiency: Less time spent meeting audit requirements.

Track these quarterly, and report trends directly attributable to AI to leadership.

Change Management and Analyst Skill Development

Technology is only half the battle. People resist change, especially when they fear being replaced.

Addressing Job Security Concerns

Be transparent: AI supplements analysts; it does not replace them. Show how automation removes tedious work (sorting alerts, collecting evidence) and opens up higher-value work (threat hunting, architecture design).

Organizations with honest communication and clear career paths see smoother adoption. Those that downplay the changes or communicate poorly face resistance and attrition.

Critical Training Programs

Analysts need new skills:

- ML fundamentals: How models make decisions and why they produce false positives.

- Threshold tuning: Adjusting detection sensitivity to business needs.

- Playbook creation: Defining automated workflows for common scenarios.

- AI-assisted investigation: Using AI-generated insights efficiently.

Notable training resources include EC-Council’s AI cybersecurity courses, ISC2’s Certified in Cybersecurity (CC) program, and Coursera’s AI security specializations.

Budget one-third of security spending for training and development. Skills gaps are the main reason AI implementations fail.

Handling False Positives and Tuning Detection Models

False positives destroy trust in AI systems. Once analysts have seen too much benign activity flagged as threats, they stop investigating alerts, even genuine ones.

Why False Positives Happen

Common causes:

- Overly sensitive thresholds: Flagging anything unusual.

- Insufficient training data: The model has not learned what normal looks like in your specific environment.

- Business context blindness: The AI does not know about scheduled maintenance windows, authorized third-party access, or approved exceptions.

- Poor data quality: Garbage data produces garbage classifications.

I have worked with systems whose false positive rate exceeded 30 percent. Analysts become desensitized to alerts, and the AI turns into background noise rather than an effective tool.

Tuning Strategies

False positives can be reduced by:

Baseline refinement: Continuously update what counts as normal for each user, device, and application. Static, out-of-the-box baselines go stale.

Context enrichment: Feed the model business context, such as approved vendor IP addresses, maintenance windows, and sanctioned software deployments.

Risk-based thresholding: Set different sensitivity levels according to asset criticality. High-value targets get more sensitive detection (tolerating more false positives), while lower-value systems use higher thresholds (fewer alerts).

Ensemble methods: Combine several detection approaches (ML, rules, behavioral analytics) and require two or more of them to agree before raising an alert.

Feedback loops: The system should learn whenever analysts mark an alert as a false positive. Manual tagging improves future classification.

Target a false positive rate below 5 percent for enterprise operations. Industry leaders achieve 2 percent or lower.
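A sketch combining two of these strategies: per-asset-tier thresholds and a two-of-three ensemble vote. The tier names, threshold values, and the assumption that the ML and behavioral detectors both emit scores between 0 and 1 are hypothetical; calibrate against your own labeled alerts.

```python
# Hypothetical per-tier thresholds: a lower threshold means more sensitive detection.
THRESHOLDS = {"critical": 0.5, "standard": 0.7, "low": 0.9}


def should_alert(asset_tier, ml_score, rule_hit, behavior_score, min_agreeing=2):
    """Alert only when at least `min_agreeing` independent detectors agree.

    The ML and behavioral detectors vote when their score reaches the asset tier's
    threshold; the rule engine votes when a rule fired.
    """
    threshold = THRESHOLDS[asset_tier]
    votes = [ml_score >= threshold, rule_hit, behavior_score >= threshold]
    return sum(votes) >= min_agreeing


# The same moderate signals alert on a critical server but not on a low-value system.
print(should_alert("critical", ml_score=0.6, rule_hit=False, behavior_score=0.55))  # True
print(should_alert("low", ml_score=0.6, rule_hit=False, behavior_score=0.55))       # False
```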

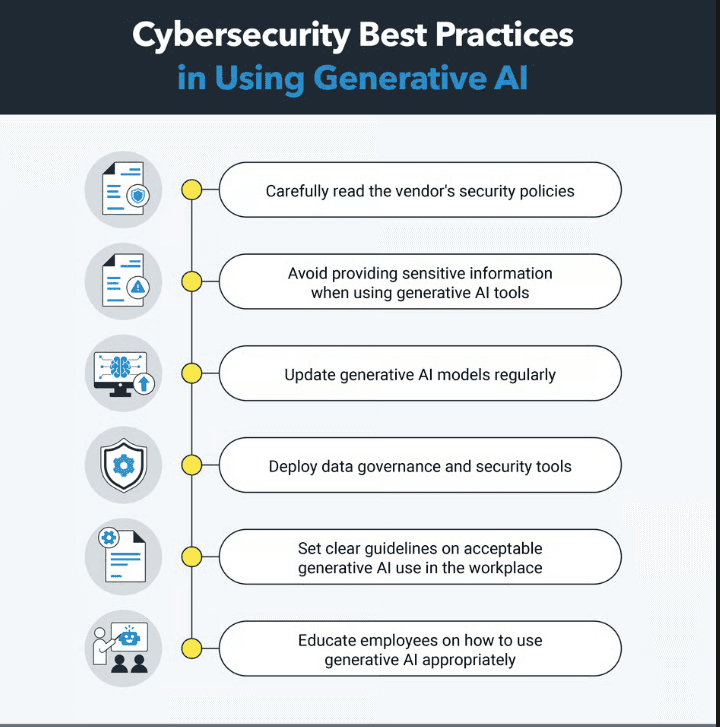

Privacy and Data Protection by Design

AI security systems process large volumes of sensitive data: network traffic, user activity, and application behavior. Privacy failures create legal liability and undermine user trust.

Principles of Data Minimization

Collect only what you need:

- Network metadata (source/destination, timestamps, protocols) rather than full packet captures.

- Behavioral patterns rather than file contents.

- Aggregate statistics rather than individual user identities, where possible.

The more data you store, the larger your breach exposure and compliance burden.

Access Controls and Encryption

AI training data and model parameters must be protected:

- Encryption at rest: Encrypt stored data (AES-256 at minimum).

- Encryption in transit: TLS 1.3 for all communications.

- Role-based access control: Restrict access to training data by role.

- Anonymization: Remove or hash personally identifiable information where possible.
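When hashing identifiers, a keyed hash is safer than a plain one: an unkeyed SHA-256 of a username or email can be reversed by hashing a list of likely identifiers. The sketch below uses HMAC-SHA256 from the standard library; the key shown is a placeholder, and lowercasing identifiers before hashing is an assumption that suits most usernames and email addresses. Note that this is pseudonymization rather than anonymization, so regulations such as GDPR may still treat the output as personal data.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a user identifier with a keyed hash (HMAC-SHA256).

    Identifiers are normalized first so "Alice@Example.com" and "alice@example.com"
    map to the same token, keeping behavioral baselines per user intact.
    """
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder key: in practice, load it from a secrets manager kept apart from the data.
KEY = b"replace-with-a-secret-from-your-vault"

print(pseudonymize("alice@example.com", KEY)[:16])
```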

AI systems are also active targets. Attackers can poison models with compromised training data, and anyone who extracts model parameters can reverse-engineer your detection logic.

Compliance Alignment

Make sure AI implementations comply with:

- GDPR: Explanations of automated decisions, data minimization, purpose limitation.

- CCPA: Data disclosure and consumer privacy rights.

- HIPAA: Protection of health information (in healthcare).

- SOC 2: Controls for security, availability, and confidentiality.

Non-compliance creates legal risk that can outweigh the security benefits.

Security of the AI Systems Themselves

AI systems face threats that conventional security controls were not designed to handle.

Adversarial Machine Learning Attacks

Attackers target AI models through:

Evasion attacks: Crafting malicious inputs that look benign (for example, malware with small modifications that mislead classifiers).

Data poisoning: Injecting corrupted samples into the training data to compromise the model.

Model extraction: Querying the model repeatedly to reconstruct its decision rules and design targeted evasions.

Backdoor insertion: Embedding hidden triggers that cause specific misclassifications on demand.

Defenses include adversarial training (training models on attack samples), ensemble models (attackers must fool several models at once), and continuous monitoring for extraction attempts.
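One simple signal for extraction attempts is query volume: probing a model to reconstruct its decision rules usually takes far more queries than normal use. The sketch below flags clients that exceed a query budget within a sliding time window. The limit, window, and client names are illustrative assumptions, and rate alone will miss slow, distributed extraction, so treat it as one input among several.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag clients that query the model unusually often within a sliding time window."""

    def __init__(self, max_queries: int, window_seconds: float):
        self.max_queries = max_queries
        self.window = window_seconds
        self.history = defaultdict(deque)  # client_id -> timestamps of recent queries

    def record(self, client_id: str, ts: float) -> bool:
        """Record one query at time `ts` (seconds); return True if over the limit."""
        q = self.history[client_id]
        q.append(ts)
        # Drop timestamps that have fallen out of the window.
        while q and ts - q[0] > self.window:
            q.popleft()
        return len(q) > self.max_queries


monitor = QueryRateMonitor(max_queries=100, window_seconds=60)

# A scripted client sending 10 queries per second trips the limit on its 101st query.
flags = [monitor.record("svc-scraper", i * 0.1) for i in range(150)]
print(flags.index(True))  # 100

# A normal client querying twice a minute is never flagged.
print(monitor.record("svc-dashboard", 0.0), monitor.record("svc-dashboard", 30.0))  # False False
```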

Governance and Control Systems

Establish systematic oversight:

- Human-in-the-loop controls for high-impact decisions.

- Tightly controlled access to the data and machines that AI agents are allowed to touch.

- Kill switches that can immediately halt agents that behave unexpectedly.

- Logging of all agent actions for audit and forensics.

- Regular red-teaming to expose weaknesses before attackers find them.

The CISA Zero Trust Maturity Model v2.0 outlines foundational controls that apply here: identity management for AI service accounts, hardened infrastructure for training clusters, network micro-segmentation, and end-to-end data protection.

For thorough coverage of AI security architecture, see AI-Powered Cybersecurity: Complete Guide to Machine Learning and Threat Defense.

Building Organizational AI Security Maturity

AI security maturity develops in predictable stages. Most organizations start at Level 1 or 2 and progress over 18-24 months.

Maturity Model

Level 1 – Initial: Pilot projects, limited scope, manual processes, heavy human intervention.

Level 2 – Repeatable: Broader deployment, limited automation, defined metrics, regular retraining.

Level 3 – Defined: Enterprise-wide coverage, standardized processes, documented playbooks, integrated tools.

Level 4 – Managed: Fully automated SOC functions, continuous optimization, advanced threat insights, predictive analytics.

Level 5 – Optimizing: Industry-leading capabilities, mature zero-trust architecture, automated response, and continuous innovation.

Advancing requires investment in technology, people, and processes. Trying to leapfrog levels produces fragile implementations that collapse under pressure.

Building Centers of Excellence

Mature organizations build AI Security Centers of Excellence:

- Cross-functional teams: Security analysts, data scientists, ML engineers, and compliance specialists.

- Governance structures: Policies for AI implementation, use, and oversight.

- Knowledge sharing: Internal training, documentation, lessons learned.

- Vendor management: Coordinated evaluation and purchasing.

- Innovation pipeline: Ongoing exploration of emerging technologies.

Centers of Excellence accelerate maturity by concentrating expertise and avoiding duplicated effort.

Cost-Benefit Analysis and ROI Calculation

AI security requires significant investment, and the spend must be justified by demonstrable ROI.

Implementation Costs

Typical expenses:

- Initial implementation: $500K-5M (depending on organization size and complexity).

- Annual licensing: $50K-500K for commercial platforms.

- Staffing: 2-5 specialist hires (ML engineers, data engineers, security architects) at $150K-250K each.

- Infrastructure: GPU clusters for training, telemetry data storage.

- Training and change management: 20-30% of security spending.

Quantifiable Benefits

ROI comes from:

Direct cost savings: An average of $2.4M in avoided breach costs (IBM Cost of a Data Breach Report).

Operational efficiency: 60-70 percent lower incident costs through automation.

Analyst productivity: 74 percent of analyst hours recovered in mature deployments and redirected to high-value work.

Risk mitigation: A 36 percent reduction in annual risk exposure with AI-enhanced detection.

Reduced dwell time: Attackers contained in minutes rather than days (industry average breach dwell time: 280 days).

Payback Period

Organizations typically achieve positive ROI within 12-24 months, depending on:

- Current security maturity (low maturity often pays off sooner, because the efficiency gains are larger).

- Industry risk profile (high-risk industries see better returns from prevented attacks).

- Implementation quality (well-executed deployments reach ROI faster).

To estimate break-even, divide total three-year costs by the combined prevented breach costs and operational savings.
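The ratio above gives a rough figure. A slightly more granular alternative is a month-by-month payback calculation that tracks cumulative net benefit against the upfront cost. The sketch assumes running costs and benefits accrue evenly each month, and every figure in the example is hypothetical.

```python
def payback_month(upfront_cost, annual_run_cost, annual_benefit, horizon_months=36):
    """First month in which cumulative benefits cover cumulative costs, or None.

    Assumes running costs and benefits accrue evenly each month (a simplification).
    `annual_benefit` combines prevented breach costs and operational savings.
    """
    monthly_net = (annual_benefit - annual_run_cost) / 12
    cumulative = -upfront_cost
    for month in range(1, horizon_months + 1):
        cumulative += monthly_net
        if cumulative >= 0:
            return month
    return None


# Hypothetical figures: $1.2M upfront, $600K/year to run, $1.8M/year in combined benefits.
print(payback_month(1_200_000, 600_000, 1_800_000))  # 12
print(payback_month(1_200_000, 600_000, 900_000))    # None (no payback within 36 months)
```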

Emerging Trends and Future-Proofing Strategies

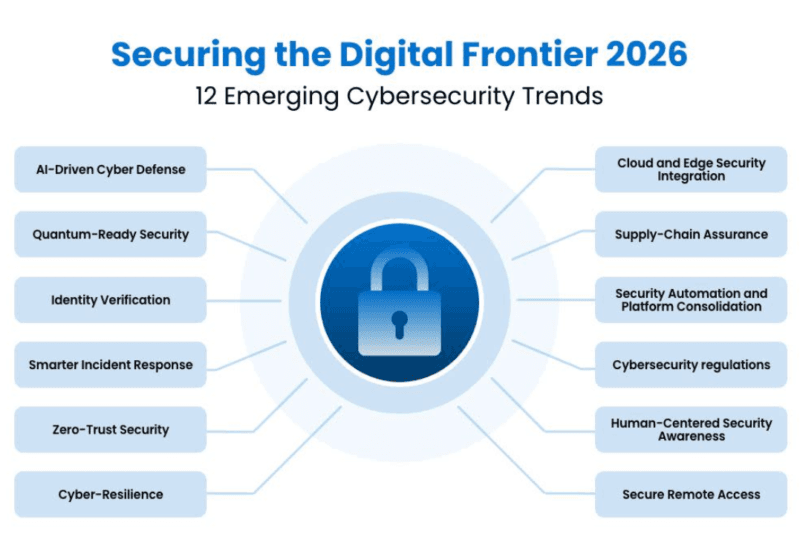

The AI security landscape is changing fast. Future-proofing means staying ahead of the trends.

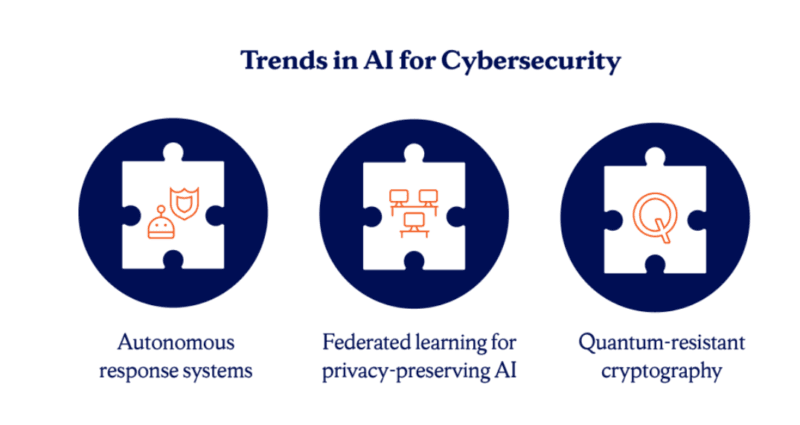

What’s Coming in 2026-2027

Generative AI for defense: Large language models assist with threat hunting, write investigation queries, and automate report writing.

Autonomous SOC tasks: AI agents handle end-to-end incident response with little or no human intervention.

Predictive threat modeling: ML anticipates likely attack vectors before attacks happen.

Zero-trust AI architectures: Continuous verification and least-privilege access enforced independently on every AI agent and model.

Federated learning: Training models across distributed data while keeping sensitive data local (privacy-preserving ML).
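The core aggregation step of federated learning, Federated Averaging (FedAvg), fits in a few lines: each site trains locally and shares only its model weights, which are averaged in proportion to how much data each site holds. The sketch treats a model as a flat list of floats and uses hypothetical sites; real frameworks add secure aggregation, multiple rounds, and much larger models.

```python
def federated_average(client_updates):
    """FedAvg: average model weights from several sites, weighted by local sample count.

    `client_updates` is a list of (weights, num_samples) pairs, where weights is a flat
    list of floats. Only weights leave each site; raw logs stay local.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]


# Two hypothetical sites with a two-parameter model.
site_a = ([1.0, 2.0], 100)
site_b = ([3.0, 4.0], 300)
print(federated_average([site_a, site_b]))  # [2.5, 3.5]
```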

Quantum Threat Preparations

Quantum computing is expected to eventually break today’s widely used public-key encryption (such as RSA and ECC).

Organizations should:

- Monitor NIST post-quantum cryptography standardization.

- Inventory systems that rely on quantum-vulnerable algorithms (RSA, ECC).

- Plan transition timelines (expect a 5-10 year migration).

- Test quantum-resistant algorithms in non-production environments.

AI systems deployed today should be cryptographically agile, designed so that one algorithm can replace another without completely retraining the model.

Regulatory Landscape

Expect tighter regulation:

- EU AI Act: Risk-based compliance requirements for AI systems.

- NIST AI Risk Management Framework: Voluntary standards becoming de facto requirements.

- Sector-specific requirements: Finance, healthcare, and critical infrastructure converging on common AI security measures.

Build compliance into your architecture from the start. Retrofitting is costly and disruptive.

Getting Started: Your First 90 Days

Ready to implement AI-based defense? Here’s a practical roadmap.

Days 1-30: Assess and Plan

- Audit your current security posture (existing tools, data sources, gaps).

- Map and document your threat model using MITRE ATT&CK.

- Record baseline metrics (current MTTD, MTTR, false positive rate).

- Set success criteria (target improvements).

- Define the pilot scope (business unit, threat type, asset group).

Days 31-60: Deploy Pilot

- Select a vendor or open-source platform.

- Integrate initial data sources.

- Train baseline models

- Configure initial detection rules.

- Develop response playbooks

- Train pilot team

Days 61-90: Measure and Refine

- Measure pilot performance against the success criteria.

- Gather analyst feedback

- Tune the models to reduce false positives.

- Document lessons learned

- Present a business case to management.

- Decide whether to expand enterprise-wide or adjust the strategy.

This approach keeps both cost and risk low.

Final Thoughts

Properly implemented AI-powered cybersecurity has been shown to cut detection times to under an hour, bring false positive rates below 5 percent, and raise analyst productivity by 60-70 percent compared with traditional security operations.

Organizations that achieve these results share common traits: they prioritize data quality, maintain human oversight, retrain models continuously, and measure performance rigorously.

Execution discipline is what separates successful AI security from failed implementations. Technology alone does not protect networks. Effective defense comes from quality data, well-tuned models, and experienced analysts working under sound governance.

Start small, test everything, and expand based on proven results. That is how AI transforms security operations from reactive firefighting into proactive threat prevention.

I’m a technology writer with a passion for AI and digital marketing. I create engaging, useful content that bridges the gap between complex technology concepts and everyday understanding. My writing makes technical topics approachable, sparks curiosity, and encourages participation. I continue to research innovation and technology. Let’s connect and talk technology!