Look, implementing AI for cybersecurity is not like flipping a light switch. I have seen teams rush into deployment believing they will solve alert fatigue overnight, only to introduce new problems: shadow AI, model drift nobody is watching, and governance gaps that make auditors nervous.

The reality? Organizations with AI-powered security operations centers identify breaches 98 days faster and spend about $2.2 million less per incident than those relying on manual approaches. Getting there, however, takes a disciplined implementation process in six phases, each with its own controls, milestones, and gotchas that can derail your schedule.

This guide walks you through the full implementation lifecycle, from pre-implementation readiness through continuous improvement cycles, with real-world experience of what works and what does not when you deploy AI security tools in practice. Whether you are a security engineer evaluating vendors or a CISO planning next quarter's roadmap, this step-by-step plan marks the difference between a successful rollout and a pilot that breaks the bank.

Phase 0: Assessment and Readiness Evaluation

Organizations need an unsparingly honest assessment of where they stand before buying any AI security platform. This phase usually takes 2-4 weeks and makes clear whether you can proceed to AI deployment or need to do foundational work first.

Current Security Posture Analysis

Begin by mapping your current security setup. What tools are already deployed? SIEM, EDR, firewalls, cloud security: inventory everything that produces security telemetry. AI systems need quality input data, and if your existing detection tools are poorly configured or poorly integrated, you are simply adding AI on top of your existing blind spots.

Key questions to answer:

- Are endpoint, network, identity, and cloud logs collected centrally?

- Are the existing detection tools tuned appropriately, or are they burying analysts in noise?

- What are your mean time to detect (MTTD) and mean time to respond (MTTR)?

- Can your infrastructure handle the additional data processing and storage load?

Record concrete baseline metrics, such as detection rate, investigation time, and analyst workload, because you will need them later to measure the impact of AI.
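As a minimal sketch of how those baseline figures might be captured, the snippet below computes MTTD and MTTR from incident timestamps. The record layout (`occurred`, `detected`, `resolved`) and the sample values are hypothetical, and MTTR is measured here from detection to resolution, which is only one of several definitions in use.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident records; field names and values are illustrative only.
incidents = [
    {"occurred": "2024-03-01T08:00:00", "detected": "2024-03-01T20:00:00",
     "resolved": "2024-03-02T02:00:00"},
    {"occurred": "2024-03-05T10:00:00", "detected": "2024-03-06T10:00:00",
     "resolved": "2024-03-06T20:00:00"},
]


def hours_between(start, end):
    """Elapsed hours between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 3600


def mean_time_to_detect(records):
    """Average hours from the start of malicious activity to detection."""
    return mean(hours_between(r["occurred"], r["detected"]) for r in records)


def mean_time_to_respond(records):
    """Average hours from detection to resolution (an assumed definition)."""
    return mean(hours_between(r["detected"], r["resolved"]) for r in records)


print(f"MTTD: {mean_time_to_detect(incidents):.1f} h")
print(f"MTTR: {mean_time_to_respond(incidents):.1f} h")
```

Capturing these numbers before deployment gives you a like-for-like comparison once AI-assisted detection is running.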

Data Availability and Quality Assessment

AI models are only as good as their training data. Yet organizations often discover that they lack sufficient historical security data, or that what they have is incomplete or siloed in fragmented systems.

In one implementation I observed, a retail company had five years of firewall logs but only six months of endpoint telemetry, nowhere near enough variety to train quality anomaly detectors. It took another three months of collecting comprehensive data to establish the baseline needed to continue.

Assess your data on three dimensions:

Volume: Do you have enough historical data (usually 6-12 months or more) across all security domains?

Quality: Is the data complete, structured, and timely? Missing fields and inconsistent labeling degrade model performance.

Diversity: Do your datasets cover a range of attack types, user patterns, network conditions, and operating scenarios? Biased training data produces biased detectors.

If data quality is poor, plan remediation (better collection procedures, data engineering work, synthetic data generation for rare threat scenarios) before proceeding to vendor selection.

Organizational Buy-in and Stakeholder Alignment

Most AI security implementations fail not for technical reasons but because of organizational resistance. Security teams fear AI will be a job killer. Analysts do not trust black-box recommendations. Leadership demands immediate payback. IT operations worries that new tools will disrupt production systems.

Successful implementations form an AI Security Council at the very beginning, with representatives from security operations, data science, IT infrastructure, legal/compliance, and business leadership. This cross-functional team sets governance policies, approves use cases, and resolves tensions between the pace of innovation and security requirements.

Core alignment activities:

- Define specific success metrics that everyone commits to (not just "reduce false positives").

- Create an approval and escalation process for AI systems.

- Document the risk tolerance for AI-driven automated responses.

- Develop communication plans that explain AI capabilities and limitations to all stakeholders.

Obtain explicit executive sponsorship. AI cybersecurity needs long-term investment in tools, training, and talent; without top management support, projects lose momentum quickly.

Skills Gap Analysis and Training Needs

Cybersecurity faces an acute shortage of professionals with combined security and AI/ML expertise. In most organizations, security analysts know security fundamentals but not machine learning, while data scientists know how to build models but are unfamiliar with attack methods and compliance standards.

Conduct a candid skills assessment:

- Security Analysts: Do they have a basic grasp of ML concepts such as supervised learning, model accuracy, and overfitting?

- Data Scientists: Are they familiar with common attack patterns, threat modeling, and security frameworks?

- AI Engineers: Can they implement security controls for model integrity and data protection?

- Leadership: Do they understand AI risks and limitations, as well as governance requirements?

Plan targeted training programs. Free resources such as NIST's AI Risk Management Framework courses, the EC-Council AI cybersecurity toolkit, and platform-specific training can close knowledge gaps without a large training budget. For background concepts, see our AI-Powered Cybersecurity: Complete Guide.

This phase will surface uncomfortable truths. Organizations often find they need 3-6 months of foundational work before they are ready to adopt AI security tools.

Phase 1: Vendor Selection and Pilot Deployment

With readiness established, Phase 1 focuses on selecting the right AI security platform and running a controlled pilot to validate capabilities before enterprise-wide deployment. Timeline: 6-10 weeks.

Evaluating AI Security Tools and Platforms

The AI security market is full of vendors making far-fetched claims. Some offer point solutions (AI-powered phishing detection, for example), while others offer integrated platforms that combine threat detection, incident response automation, and predictive analytics.

Evaluation criteria should include:

Technical Features: Does the platform support your top-priority use cases (anomaly detection, automated triage, threat hunting)? Which ML algorithms does it use? Does it fit into your current security infrastructure?

Explainability: Does the system explain why it flagged a threat? Black-box models raise trust concerns and make incident investigation harder. Look for platforms with model interpretability features.

Data Requirements: How much data, and what kind, does the platform need for training? Some vendors need 12 or more months of historical data; others can bootstrap from smaller datasets using transfer learning.

Deployment Model: Is it cloud-based, on-premises, or hybrid? Consider data residency needs, compliance constraints, and capital expenditure.

Vendor Maturity: How long has the vendor been deploying AI security solutions? Ask for customer references in your industry with a similar threat profile.

Support and Training: What implementation support, analyst training, and ongoing optimization services does the vendor offer?

Demand proof-of-concept trials on your real security data rather than sanitized demo environments. In my experience, some vendors that performed well on generic datasets struggled with organization-specific network patterns and legacy system telemetry.

Requirements Definition and Procurement

Translate stated business objectives into technical requirements. Rather than abstract targets such as "improve threat detection," specify measurable outcomes:

- Reduce false positive alerts by 60 percent within six months.

- Reduce the mean time to detect advanced persistent threats to less than 7 days.

- Automate triage and initial investigation for 80 percent of low-severity alerts.

- Increase SOC analyst productivity by 40 percent, measured as cases closed per analyst.

Document integration requirements precisely: which SIEMs, EDR platforms, cloud security solutions, ticketing systems, and threat intelligence feeds must the AI platform integrate with? Integration incompatibilities discovered after purchase can take months to resolve.

Address compliance and data governance requirements early. Where will training data and models be stored? How will you protect sensitive operational information? What audit logging is required? Legal and compliance teams should review these questions before procurement, not after.

Pilot Program Scoping and Success Criteria

Limit initial pilots to well-defined use cases with clear success criteria. Do not try to apply AI to every area of security at once; prove it works in one place, then expand.

Strong pilot candidates:

- Alert Triage: AI classifies and prioritizes SIEM alerts by severity and threat type.

- Phishing Detection: AI detects business email compromise attacks by analyzing email patterns.

- Anomaly Detection: AI learns normal user and network behavior and alerts on deviations.

Define what pilot success looks like:

- Quantitative: Percentage reduction in false positives, detection rate, investigation time saved.

- Qualitative: Fit with existing workflows, ease of use, analyst satisfaction.

Set a realistic timeline: as a rule, 6-8 weeks for initial deployment and configuration, followed by 4-6 weeks of testing. Rushed pilots produce unreliable results; pilots that run too long lose organizational momentum.

Initial Integrations with Existing Tools

AI security platforms are not standalone systems. They need bidirectional integration with your existing security infrastructure to ingest telemetry and trigger response actions.

Priority integrations for most pilots:

- SIEM: Forward security events and alerts to the AI platform.

- EDR/XDR: Feed AI threat detection and enable automated containment.

- Threat Intelligence Feeds: Enrich AI analysis with current threat actor tactics and indicators.

- SOAR Platform: Trigger automated response and investigation playbooks.

- Ticketing System: Create, update, and close incident tickets based on AI recommendations.

Test integrations thoroughly in non-production systems before rolling out to production. I have worked with several platforms whose "native integrations" required extensive custom API development and ongoing maintenance, so verify integration maturity during the proof-of-concept stage.

Expect integration failures. Legacy systems may lack modern APIs, cloud services may impose rate limits, and data formats may need custom transformations. Budget extra time for integration work.

Phase 2: Data Preparation and Model Training

With the pilot platform in place, Phase 2 focuses on preparing high-quality training data and building the initial AI models. This phase determines model accuracy and effectiveness; rush it, and you will spend months on model adjustments later. Timeline: 4-8 weeks.

Collecting Historical Security Data

AI models learn from historical data. Organizations need comprehensive data covering normal operations, known security incidents, and a variety of threat scenarios.

Key data sources to collect:

- Network traffic logs (firewall, proxy, DNS, NetFlow)

- Endpoint telemetry (process executions, file modifications, registry changes)

- Identity and access logs (authentication attempts, privilege escalations)

- Application logs (web servers, databases, cloud services)

- Threat intelligence (known malicious IP addresses, domains, file hashes)

- Incident history (past breaches, investigations, root causes)

Aim for at least 6-12 months of clean historical data. More data generally improves model accuracy, provided it is well labeled and reflects current operating patterns.

Address data retention and privacy requirements. Some industries have strict limits on how long security data can be stored and where it can be processed. Make sure your data collection and pipelines comply with the applicable regulations (GDPR, HIPAA, etc.), or the project will stall.

Data Cleaning and Normalization Processes

Raw security data is messy: partial records, inconsistent formats, duplicate records, timestamp errors. Models trained on dirty data produce unreliable results, which is why data cleaning takes up so much of the effort in this phase.

Common data quality issues:

- Missing Values: Log records with fields missing because of collection errors or system failures.

- Format Inconsistencies: The same type of data is represented differently across systems (timestamps, IP addresses, usernames).

- Duplicates: Multiple systems record the same event, artificially inflating patterns.

- Labeling Errors: Historical events that are mislabeled or lack ground-truth labels.

Build automated data validation pipelines that:

- Convert timestamps to a single time zone and format.

- Normalize usernames, hostnames, and IP addresses to standard representations.

- Remove or impute missing data using statistical methods or domain knowledge.

- Remove duplicate events while preserving legitimate repeated patterns.

- Check data completeness and flag anomalies for manual inspection.
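The pipeline steps above could look roughly like the following sketch. The field names (`timestamp`, `user`, `host`, `src_ip`) are hypothetical, timestamps are assumed to be ISO 8601 with a numeric offset, and naive timestamps are assumed to already be in UTC; a real pipeline would need source-specific parsers and an imputation step, which is omitted here.

```python
from datetime import datetime, timezone

FIELDS = ("timestamp", "user", "host", "src_ip")  # hypothetical schema


def _clean_text(value):
    """Lowercase and trim a text field; empty or missing becomes None."""
    text = (value or "").strip().lower()
    return text or None


def normalize_event(raw):
    """Return the event with a UTC timestamp and normalized identifiers."""
    ts = None
    if raw.get("timestamp"):
        parsed = datetime.fromisoformat(raw["timestamp"])
        if parsed.tzinfo is None:
            # Assumption: timestamps without an offset are already UTC.
            parsed = parsed.replace(tzinfo=timezone.utc)
        ts = parsed.astimezone(timezone.utc).isoformat()
    return {
        "timestamp": ts,
        "user": _clean_text(raw.get("user")),
        "host": _clean_text(raw.get("host")),
        "src_ip": _clean_text(raw.get("src_ip")),
    }


def clean_events(raw_events):
    """Split events into deduplicated records and records needing review."""
    seen, cleaned, needs_review = set(), [], []
    for raw in raw_events:
        event = normalize_event(raw)
        if any(event[f] is None for f in FIELDS):
            needs_review.append(event)  # incomplete: flag for manual inspection
            continue
        key = tuple(event[f] for f in FIELDS)
        if key not in seen:  # drop the same event reported by several systems
            seen.add(key)
            cleaned.append(event)
    return cleaned, needs_review
```

Because deduplication keys on the normalized timestamp, the same action repeated at a different time is kept, which preserves legitimate recurring patterns.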

Organizations typically find that a quarter to a third of raw security data needs extensive cleaning before it is usable for model training. Budget accordingly.

Establishing Baselines for Anomaly Detection

AI anomaly detection works by learning what normal behavior looks like and then flagging deviations. Getting the baseline right is critical: define "normal" incorrectly, and the model will either flag legitimate business activity as a threat or miss real attacks.

Establish baselines at several levels:

- User Behavior: Typical login times, resources accessed, data transfer volumes per user role.

- Network Patterns: Normal traffic flows between systems, usual protocols and ports, expected bandwidth use.

- System Activity: Routine process executions, scheduled job windows, maintenance windows.

- Application Behavior: API call patterns, types of database queries, error rates.

Account for operational variation. Baselines should capture legitimate variation: weekday versus weekend activity, month-end processing spikes, seasonal business cycles. Overly rigid baselines will generate excessive false positives whenever normal business operations vary.

Validate baselines with security analysts and IT operations teams. They can tell whether the captured patterns reflect genuinely normal operations or contain traces of historical compromise that should not be treated as normal.
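To illustrate why baselines need to capture operational variation, here is a small sketch that keeps separate weekday and weekend baselines for a user's daily data transfer and flags values by z-score. The data, the 3.0 threshold, and the use of a simple mean and standard deviation are assumptions for illustration; commercial platforms typically use richer models.

```python
from collections import defaultdict
from datetime import date
from statistics import mean, pstdev


def day_kind(day):
    """Classify a date as 'weekday' or 'weekend'."""
    return "weekend" if day.weekday() >= 5 else "weekday"


def build_baseline(history):
    """history: iterable of (user, date, megabytes_transferred) tuples."""
    buckets = defaultdict(list)
    for user, day, megabytes in history:
        buckets[(user, day_kind(day))].append(megabytes)
    # Require at least two observations before trusting a baseline.
    return {k: (mean(v), pstdev(v)) for k, v in buckets.items() if len(v) >= 2}


def is_anomalous(baseline, user, day, megabytes, z_threshold=3.0):
    """Flag values more than z_threshold standard deviations from normal."""
    stats = baseline.get((user, day_kind(day)))
    if stats is None:
        return False  # no baseline yet; a real system might route to review
    mu, sigma = stats
    if sigma == 0:
        return megabytes != mu
    return abs(megabytes - mu) / sigma > z_threshold


# Hypothetical history: busy weekdays, quiet weekends.
history = [
    ("alice", date(2024, 3, 4), 100), ("alice", date(2024, 3, 5), 110),
    ("alice", date(2024, 3, 6), 90), ("alice", date(2024, 3, 7), 105),
    ("alice", date(2024, 3, 8), 95),
    ("alice", date(2024, 3, 9), 5), ("alice", date(2024, 3, 10), 15),
]
baseline = build_baseline(history)
print(is_anomalous(baseline, "alice", date(2024, 3, 11), 105))  # Monday
print(is_anomalous(baseline, "alice", date(2024, 3, 16), 105))  # Saturday
```

The same 105 MB transfer is unremarkable on a Monday but stands out on a Saturday, which a single pooled baseline would blur.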

Initial Model Training and Validation

With clean data and baselines in place, train initial AI models for your pilot use cases. Most platforms use either supervised learning (trained on labeled examples of threats and benign activity) or unsupervised learning (detecting outliers without labeled examples).

Model training workflow:

- Split Data: Divide historical data into training (70-80%) and validation (20-30%) sets.

- Feature Engineering: Choose which attributes of the data (features) the model will analyze, such as IP addresses, user behaviors, and process executions.

- Algorithm Selection: Choose algorithms suited to the use case, such as decision trees, neural networks, or clustering algorithms.

- Training: Feed the training data to the model so it learns threat patterns.

- Validation: Measure model performance on the held-out validation set.

Key performance metrics to monitor:

- Precision: What share of the alerts the model generates are real threats? (Low precision = high false positives)

- Recall: What percentage of the real threats in the dataset does the model identify? (Low recall = missed threats)

- F1 Score: The harmonic mean of precision and recall, balancing the two.

- False Positive Rate: The percentage of benign activity that is wrongly flagged as a threat.
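For reference, these metrics can be computed directly from validation-set counts. The sketch below uses the standard definitions; the function name and the sample counts are illustrative only.

```python
def detection_metrics(tp, fp, fn, tn):
    """Compute standard detection metrics from confusion-matrix counts.

    tp: threats correctly flagged      fp: benign activity wrongly flagged
    fn: threats missed                 tn: benign activity correctly ignored
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    false_positive_rate = fp / (fp + tn) if (fp + tn) else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positive_rate": false_positive_rate,
    }


# Illustrative counts from a hypothetical validation run.
for name, value in detection_metrics(tp=80, fp=20, fn=20, tn=880).items():
    print(f"{name}: {value:.3f}")
```

Note that precision and false positive rate answer different questions: precision is measured over the alerts raised, while false positive rate is measured over all benign activity.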

Initial models will likely need extensive tuning. In my experience, first-pass models reach 70-80% accuracy, which is good enough to reduce analyst workload but not ready for production without further optimization. Iterate through training cycles, adjusting features and thresholds based on validation results.

For more detail on optimizing models and managing them continuously, see our guide on AI Cybersecurity Best Practices.

Phase 3: Deployment and Tuning

Phase 3 moves the AI models from testing into production. This step requires a careful rollout and constant tuning to balance detection effectiveness against operational disruption. Timeline: 8-12 weeks.

Phased Rollout to Detection Layers

Do not switch AI on across all security monitoring on day one. A gradual rollout limits the blast radius if models behave unexpectedly and gives analysts time to adapt to AI-augmented workflows.

A suggested rollout plan:

- Week 1-2: Monitoring-only deployment. AI generates alerts but triggers no automated actions; analysts review all recommendations.

- Week 3-4: Automate triage of low-severity alerts. AI classifies and ranks; human analysts still investigate.

- Week 5-8: Enable automated response for specific low-risk scenarios, such as account lockouts on suspicious logins or host isolation when there is evidence of malware activity.

- Week 9-12: Expand automation coverage based on confidence levels and feedback.

Monitor closely at every stage. Track alert volume, false positive rates, analyst investigation time, and threat detection rates. Move to the next stage only after meeting predefined success criteria.

Rollouts phased by geography or organizational unit also reduce risk: launch in one business unit or region, validate, then extend to other segments.

Alert Rule Configuration and Threshold Tuning

AI models raise alerts when activity exceeds preset thresholds or matches patterns the model has learned to treat as possible threats. Initial threshold settings are educated guesses; production tuning refines them based on actual operational feedback.

Tuning typically addresses:

Sensitivity Thresholds: How much deviation from baseline triggers an alert? Too sensitive creates alert fatigue; too permissive misses threats. Tune thresholds iteratively, measuring the effect on detection rates and false positives.

Confidence Scoring: Most platforms assign a confidence score (0-100%) to each alert. Set minimum confidence levels that determine which alerts analysts investigate in real time and which are batched for periodic review.

Contextual Rules: Add context to AI-generated alerts: suppress alerts tied to routine maintenance, escalate alerts on high-value assets, and correlate with threat intelligence.

Time-Based Adjustments: Use different alert sensitivities for business hours versus nights and weekends, when less legitimate activity takes place.

Document every threshold change and the reason for it. Undocumented tuning becomes tribal knowledge that evaporates when team members leave.
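A rough sketch of how confidence scoring and contextual rules might combine into a routing decision is shown below. The threshold of 80, the boost of 15 for high-value assets, and the three outcome labels are all hypothetical values chosen for illustration, not settings from any particular platform.

```python
def route_alert(confidence, high_value_asset=False, in_maintenance_window=False,
                realtime_threshold=80, asset_boost=15):
    """Decide how an alert is handled based on confidence and context.

    confidence: platform-assigned score from 0 to 100.
    Returns one of "suppress", "investigate_now", or "batch_review".
    """
    if in_maintenance_window:
        # Contextual rule: silence alerts tied to routine maintenance.
        return "suppress"
    # Contextual rule: escalate alerts on high-value assets.
    score = min(100, confidence + (asset_boost if high_value_asset else 0))
    if score >= realtime_threshold:
        return "investigate_now"
    return "batch_review"


print(route_alert(70))                              # batch_review
print(route_alert(70, high_value_asset=True))       # investigate_now
print(route_alert(95, in_maintenance_window=True))  # suppress
```

Keeping the thresholds as named parameters, rather than buried constants, makes each tuning change easy to record alongside its justification.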

False Positive Reduction Through Iteration

Even well-tuned AI systems produce false positives. The goal is not zero false positives (which would inevitably mean missing real threats too) but a manageable false positive rate that does not overwhelm analysts.

False positive reduction strategies:

Root Cause Analysis: For each false positive, identify why the model raised the alert: an overly broad detection rule, missing baseline context, or insufficient feature learning.

Exclusion Rules: Create narrow exclusions for known-good activity, such as scheduled backup jobs, authorized scanning tools, and legitimate third-party services, while avoiding broad exclusions that create detection blind spots.

Model Retraining: Add false positive samples to the training datasets so the model learns to distinguish legitimate activity from threats.

Ensemble Methods: Use more than one detection model; when the models disagree, require further review before raising an alert.

Track false positive trends. Initial deployments typically see false positive rates of 30-50%, which can be brought down over time to 5-10%. If false positives do not decrease after 4-6 weeks of active tuning, investigate whether the underlying data quality or the model configuration is at fault.
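The ensemble idea described above can be sketched in a few lines: alert only when every detector agrees, and send disagreements for further review. The unanimous-vote rule and the outcome labels are assumptions made for this example; a weighted or majority vote would be an equally reasonable design.

```python
def ensemble_decision(votes):
    """Combine boolean verdicts from several independent detectors.

    votes: list of booleans, True meaning "this detector sees a threat".
    """
    if not votes:
        raise ValueError("at least one detector verdict is required")
    if all(votes):
        return "alert"          # every model agrees it is a threat
    if not any(votes):
        return "ignore"         # every model agrees it is benign
    return "needs_review"       # models disagree: check before alerting


print(ensemble_decision([True, True, True]))    # alert
print(ensemble_decision([True, False, True]))   # needs_review
print(ensemble_decision([False, False, False])) # ignore
```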

Analyst Feedback Incorporation

Security analysts are your key source of insight into AI model weaknesses and areas for improvement. They have domain knowledge that statistical analysis alone does not surface.

Set up feedback mechanisms:

- Alert Disposition Tracking: Analysts classify every AI-generated alert as true positive, false positive, or undetermined; aggregated dispositions reveal systematic issues.

- Missed Threat Reporting: A process for analysts to document threats the AI failed to identify.

- Periodic Review: Weekly sessions in which analysts discuss AI performance, problematic alert patterns, and needed improvements.

- Feature Requests: A channel for analysts to request new detection capabilities or enhancements.
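As one possible way to turn disposition tracking into something actionable, the sketch below aggregates analyst verdicts per detection rule and surfaces the rules with the highest false positive share. The rule names and the tuple layout are hypothetical.

```python
from collections import Counter, defaultdict


def false_positive_share_by_rule(dispositions):
    """dispositions: iterable of (rule_name, verdict) pairs.

    verdict is "true_positive", "false_positive", or "undetermined".
    Returns {rule_name: fraction of that rule's alerts marked false positive}.
    """
    counts = defaultdict(Counter)
    for rule, verdict in dispositions:
        counts[rule][verdict] += 1
    return {
        rule: tally["false_positive"] / sum(tally.values())
        for rule, tally in counts.items()
    }


# Hypothetical analyst dispositions collected over a review period.
dispositions = [
    ("unusual_login_hours", "false_positive"),
    ("unusual_login_hours", "false_positive"),
    ("unusual_login_hours", "true_positive"),
    ("unusual_login_hours", "false_positive"),
    ("dns_tunneling", "true_positive"),
    ("dns_tunneling", "undetermined"),
]
shares = false_positive_share_by_rule(dispositions)
for rule, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{rule}: {share:.0%} false positives")
```

A rule that dominates this list is a natural agenda item for the weekly review session.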

Take analyst feedback seriously. I have seen engineering teams dismiss analyst complaints about high false positives as a learning-curve issue, only to realize months later that the real problem was poor model architecture.

Organizations that actively incorporate analyst input reach production-ready performance 40-60% faster than organizations that treat AI as a black box.

Phase 4: Optimization and Scaling

With the pilot proven and the core use cases running steadily, Phase 4 extends AI to more security domains and operational contexts. Timeline: 3-6 months.

Expanding Automation Coverage

Early deployments typically automate low-risk, high-volume tasks: alert triage, basic investigation, routine containment actions. Scaling means extending automation to more complex scenarios while keeping appropriate human oversight.

Expansion candidates:

- Multi-Stage Investigations: AI gathers evidence from multiple sources (endpoint, network, cloud), correlates it, and produces complete incident summaries.

- Threat Hunting: AI proactively searches historical data for indicators of compromise based on new threat intelligence.

- Vulnerability Correlation: AI correlates detected anomalies with known vulnerabilities and suggests remediation priorities.

- Orchestrated Response: AI coordinates response across security tools, isolating endpoints, blocking network traffic, and revoking compromised credentials in orchestrated workflows.

Establish specific escalation guidelines for every automated action. High-risk responses (isolating critical servers, blocking entire network segments) should still require human approval, while low-risk ones (flagging suspicious files for detailed analysis) can run autonomously.
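One way to encode such escalation guidelines is a simple risk map consulted before any automated action runs. The action names below are hypothetical, and treating unknown actions as high risk is a design choice made for this sketch.

```python
# Hypothetical action names; risk tiers mirror the examples in the text.
ACTION_RISK = {
    "isolate_critical_server": "high",
    "block_network_segment": "high",
    "flag_file_for_analysis": "low",
}


def requires_human_approval(action):
    """Anything not explicitly marked low risk needs a human decision."""
    return ACTION_RISK.get(action, "high") != "low"


def dispatch(action, approved_by=None):
    """Run low-risk actions directly; hold others until someone approves."""
    if requires_human_approval(action) and approved_by is None:
        return f"queued for approval: {action}"
    return f"executed: {action}"


print(dispatch("flag_file_for_analysis"))
print(dispatch("block_network_segment"))
print(dispatch("block_network_segment", approved_by="soc-lead"))
```

Defaulting unlisted actions to the approval queue means a newly added automation cannot run autonomously until someone has deliberately classified it.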

Integrating Additional Data Sources

AI model accuracy improves with diverse, comprehensive data. Phase 4 brings in telemetry sources beyond those used in the initial deployment.

Common expansion sources:

- Cloud Security Logs: AWS CloudTrail, Azure Activity Logs, GCP Audit Logs.

- SaaS Application Data: Salesforce, collaboration platforms, Microsoft 365.

- Threat Intelligence Feeds: Commercial and open-source feeds with up-to-date threat actor tactics.

- Vulnerability Scanners: Continuous assessment data on exploitable vulnerabilities.

- Physical Security: Badge access logs, surveillance system alerts.

Each additional data source requires integration work (APIs, data format transformation, privacy review) but enriches the context available to the AI. Models built on network traffic analysis alone may miss account compromise, so adding identity logs enables detection of impossible travel and credential abuse patterns.
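One identity-log check of the kind mentioned above is flagging consecutive logins that are too far apart to be physically possible, often called impossible travel. The sketch below uses the haversine great-circle distance; the login record layout and the 900 km/h speed ceiling (roughly airliner speed) are assumptions, and real systems also have to account for VPNs and imprecise IP geolocation.

```python
from datetime import datetime
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in degrees."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = (sin(dlat / 2) ** 2
         + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def is_impossible_travel(login_a, login_b, max_speed_kmh=900):
    """True if moving between the two logins would exceed max_speed_kmh."""
    distance = haversine_km(login_a["lat"], login_a["lon"],
                            login_b["lat"], login_b["lon"])
    hours = abs((login_b["time"] - login_a["time"]).total_seconds()) / 3600
    if hours == 0:
        return distance > 0
    return distance / hours > max_speed_kmh


# Hypothetical logins: London, then New York one hour later.
london = {"time": datetime(2024, 3, 1, 9, 0), "lat": 51.5074, "lon": -0.1278}
new_york = {"time": datetime(2024, 3, 1, 10, 0), "lat": 40.7128, "lon": -74.0060}
print(is_impossible_travel(london, new_york))
```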

Expanding to New Use Cases

Successful pilot rollouts build the organizational trust needed to take on more security use cases.

High-value expansion areas:

Vulnerability Management: AI predicts which vulnerabilities are most likely to be exploited, based on your environment, attacker trends, and asset criticality, so you stop patching everything and patch what matters.

Endpoint Detection: AI analyzes endpoint telemetry to detect advanced malware, fileless attacks, and living-off-the-land techniques that signature-based methods miss.

Identity Threat Detection: AI analyzes user behavior to detect account compromise, insider threats, and privilege abuse.

Cloud Security Posture: AI continuously compares cloud configurations against best practices and controls, catching risky configurations before they can be exploited.

Data Loss Prevention: AI detects abnormal data exfiltration that indicates insider threats or compromised accounts.

Prioritize expansions according to organizational risk. Organizations facing frequent phishing attacks should expand email security AI first; cloud-heavy organizations should prioritize cloud security posture management.

Building Institutional Knowledge

As AI security systems mature, an organization needs a systematic knowledge capture process so that expertise is not held by just a few people.

Knowledge management activities:

- Playbook Documentation: Investigation and response playbooks that make use of AI capabilities.

- Training Programs: Onboarding for new analysts on AI-augmented workflows and tool capabilities.

- Lessons Learned: Regular reviews of what went well, what went wrong, and what can be improved.

- Configuration Management: Version-controlled documentation of all model configurations, including threshold settings and exclusion rules.

- Cross-Training: Several team members, not only the original implementer, should know how each AI system operates.

Organizations that neglect institutional knowledge pay for it when key team members leave: the AI systems remain in place, but no one knows how to operate them or troubleshoot them when something goes wrong.

Phase 5: Continuous Improvement

AI security is not a set-and-forget deployment. Threat landscapes change, business processes change, and models drift over time. Phase 5 establishes the processes that keep AI systems effective over the long term.

Regular Model Retraining Cycles

AI models degrade as operational patterns and threats evolve. Retraining on up-to-date data preserves detection accuracy.

Retraining cadence depends on how quickly the environment changes:

- High-Risk Systems: Continuous or monthly retraining, because threats evolve rapidly.

- Standard SOC Models: Quarterly retraining on the most recent 6-12 months of data.

- Stable Environments: Semi-annual retraining for relatively stable operational environments.

Introduce automated drift detection that alerts when model performance metrics fall below thresholds. Rising false positives, falling detection rates, and rising analyst override rates are all symptoms of drift that call for retraining.
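A very simple form of drift check compares current operational metrics against the values recorded at the last retraining. In the sketch below, the metric names, the sample values, and the 0.05 tolerance are hypothetical; production drift detection usually also looks at changes in the input data distribution.

```python
def drift_signals(baseline, current, tolerance=0.05):
    """Compare current metrics to a baseline and list symptoms of drift.

    Both arguments are dicts of rates between 0 and 1 with the keys
    "false_positive_rate", "detection_rate", and "analyst_override_rate".
    """
    signals = []
    if current["false_positive_rate"] - baseline["false_positive_rate"] > tolerance:
        signals.append("false positives rising")
    if baseline["detection_rate"] - current["detection_rate"] > tolerance:
        signals.append("detection rate falling")
    if current["analyst_override_rate"] - baseline["analyst_override_rate"] > tolerance:
        signals.append("analyst overrides rising")
    return signals


# Hypothetical metrics: recorded at last retraining vs. measured this month.
at_retraining = {"false_positive_rate": 0.08, "detection_rate": 0.92,
                 "analyst_override_rate": 0.10}
this_month = {"false_positive_rate": 0.15, "detection_rate": 0.90,
              "analyst_override_rate": 0.12}

signals = drift_signals(at_retraining, this_month)
if signals:
    print("Retraining recommended:", ", ".join(signals))
```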

Performance Metric Monitoring and Optimization

Monitor AI system performance against defined KPIs:

Detection Effectiveness: What share of real threats does the AI detect? Is it systematically missing any threat categories?

False Positive Rate: What percentage of alerts are false positives? Is the rate changing over time?

Analyst Efficiency: How much time is spent investigating AI-triaged alerts compared with manual hunting? What is the case closure rate?

Response Speed: What are the mean time to detect (MTTD) and mean time to respond (MTTR) for AI-detected versus manually detected incidents?

Business Impact: What are the financial savings from prevented breaches, reduced analyst workload, and faster incident detection and resolution?

Build dashboards that present these metrics to security leadership. Quantifiable business value is what justifies continued investment and expansion.

Threat Landscape Adaptation

Attackers keep developing techniques to evade detection. AI systems need to adapt to new threat patterns, attack methods, and exploited vulnerabilities.

Adaptation mechanisms:

- Threat Intelligence Updates: Continuously ingest new threat intelligence feeds; retrain models to recognize new attack patterns.

- Red Team Exercises: Run regular penetration tests and adversary simulations designed specifically to evade the AI; use the results to retrain models.

- Community Sharing: Join industry threat-sharing organizations; learn from other organizations' incidents.

- Generative AI Simulation: Use generative AI to build realistic attack simulations covering a range of attack types.

Watch the threats that affect your industry. Retail organizations should focus on payment card compromise detection, financial services on business email compromise protection, and healthcare on ransomware defense.

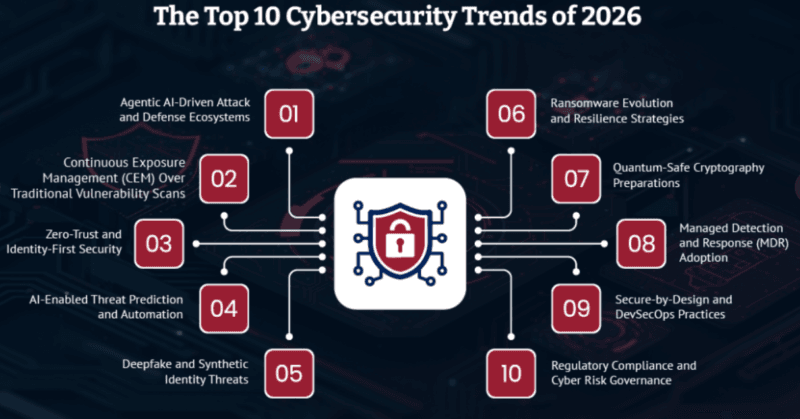

Emerging Capability Adoption

AI security evolves rapidly. New capabilities keep emerging that improve detection, automation, and efficiency.

Emerging capabilities worth tracking:

Explainable AI (XAI): Systems that can explain why something was flagged as a threat, improving analyst trust and investigation efficiency.

Autonomous SOC Agents: AI agents that run complex investigation playbooks on their own and escalate to humans only when a high-confidence conclusion is not possible.

Quantum-Resistant Security: AI-based systems that anticipate cryptographic vulnerabilities and implement post-quantum encryption.

Self-Patching Systems: AI that autonomously identifies vulnerabilities, tests fixes in controlled environments, and deploys them automatically.

Evaluate new capabilities against your operational requirements. Don't chase every new feature; pursue the capabilities that solve problems your team faces today.

Change Management: Organizational Alignment, Communications, Training

Organizational change is usually harder than the technical implementation. AI security fundamentally reshapes security operations workflows, the role of security analysts, and what is expected of security leaders. Change management determines whether technical capability translates into operational results.

Organizational Alignment

Form a cross-functional AI security council early, with members from security operations, IT infrastructure, data science, legal/compliance, and business leadership. This council:

- Defines AI implementation policies and standards.

- Approves deployments and usage requirements.

- Resolves conflicts between innovation and security needs.

- Oversees budget and resource planning.

- Reviews performance metrics and the roadmap.

Clear ownership and accountability keep AI implementations out of pilot purgatory: never-ending experimentation with no production commitment.

Communications Strategy

Open, ongoing communication reduces resistance and builds trust.

Key audiences and messages:

Security Analysts: AI augments your work; it does not replace you. You will focus on complex investigations that require human judgment, while AI triages the routine, repetitive cases.

IT Operations: AI security tools need infrastructure support (compute, network access, integration), and in return they reduce the overall impact of incidents on production systems.

Business Leadership: AI security delivers a measurable return on investment through faster breach detection, lower investigation costs, and a stronger security posture.

Compliance/Legal: AI systems are deployed with audit trails and governance procedures that support regulatory compliance.

Regular updates (monthly status reports, quarterly executive briefings, analyst town halls) keep stakeholders informed and engaged.

Training Programs

Analysts need training on AI-augmented workflows, platform-specific tools, and the limitations of AI systems.

Training components:

- Platform Operation: How to investigate AI-generated alerts, validate recommendations, and give feedback.

- AI Fundamentals: A general understanding of how machine learning works, why false positives occur, and why models need retraining.

- New Workflows: Updated incident response playbooks that incorporate AI automation.

- Escalation Procedures: When to rely on AI recommendations and when to apply human judgment.

Ongoing education, rather than one-time onboarding training, keeps skills current as AI capabilities advance.

Risk Management in AI Deployment

AI security can introduce new risks even as it mitigates others. Risk management addresses these proactively, before they turn into operational problems.

Adversarial Attacks Against AI Systems

Organizations should defend their AI models against adversarial attacks:

- Input Validation: Sanitize all inputs to AI systems; block patterns that suggest prompt injection or evasion attempts.

- Adversarial Testing: Red-team AI systems with adversarial inputs to find vulnerabilities before production deployment.

- Ensemble Methods: Use ensembles of models with different architectures; an attack that works against one model may not work against the others.

- Continuous Monitoring: Watch AI-generated outputs for anomalies that may indicate manipulation.
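The ensemble idea from the list above can be sketched as a simple voting scheme. The three detectors here are toy stand-ins (hypothetical rules over hypothetical event fields), used only to show the pattern; in practice each would be a separately trained model or detection engine.

```python
from typing import Callable, Dict, Sequence

Detector = Callable[[Dict], bool]


def ensemble_verdict(event: Dict, detectors: Sequence[Detector], min_agree: int = 2) -> bool:
    """Flag an event only when at least min_agree independent detectors agree."""
    votes = sum(1 for detect in detectors if detect(event))
    return votes >= min_agree


# Toy detectors for illustration; field names and cutoffs are assumptions.
def rule_based(event: Dict) -> bool:
    return event.get("failed_logins", 0) >= 10


def volume_based(event: Dict) -> bool:
    return event.get("bytes_out", 0) > 1_000_000_000


def geo_based(event: Dict) -> bool:
    country = event.get("country")
    return country is not None and country not in event.get("usual_countries", [])
```

Because each detector looks at a different signal, an attacker who suppresses one signal (for example, by throttling login attempts) still has to evade the others.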

Model Drift and Performance Degradation

AI models degrade over time as operational patterns change. Monitor performance and drift metrics, and raise an alert when they fall below threshold. Schedule periodic retraining to maintain effectiveness.

Shadow AI Proliferation

Unsanctioned AI (data scientists deploying untested systems, teams feeding sensitive data into public generative AI tools for analysis) creates governance gaps and policy violations. Introduce AI discovery and inventory tools that identify every deployed AI system, approved or not. Implement approval procedures for new AI deployments.

Data Privacy and Protection

AI systems that process sensitive organizational data must provide strong privacy controls:

- Anonymization and de-identification of data where possible.

- Encryption of data at rest and in transit.

- Access controls on which users can query the AI systems.

- Audit logging of every interaction with the AI system.
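One common way to approach the de-identification item above is keyed pseudonymization of sensitive fields before events reach the AI platform. The following is a minimal sketch using Python's standard hmac and hashlib modules; the field names and the 16-character token length are assumptions for the example. Note that this is pseudonymization, not full anonymization: anyone holding the key can re-link tokens to known values.

```python
import hashlib
import hmac
from typing import Dict, Iterable

# Hypothetical list of fields treated as sensitive in this example.
SENSITIVE_FIELDS = ("username", "src_ip")


def pseudonymize(event: Dict, key: bytes, fields: Iterable[str] = SENSITIVE_FIELDS) -> Dict:
    """Return a copy of the event with sensitive fields replaced by keyed tokens."""
    out = dict(event)  # shallow copy so the original record is left untouched
    for field in fields:
        if out.get(field) is not None:
            digest = hmac.new(key, str(out[field]).encode("utf-8"), hashlib.sha256)
            out[field] = digest.hexdigest()[:16]  # truncated for readability
    return out
```

The same input and key always produce the same token, so the model can still correlate activity by user or host without seeing the raw identifier.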

Compliance Considerations Throughout Implementation

AI security implementation has to align with the regulatory requirements of your industry and jurisdiction.

Regulatory Frameworks

Key frameworks and standards for AI cybersecurity compliance:

NIST AI Risk Management Framework (AI RMF): A risk management framework for AI systems built around four functions: Govern, Map, Measure, and Manage.

NIST Cybersecurity Framework (CSF) 2.0: Six core functions (Govern, Identify, Protect, Detect, Respond, and Recover) that apply to all cybersecurity programs.

ISO 42001: AI management system standard covering governance, transparency, and ethical impact assessment.

ISO 27001: Information security management standard that provides baseline technical controls.

EU AI Act: Legal requirements for high-risk AI systems operating in European markets.

Map controls to the relevant frameworks from Phase 0 onward; retrofitting compliance later is costly and disruptive.

Audit and Documentation Requirements

Maintain detailed audit trails of:

- All AI model training activities, datasets, and validation results.

- Configuration changes, threshold adjustments, and tuning operations.

- Automated response actions taken by AI systems.

- Human override decisions and their rationale.

- Performance metrics and drift detection results.

Audit-ready documentation demonstrates compliance during regulatory reviews and supports incident investigations.
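One possible shape for such an audit trail is an append-only log of JSON lines, one record per event. The sketch below is illustrative only: the event type names and record fields are assumptions derived from the list above, not a schema required by any of the frameworks mentioned.

```python
import json
from datetime import datetime, timezone
from typing import Dict, Optional

# Hypothetical event types mirroring the audit categories listed above.
EVENT_TYPES = {
    "model_training",
    "config_change",
    "automated_response",
    "human_override",
    "drift_result",
}


def audit_record(event_type: str, actor: str, details: Dict,
                 when: Optional[datetime] = None) -> str:
    """Build one JSON line for an append-only audit log."""
    if event_type not in EVENT_TYPES:
        raise ValueError(f"unknown audit event type: {event_type}")
    timestamp = (when or datetime.now(timezone.utc)).isoformat()
    record = {
        "timestamp": timestamp,
        "event_type": event_type,
        "actor": actor,       # person or system that performed the action
        "details": details,   # free-form context, e.g. the override rationale
    }
    return json.dumps(record, sort_keys=True)
```

A human override might be logged as audit_record("human_override", "analyst.jdoe", {"alert_id": "A-1", "reason": "known maintenance window"}), where the analyst name and alert ID are placeholders.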

Budget and Resource Planning

An AI security implementation requires investment in software licenses, infrastructure, personnel, and training.

Budget Components

Software Licensing: AI security platform fees (usually priced per user or per volume of data).

Infrastructure: Compute for model training and inference, storage for historical data, and network connectivity.

Professional Services: Vendor implementation services and external consultants for specialized expertise.

Training: Platform training, AI fundamentals courses, and continuous skills development.

Staff: Dedicated full-time specialists such as AI security engineers, data scientists, and program managers.

Industry benchmarks suggest that initial deployment (Phases 0-3) costs mid-market organizations $500K-$2M, with a further $200K-$500K per year for licensing, infrastructure, and staff. Large enterprises typically spend $2M-$10M+ on extensive deployments across multiple use cases.

ROI Considerations

Quantifiable ROI drivers:

- Cost Avoidance: $2.2M average savings per breach with AI-augmented detection and containment.

- Efficiency Improvement: 58% gain in SOC analyst operating efficiency.

- Staffing Optimization: 60% reduction in headcount growth through automation.

- Detection Speed: Breaches detected and contained 98 days faster.

Typical payback period: 12-24 months for mid-market organizations; 6-12 months for enterprises with higher breach costs.
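A simple payback calculation shows how these numbers interact. This sketch uses the plain definition of payback (upfront cost divided by net annual benefit) and ignores discounting; the input figures in the usage note are hypothetical and only chosen to fall inside the mid-market ranges quoted above.

```python
from typing import Optional


def payback_months(initial_cost: float, annual_benefit: float,
                   annual_running_cost: float) -> Optional[float]:
    """Months until cumulative net benefit covers the upfront investment.

    Returns None when the deployment never pays back, i.e. when running
    costs are equal to or greater than the annual benefit.
    """
    net_annual = annual_benefit - annual_running_cost
    if net_annual <= 0:
        return None
    return initial_cost * 12 / net_annual
```

For example, a hypothetical $1M initial deployment with $350K in annual running costs and $1.1M in estimated annual benefit gives payback_months(1_000_000, 1_100_000, 350_000), about 16 months. The benefit estimate is the weakest input, so it is worth running the calculation with pessimistic and optimistic values.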

Timeline Expectations and Milestone Planning

A realistic timeline across all six phases:

Phase 0 (Assessment): 2-4 weeks

- Week 1-2: Data analysis, security posture analysis.

- Week 3-4: Stakeholder alignment, skills gap analysis.

Phase 1 (Vendor Selection): 6-9 weeks

- Week 1-4: Vendor evaluation, requirements definition.

- Week 5-8: Pilot scoping, early integrations.

- Week 9-10: Finalization of procurement.

Phase 2 (Data Preparation): 4-8 weeks

- Week 1-3: Data collection, cleaning of historical data.

- Week 4-6: Baseline establishment.

- Week 7-8: Initial model training and validation.

Phase 3 (Deployment): 8-12 weeks

- Week 1-4: Testing, alert configuration.

- Week 5-8: False positive reduction.

- Week 9-12: Incorporation of analyst feedback.

Phase 4 (Optimization): 3-6 months

- Month 1-2: Automation expansion, additional data sources.

- Month 3-4: New use case implementation.

- Month 5-6: Institutional knowledge building.

Phase 5 (Continuous Improvement): Ongoing

- Quarterly model retraining cycles.

- Monthly performance reviews.

- Continuous threat landscape adaptation.

Total Timeline: 9-12 months from initial readiness assessment to full deployment with continuous improvement processes in place.

Key milestones:

- Month 1: Readiness assessment complete, vendor selected.

- Month 3: Pilot deployment operational.

- Month 5: Production rollout of first use cases.

- Month 9: Expanded automation and optimization.

- Month 12: Continuous improvement cycles in place.

Organizations in a hurry tend to cut corners, skip necessary tuning, and roll out systems that produce too many false positives, which leaves them a long way from production-ready performance.

Conclusion: From Deployment to Operational Excellence

AI cybersecurity implementation is not a project with a fixed end date but an ongoing operational process that requires long-term investment, constant adjustment, and organizational commitment. The organizations that thrive in 2026 are the ones that treat AI security as a core competency, like network architecture or identity management, and not as a pilot initiative forever in search of a payoff.

The six-phase model (assessment, vendor selection, data preparation, deployment, optimization, and continuous improvement) offers a systematic path from initial evaluation to an established operational capability. Success requires equal attention to technical implementation, organizational change management, and governance structures.

Begin with an honest readiness assessment. Don't rush vendor selection. Invest heavily in data quality. Deploy in stages with constant tuning. Scale based on proven success. And commit to continuous improvement: AI-driven threats evolve at machine speed, and static defenses are quickly defeated.

The results reflect the formula: organizations that achieve quantifiable outcomes, such as 98-day faster breach detection, $2.2 million in cost savings per incident, and 58% better analyst efficiency, follow this framework instead of chasing flashy AI features without proper preparation. Discipline in each of the six phases is the difference between a smooth deployment and a costly failure.

Ready to deploy? Start with Phase 0 this week. The sooner you assess your readiness honestly, the sooner you will reach production-grade AI cybersecurity for your organization.
