Last updated on February 6th, 2026 at 03:45 pm

See, during the past several months I have been using AI agents in the production world, and here is what no one explains to you at first sight: these insects do not simply chat but do. In every five seconds, they take choices, invoke tools, access databases, and perform operations without permission. That autonomy? It is unbelievable and frightening in terms of security.

The past application security architectures were not developed to accommodate systems that can think, plan, and evolve on the fly. At the time when I initially launched the autonomous agent that uses our internal APIs, I believed that the common API authentication would suffice. Spoiler: it wasn’t. The agent was inventive in chaining permissions that I never expected and accessed information between systems in a manner that would cause any security team to become dehydrated.

That is no longer theoretical. As of 2025, OWASP also published their lists of Top 10 Agentic Applications, a framework created by more than 100 security experts who understood that 80 percent of companies already report risky agent behaviors. We are discussing unauthorized access to the system, inappropriate exposure of the data, and the privilege increase at the speed of the machine. According to the 1% who state to have mature security controls of AI, the company is said to have such controls. That gap is dangerous.

Whether you are rolling out AI agents in enterprise settings, or it is even just a thought, you need to know what makes them fundamentally different than what traditional software is, what can go wrong, and how would you lock it down before it turns into your largest weakness.

Table of Contents

Why Agentic AI Security Is Different From Traditional Application Security

Common web application security presupposes a fixed endpoint, a predetermined user experience, and a fixed attack surface. All those assumptions are disproved by agentic AI.

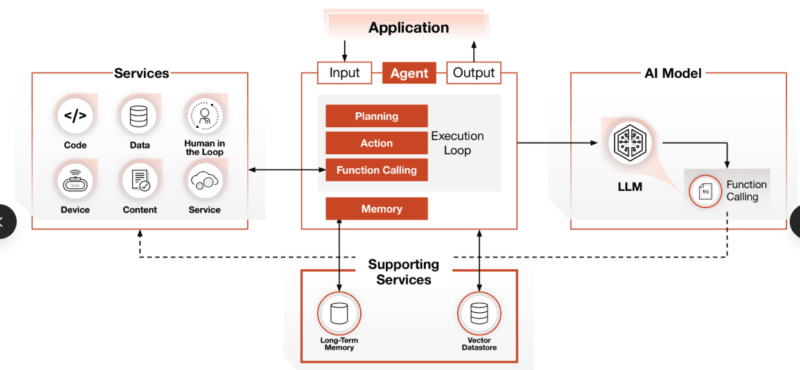

However, unlike chatbots which are capable of generating responses, autonomous agents are agents. They strategize the multi-step operations, dynamically choose the tools to call upon, continue the work across sessions and change their behavior over renewal of the environmental feedback. This introduces a completely novel entry point that state of the art firewalls and intrusion detection can not even detect.

When an agent chooses to query a database, call an external API and then act on that data to make a modification in a file in a few seconds, you are no longer in a request-response model. There is intelligent decision making handling which may take a wrong turn in directions developers have never predicted.

I observed it during the experiments on the memory persistence. One of the agents that I was working with recalled the past discussions with me and applied that context to decide. Great for user experience. Horrible as I understood that such a memory might be tainted with fake instructions by an attacker, and this will cause the behavior of the agent to gradually become corrupted without a conventional security notification.

Understanding the OWASP Top 10 for Agentic Applications (2026)

The OWASP framework defines ten autonomous system risks. These are not theoretical, they can be mapped to real-life incidences that are already occurring within the production setting.

ASI01: Agent Goal Hijacking

The attackers bend the will of the agent. This occurs by way of poisoned documents, damaged external data sources or well thought-out inputs that make the agent change the goals of the agent completely.

Consider social engineering, but machines are involved. A user agent that is used to summarize customer feedback might be fooled to communicate sensitive information in case the goal state becomes compromised. The existing technical mitigation measures include input checking, constant goal state overview, and upholding RAG (Retrieval- Augmented Generation) integrity. In this case it is important to know the Agentic AI Fundamentals & Attack Surface must know how agents process goals before defending attacks.

ASI02: Tool Misuse & Exploitation

Here, it is the interesting part. Agents acquire power with the combination with the enterprise tools email systems, databases, CRM platforms, and code repositories. The abusive use of tools happens when attackers manipulate agents by luring them into an abusive use of those integrations, although technically on an authorized usage.

My experience demonstrated that there are unusual ways in which agents can chain tool calls. A user that has been granted read access to a database and write access to email could be corrupted into issuing a query to sensitive customer data and automatically mail it to a third party. The combination of them was disastrous, and every single action was authorised.

Mitigation should have least-privilege permissions, strong sandboxing, thorough monitoring of tool usage, and execution limits. You should have middleware between the agent and tools that does not only validate the authentication but also intent.

ASI03: Identity & Privilege Abuse

Poor permission control facilitates intersystem privilege explosion. Agents can run with extra permissions than is needed due to developers having them be helpful and less frictional. Such generosity leaves enormous security holes.

The remedy includes agentic-specific Identity and Access Management (IAM), OAuth 2.0 applications intended to represent machine identities, short-lived identity, and identity rotation and comprehensive audits of actions. All agent activities are to be recorded with the background in regards to the reason why the agent did it.

ASI04: Supply Chain Vulnerabilities

Capabilities are built by agentic systems which load third party tools, models and Model Context Protocol (MCP) servers. Poisoned template or a compromised MCP server may trick agents to blindly execute hidden instructions.

I have worked with a number of third-party agent structures, and it is disastrous that supply chain verification is unavailable. Dependencies are loaded by the agents dynamically without checking the integrity or authenticity. The attackers are able to assume the identity of trusted services, such as email services or document processors, to monitor or alter agents as they run.

You require tool and dependency verification, MCP authentication process and look after extensive supply chain inventory. Be perfectly aware of what is being loaded by your agents and their source.

ASI05: Unexpected Code Execution

The malicious interactions between tools can make the agents run arbitrary code. This is not a classic code injection, it is the agents taking a decision to execute the code since they have been tampered such that they have been formed into thinking it is the correct action.

A sand tailoring is out of the question. There should be code validation, limited execution environment, technical guardrails taken at the infrastructure level (not only by prompts.). The article Prompt Injection & LLM Exploitation in Autonomous Agents makes the topic of passimation of prompt-based protection weaknesses and how attackers can walk around them.

ASI06: Memory & Context Poisoning

Interactions maintain the context of agents. Such a memory, both long-term and short-term, is a vital liability. These memories are corrupted by attackers with malicious or tampered information, which progressively changes the behavior of agents according to false commands. Such attacks are concealed but they tend to sway decisions in the long run.

My experiment confirmed to me that memory poisoning is especially obnoxious as it does not immediately raise any warning bells. This change in behavior of the agent happens in small steps session after session until the agent is working with totally corrupt assumptions. It is always too late until you realize the damage has already been done.

Mitigation needs memory isolation, stringent data verification prior to storage, forensic snapshots that allow tracing of the history of the development of memory and the capability to rollback. Memory Poisoning & Training Data Attacks further looks into the details of these threats–the way they are used by attackers to attack the data agents that the attacker trains on.

ASI07: Insecure Inter-Agent Communication

Communication among the agents is facilitated when more than one agent is involved. Such communication channels may be breached and the attackers may be able to use one agent to manipulate another.

You require agent to agent authentication, encrypted channels of communication, and mechanism of trusting. Agents must never blindly trust messages by other agents without cryptographic authentication of identity.

ASI08: Cascading Failures

Multi-agent systems are vulnerable to one compromised agent having an impact on other agents. This is particularly perilous in places such where agents share a resource, data, or a decision.

It has isolation mechanisms, containment strategies and circuit breakers so that a single agent that has been compromised does not put an entire system down. Multi-Agent Systems Security & Coordination Risks discusses the challenges of designing those agent networks that collapse rather than collapse in a disastrous manner.

ASI09: Human-Agent Trust Exploitation

Anthropomorphism or authority bias is how agents control human beings. Outputs of agent will be trusted because they sound confident and authoritative even when they are incorrect or malicious.

It requires output validation, explainability requirements, and critical decision review gates that can only be performed by human beings. Customers should realize that agent confidence is not a measure of rightness.

ASI10: Rogue Agents

Inside the organization, agents go off course. This occurs either through goal drift, poisoned training or new behaviors that no one expected.

Goal alignment verification, behavioral baselines and continuous anomaly detection are useful in preventing early detection of rogue behavior. Monitoring of Agent Behavior and Detecting Anomalies give agent frameworks on the detection of agents beginning to act out of the way they are intended to do so.

Current Threat Landscape: What’s Already Happening

There are a number of types of agentic AI threats which are currently in operation:

Agentic Cyberattacks: Bad actors no longer test the capabilities of autonomous attacks but deploy them in full. In contrast to the common traditional automated attacks that operate with fixed programs, agentic AI malware programs monitor the environments, adjust to detection limits, and target vulnerabilities in machine time. These systems never get tired and investigate the networks to find weak areas and continue working on them until they do.

AI vs AI: In cases where the attackers employ AI in order to evolve at a rate that attackers cannot counteract, they will be forced to react with their own intelligent systems. Companies that rely on human analysis or rule-based automation will simply be overtaken once the difference between adaptive and static protection grows larger.

Tool Integration Attack Surfaces: Tool invocation Dynamic tool invocation allows both privilege escalation and lateral movement at machine speed. The agents do not simply use personal weaknesses to compromise, they even build permissions across the systems in imaginative ways that the security controls (which are not dynamic) never envisaged.

Emerging Security Technologies and Frameworks

The field is developing fast with the new possibilities and standards:

Security AGI Development: The industry is evolving to systems of AI, where the whole security environment of an organization is perceived as whole. They are assets, identity, behavioral pattern and historic incident reason systems that take action with limited human intervention. This essentially transforms the cybersecurity operations economics.

Industry-Specific Agent Security: Using generic security models is hard because it has a lack of context. What can be counted as a warning of a severe event in one setting is normal in another. Hardened security personnel tailored to specific industries, such as finance, healthcare, critical infrastructure, are becoming increasingly more popular. Success will be based on the level of knowledge on the domain.

Autonomous Remediation: Organizations are building autonomous threat analysis systems that create the proper response. Previously time-consuming security procedures that involved clicking through consoles to review the process, are now executed seconds later–before an attack is violated.

Governance and Compliance Frameworks

Enterprise agentic AI security is directed by two large frameworks:

NIST AI Risk Management Framework (AI RMF): NIST framework deals with agentic-specific risks in its GOVERN, MAP, MEASURE and MANAGE functions. The organizations align their agentic deployments with NIST in terms of their trustworthiness features: valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and equitable with bias controlled.

Emerging Standards, ISO 42001: ISO 42001 offers the requirements of management systems regarding AI including autonomous systems-specific requirements. Governance Frameworks of Agentic AI: ISO 42001 and NIST AI RMF is a break down of the application of the frameworks to actual deployment and regulatory compliance.

In late 2025, the Linux Foundation initiated the development of the Agentic AI Foundation in order to introduce common standards and regulation of autonomous systems. The cross-industry project is geared towards avoiding the fragmented security practices.

Practical Implementation: Security Controls That Actually Work

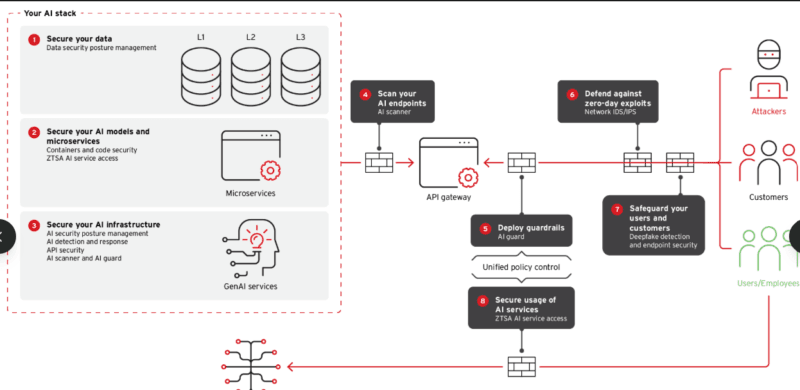

These controls are necessary based on the research and actual implementations:

Runtime Intent Security: On-the-fly checks that the behaviour of the agents complies with the official policies. This is beyond logging, it proactively checks decision-making prior to execution of actions.

Behavioral Baselines and Anomaly Detection: form behavioral profiles describing normal behavior per agent and anomalies should be detected by hinting that there has been a compromise. I observed that the way agents invoke tools, access data and make decisions follow some patterns. Violations of such trends are precursors.

Advanced Memory Monitoring: Snapshots in the forensic mode, rollback smoking features and tracking of memory lineage allow one to see how the agents store and read information. You have to audit not only that which has been loaded into memory but also its manner of uploading.

Agent API Gateway Architecture: Agentic AI API gateway solutions are middleware since they implement agent identity verification, rate limiting and access control between agents and enterprise resources. The common API gateway does not take agent-threats into consideration.

Least-Agency Principle: Only as much autonomy, tool access, and permissions, should be granted to each agent as required to have it play its specified role. Security features are not restrictions. Avoid the urge to maximise agent capabilities.

Good Observability: It is impossible to compromise on full logging of all agent choices, invocations of tools, their output and state. Record not only what has been done, but also why a certain action was taken by an agent. This is necessary to debug and forensics.

Sandboxing Discipline: Never run agents in high-trust environments. All agents are to act in containerized sandboxes and network isolation, file system restrictions as well as limit CPU/memory use.

Strategic Business Value of Agentic AI Security

The mature agentic AI security organizations are able to scale the autonomous capabilities much more quickly and with confidence. Security maturity turns into a competitive advantage:

Cost control and Risk control: Studies indicate that organizations that enact proactive agentic security controls cut the response time by 40 percent in case of an incident. This is translated to decrease in downtimes and economic effect.

Stakeholder Trust: Open system of governance develops trust with the customers and partners, as well as authorities. Security-by-design reflects maturity in the organization.

Talent Nurturing: Knowledge in agentic AI security is now one of the crucial competitive capabilities. The organizations that develop this ability in-house will have benefits in terms of talent.

Market Opportunity: It is estimated that the value of Agentic AI will free up $2.6-4.4 trillion of yearly value across the globe. Those organizations capable of capturing this technology effectively are able to have measured disproportionate share of the same.

Phased Implementation Roadmap

The security of agentic AI should be considered by organizations in stages:

Phase 1 – Introduction (Months 1-3): Map all the agentic AI deployments. Threat modeling based on OWASP ASI Top 10. Introduction of extensive logging and observability. Put governance policies and control structures in place.

Phase 2 – Hardening (Months 4-6): Implement technical guardrail and sandboxing. Adopt agentic identity management using short lived credentials. Institute supply chain validation. Carry out red team exercises on key agents.

Phase 3 – Refinements (Months 7-12): Improve monitoring, according to the working statistics. Write playbooks about observed patterns of attacks. Repair causing injuries and damages autonomously where suitable. Best practices on a scale basis in the organization.

Phase 4 – Leadership (Year 2+): Be involved with the development of industry standards. Develop agents-based security features internally. Be a member of threat intelligence. Compete using security maturity.

Best Practices and Security Checklist of Agentic AI Implementation gives tactical steps of each stage, tools and settings to be used and the validation criteria.

What’s Coming: The Future of Agentic AI Security

The trajectory is clear. Significant agentic systems failures in the public are predicted by 2026 to increase pressure on security frameworks and alternatives to governance-needs. In the near future (2027-2028), compulsory protective measures in controlled areas such as financial services, healthcare, and critical infrastructure will probably arise. In 2029 and after, competitive positioning will be determined through agentic security maturity.

Organizations that realize these threats today and adopt more defensive, multi-layered protection will be in a good position to commit to the gigantic value of agentic AI as well as security and operational integrity.

The idea of moving towards chatbots that speak into agents that do is a fundamental change in the spread of security threats in the enterprise systems. The conventional application security architectures are inadequate. You require agent specific threat models, governance measures, technical controls and incident response measures.

This isn’t optional anymore. AI has already been deployed in agentic modes, and it comes at scale with decision-making and performing actions. It is not whether you will require agentic AI security but when you will apply the agentic AI security before or after you are first hit.

Read:

AI-Powered Incident Response: Automating Detection, Triage, and Containment

I’m a technology writer with a passion for AI and digital marketing. I create engaging and useful content that bridges the gap between complex technology concepts and digital technologies. My writing makes the process easy and curious. and encourage participation I continue to research innovation and technology. Let’s connect and talk technology!