I get it: when you hear "software supply chain security," you probably think it is another vendor buzzword. But ever since SolarWinds showed that a single build-system breach can compromise corporations and government organizations and cause billions of dollars in damage, this is no longer hypothetical. It is the battlefield where attackers are succeeding, and most organizations are not even aware that they are at risk.

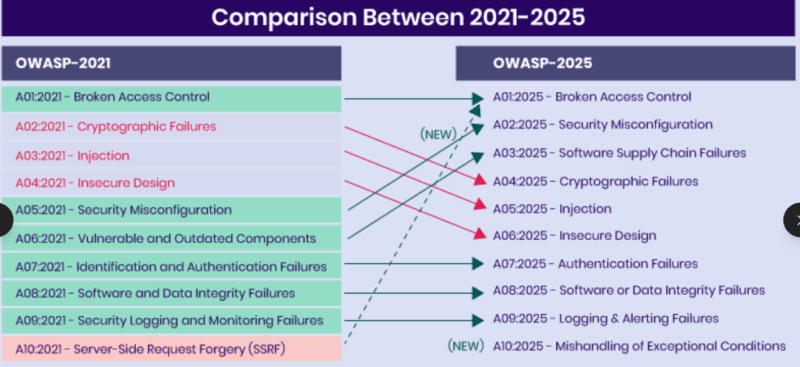

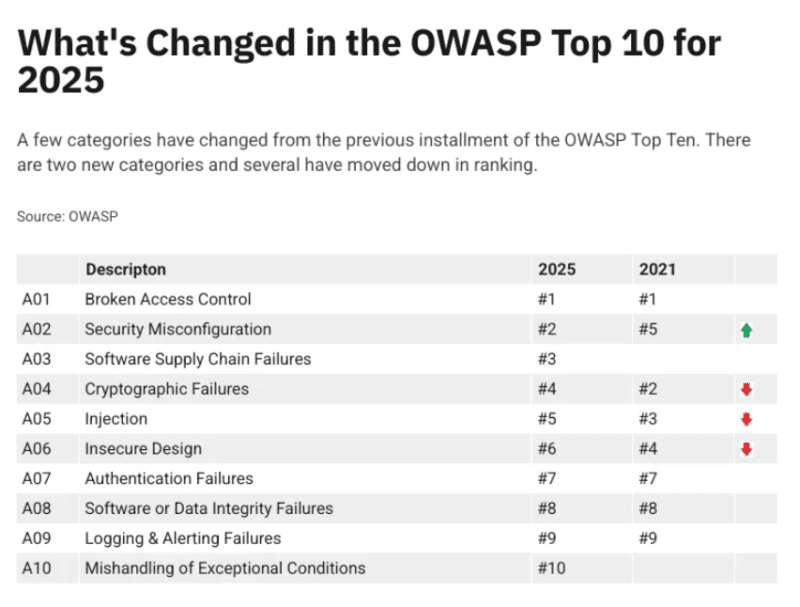

The numbers tell the story: software supply chain attacks cost more than $45 billion in 2023, and estimates put that figure at $80 billion by 2026, according to CNCF TAG Security. This is why OWASP ranked Software Supply Chain Failures number 3 in its 2025 Top 10, right beside injection attacks and broken authentication.

Here is what is actually happening right now, what is and is not working, and where this entire space is heading.

Why GitHub Is Predicted as the #1 Attack Vector for 2026

GitHub is no longer just a place where developers store code. It is the central nervous system of modern software development. And that is exactly what makes it the most appealing target for attackers.

Security researchers are calling it: GitHub will be the top attack vector in 2026. Here’s why:

GitHub Apps and Actions are ticking time bombs. GitHub Apps often request broad permissions at the organization level. Even an app you installed months ago could be compromised and used to inject malicious code into every repo, steal secrets, or alter CI/CD workflows without raising any obvious alarms.

I have worked on production pipelines built on GitHub Actions, and this is what I have observed: most organizations treat workflow files as configuration, not code. They do not review them as critically. One malicious `uses:` statement pointing to a compromised Action, and your whole build system has been taken over.
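One way to start treating workflow files as code is to lint them. The sketch below is a minimal illustration, not a complete auditor: it assumes a policy of pinning third-party Actions to a full 40-character commit SHA (a commonly recommended hardening practice, not something taken from this article), and the function name `find_unpinned_actions` and the sample workflow, including `example-org/example-action`, are hypothetical.

```python
import re

# Matches lines such as "uses: owner/repo@ref" or "- uses: owner/repo/path@ref".
USES_RE = re.compile(r"^\s*-?\s*uses:\s*([^\s#]+)", re.MULTILINE)
# A full Git commit SHA is 40 lowercase hex characters.
FULL_SHA_RE = re.compile(r"^[0-9a-f]{40}$")


def find_unpinned_actions(workflow_text: str) -> list[str]:
    """Return every `uses:` reference that is not pinned to a full commit SHA."""
    unpinned = []
    for ref in USES_RE.findall(workflow_text):
        ref = ref.strip("'\"")
        # Local actions (./path) and docker:// images follow different rules;
        # this sketch simply skips them.
        if ref.startswith("./") or ref.startswith("docker://"):
            continue
        _, _, version = ref.partition("@")
        if not FULL_SHA_RE.match(version):
            unpinned.append(ref)
    return unpinned


# Hypothetical workflow used only for illustration.
SAMPLE_WORKFLOW = """\
jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: example-org/example-action@0123456789abcdef0123456789abcdef01234567
      - name: local helper
        uses: ./.github/actions/local
"""

if __name__ == "__main__":
    for ref in find_unpinned_actions(SAMPLE_WORKFLOW):
        print(f"not pinned to a commit SHA: {ref}")
```

A tag such as `v4` can be moved to point at different code later, while a commit SHA cannot, which is why the check flags the first reference and accepts the second. A real linter would parse the YAML properly instead of relying on a regular expression.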

Compromised maintainer accounts are low-hanging fruit. Attackers do not have to breach GitHub's infrastructure. They only need the credentials of a single developer. No MFA? Game over. Weak MFA (SMS-based)? Still vulnerable. Once inside, they can push malicious commits, publish backdoored releases, or loosen the permissions on protected branches.

The OpenSSF Source Code Management Best Practices Guide hammers on this point: every contributor must use MFA, every branch must have protection policies, every PR must be reviewed, access must be locked down, and not everyone should be allowed to approve workflow changes. Yet most of the repos I have reviewed, including popular open-source projects, do not have all of these in place.

Third-party bots and integrations introduce additional risk. Many companies grant bots administrative privileges out of laziness. If that bot's token leaks, or the service behind it is compromised, you have handed attackers the keys.

What Software Supply Chain Security Actually Covers

Think of the software supply chain as a pipeline with a distinct attack surface at each stage:

Source & Developers

- IDEs, developer workstations, local toolchains.

- Risks: credential theft, malware on dev machines, malicious insiders, secret sprawl.

Dependencies & Package Registries

- Open-source registries: npm, PyPI, Maven, NuGet, RubyGems.

- Risks: vulnerable components, typosquatting, dependency confusion, malicious package updates.

Source Code Management (SCM)

- GitHub, GitLab, Bitbucket projects.

- Risks: compromised maintainer accounts, unreviewed changes, misconfigured settings and permissions.

CI/CD & Build Systems

- Build pipelines, runners, secrets, infrastructure-as-code

- Risks: pipeline credential theft, poisoned build scripts, untrusted runners, artifact tampering

Artifacts

- Container images, packages, binaries, Helm charts.

- Risks: unsigned or maliciously signed artifacts, compromised registries, artifacts produced by compromised builders.

Deployment & Runtime

- Kubernetes clusters, VMs, serverless platforms

- Risks: pulling untrusted images, drift from known-good images, unpatched base images

The critical mindset shift: you are defending the factory, not just the product. An attacker who compromises your build pipeline can inject malicious code into everything you ship, and it may take months before you notice.

The SolarWinds Aftermath – Why SBOMs Became Non-Negotiable

SolarWinds was the wake-up call. Attackers compromised the Orion build system and inserted the SUNBURST backdoor into legitimate software updates. Because it was signed with valid certificates and delivered through official channels, thousands of organizations installed it, including U.S. government agencies.

The question everyone asked afterwards: how do we know what is in our software?

Enter the Software Bill of Materials (SBOM), an ingredients list for software. It records every component, library, and dependency of an application, along with versions and licenses.

After SolarWinds, SBOMs went from nice-to-have to necessity:

- In the United States, Executive Order 14028 requires SBOMs for software sold to government agencies.

- In Europe, the EU Cyber Resilience Act is driving SBOM adoption.

- Enterprise procurement teams increasingly require SBOMs from vendors before signing a contract.

However, here is the gap I have noticed: most organizations create SBOMs as a compliance checkbox. They generate them, store them somewhere, and never look at them again. That is not how it should work.

Modern SBOM practice involves:

- Automated generation in CI/CD with tools such as Syft, in standard formats such as CycloneDX or SPDX.

- Cryptographic signing to ensure the SBOM has not been tampered with.

- Integration with vulnerability scanners so that static component lists turn into actionable risk information.

- VEX (Vulnerability Exploitability eXchange) annotations that state which vulnerabilities actually affect your build.

This is not about paperwork. It is about knowing exactly what is in your software, how it got there, and whether it is vulnerable or already compromised.
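To make the "SBOM plus VEX" idea concrete, here is a minimal sketch of turning a component list into actionable findings. It assumes a small inline document that loosely follows CycloneDX JSON field names (`components`, `vulnerabilities`, `affects`, `analysis.state`). The package names and CVE identifiers are placeholders, and `actionable_findings` is a hypothetical helper, not part of any SBOM tool.

```python
import json

# Placeholder SBOM: component names and CVE IDs are invented for illustration.
SBOM_JSON = """
{
  "bomFormat": "CycloneDX",
  "components": [
    {"bom-ref": "pkg:a", "name": "libalpha", "version": "1.2.0"},
    {"bom-ref": "pkg:b", "name": "libbeta", "version": "0.9.1"}
  ],
  "vulnerabilities": [
    {"id": "CVE-0000-0001", "affects": [{"ref": "pkg:a"}],
     "analysis": {"state": "not_affected"}},
    {"id": "CVE-0000-0002", "affects": [{"ref": "pkg:b"}],
     "analysis": {"state": "exploitable"}}
  ]
}
"""

# VEX states that this sketch treats as "no action needed".
DISMISSED_STATES = {"not_affected", "false_positive", "resolved"}


def actionable_findings(sbom: dict) -> list[tuple[str, str, str]]:
    """Return (vulnerability id, component name, version) for findings that still need work."""
    by_ref = {c["bom-ref"]: c for c in sbom.get("components", [])}
    findings = []
    for vuln in sbom.get("vulnerabilities", []):
        # A vulnerability without an analysis is treated as still in triage.
        state = vuln.get("analysis", {}).get("state", "in_triage")
        if state in DISMISSED_STATES:
            continue
        for affected in vuln.get("affects", []):
            component = by_ref.get(affected["ref"])
            if component is not None:
                findings.append((vuln["id"], component["name"], component["version"]))
    return findings


if __name__ == "__main__":
    for finding in actionable_findings(json.loads(SBOM_JSON)):
        print(finding)
```

The point of the VEX state is visible in the filter: the first placeholder vulnerability is recorded but dismissed as `not_affected`, so only the second one reaches the team's queue.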

Supply Chain Attack Patterns You Need to Know

Attackers are no longer only exploiting weaknesses in code. They are attacking the processes and infrastructure that produce code. The most common supply chain attack patterns today are:

Dependency Confusion: An attacker publishes a malicious package to a public registry under the same name as your private internal package, but with a higher version number. Your build system pulls the malicious public version instead of the one you wrote. It hit some of the largest technology firms in 2021, and it still works today.
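The mechanics are easy to see in a toy resolver. The sketch below is a simplified model, not how any particular package manager works: it assumes versions are plain dotted integers, and the registry contents, the package name `acme-billing`, and both function names are hypothetical.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn '1.4.0' into (1, 4, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def naive_resolve(name: str, registries: dict) -> tuple[str, str]:
    """Pick the highest version of `name` across ALL registries (the vulnerable behavior)."""
    candidates = [
        (parse_version(version), version, registry)
        for registry, packages in registries.items()
        for version in packages.get(name, [])
    ]
    if not candidates:
        raise LookupError(f"package not found: {name}")
    _, version, registry = max(candidates)
    return version, registry


def scoped_resolve(name: str, registries: dict, internal_names: set,
                   internal_registry: str = "internal") -> tuple[str, str]:
    """Resolve internal package names from the internal registry only."""
    if name in internal_names:
        registries = {internal_registry: registries.get(internal_registry, {})}
    return naive_resolve(name, registries)


# Hypothetical registry contents: the attacker published 99.0.0 publicly.
REGISTRIES = {
    "internal": {"acme-billing": ["1.4.0"]},
    "public": {"acme-billing": ["99.0.0"], "requests": ["2.31.0"]},
}

if __name__ == "__main__":
    print(naive_resolve("acme-billing", REGISTRIES))
    print(scoped_resolve("acme-billing", REGISTRIES, {"acme-billing"}))
```

The naive resolver picks the attacker's `99.0.0` from the public registry simply because it is the highest version. Restricting internal names to the internal registry removes that choice, which is the idea behind scoped or namespaced packages and private mirrors.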

Typosquatting: Attackers register packages with names that closely resemble popular libraries (loadash instead of lodash, reqeusts instead of requests). A developer mistypes the name, installs the malicious version, and it steals credentials or plants a backdoor.
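A simple guard against this is to compare every requested package name with a list of well-known names and flag near misses. The sketch below uses Python's standard `difflib.SequenceMatcher`. The short `POPULAR_PACKAGES` list, the 0.85 threshold, and the function name `possible_typosquat` are illustrative assumptions, and a real check would use a much larger list.

```python
from difflib import SequenceMatcher
from typing import Optional

# Tiny illustrative list; a real check would load thousands of popular names.
POPULAR_PACKAGES = ("lodash", "requests", "express", "numpy")


def similarity(a: str, b: str) -> float:
    """Similarity ratio between 0.0 (nothing in common) and 1.0 (identical)."""
    return SequenceMatcher(None, a, b).ratio()


def possible_typosquat(name: str, popular=POPULAR_PACKAGES,
                       threshold: float = 0.85) -> Optional[str]:
    """Return the popular package that `name` suspiciously resembles, or None."""
    if name in popular:
        return None  # Exact match: this is the real package.
    closest = max(popular, key=lambda known: similarity(name, known))
    return closest if similarity(name, closest) >= threshold else None


if __name__ == "__main__":
    for candidate in ("loadash", "reqeusts", "lodash", "flask"):
        print(candidate, "->", possible_typosquat(candidate))
```

With this threshold, `loadash` and `reqeusts` are flagged as look-alikes of `lodash` and `requests`, while the exact name `lodash` and the unrelated name `flask` pass. The threshold is a trade-off: lower values catch more typos but also flag more legitimate packages.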

Compromised Maintainers: Attackers take over a maintainer's account or publishing rights through phishing, credential stuffing, or social engineering, then push malicious updates to legitimate packages. The 2018 event-stream npm incident followed this pattern: an attacker socially engineered their way into publishing rights for the package.

CI/CD Pipeline Poisoning: Attackers compromise the build system itself, for example the runners, the workflow definitions, or the CI secrets. Malicious code is planted at the build stage, so every artifact produced from it is backdoored.

Malicious GitHub Actions or Third-Party Integrations: Attackers publish a useful-looking Action or bot, then update it later with malicious code. Or they compromise an already popular Action.

Understanding these patterns is the key to designing effective defenses.

Dependency Scanning & Vulnerable Component Detection – What Actually Works

Most organizations run dependency scanning and vulnerable component detection, but the effectiveness of these tools varies wildly.

The baseline everyone should have:

- Software Composition Analysis (SCA) tools such as Snyk, Dependabot, or OWASP Dependency-Check to scan for known CVEs.

- Automated PR generation when vulnerabilities are found.

- Policy enforcement that blocks builds when critical vulnerabilities are present.

Here is where teams fail: they turn on scanning, see 200 alerts, get overwhelmed, and end up ignoring everything. Alert fatigue is real.

What works better:

- Risk-based prioritization: not all CVEs are alike. Does your code actually use the vulnerable functionality? Is there a known exploit in the wild? Reachability analysis is now available in tools such as Snyk and GitHub Advanced Security.

- Dependency pinning and allow-lists: maintain a list of approved packages and versions. Scanning then catches the bad stuff that slips through.

- Private mirrors and proxies: route package fetches through an internal mirror (Artifactory, Nexus). Scan and block malicious packages before they reach your network.
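Risk-based prioritization can be as simple as re-ranking scanner output with the two questions above. The sketch below is one possible scoring scheme, not a standard: the `Finding` fields, the weights (+10 for reachable code, +5 for a known exploit), and the placeholder CVE identifiers are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str          # Placeholder identifiers in this example.
    cvss: float          # Base severity score, 0.0 to 10.0.
    reachable: bool      # Does our code call the vulnerable functionality?
    known_exploit: bool  # Is an exploit known to be used in the wild?


def priority(finding: Finding) -> float:
    """Illustrative score: reachability and exploitation outweigh raw severity."""
    score = finding.cvss
    if finding.reachable:
        score += 10.0
    if finding.known_exploit:
        score += 5.0
    return score


def triage(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority, reverse=True)


FINDINGS = [
    Finding("CVE-0000-1001", cvss=9.8, reachable=False, known_exploit=False),
    Finding("CVE-0000-1002", cvss=6.5, reachable=True, known_exploit=True),
    Finding("CVE-0000-1003", cvss=7.5, reachable=True, known_exploit=False),
]

if __name__ == "__main__":
    for finding in triage(FINDINGS):
        print(finding.cve_id, priority(finding))
```

Under these weights, the 9.8 "critical" in unreachable code drops to the bottom of the queue, while the medium-severity finding that is both reachable and exploited rises to the top. That reordering is what cuts through alert fatigue.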

The uncomfortable truth: open-source ecosystems such as npm contain millions of packages. Manual vetting is impossible. You need automated tooling and restrictive policies, or you are simply hoping that attackers never take an interest in you.

Third-Party Vendor Risk Is Exploding (And Most Companies Are Blind)

The code you write may be safe, but what about the dozens or hundreds of third-party vendors, SaaS tools, and open-source libraries you rely on?

Third-party vendor risk assessment and management was once a procurement checkbox. Now it is a critical security function because:

- Many breaches begin with a compromised vendor (see: Target via its HVAC vendor, SolarWinds via its build system).

- With cloud-native architectures, you are constantly integrating external APIs, libraries, and services.

- Open-source dependencies bring their own transitive dependencies, so you are trusting code written by people you have never heard of.

What mature companies are doing:

- Vendor security questionnaires that ask whether vendors produce SBOMs, comply with SLSA, and follow a secure SDLC.

- Code review of critical third-party dependencies.

- Continuous monitoring of vendors' security health (breaches, CVEs, publicly reported incidents).

- Contractual requirements for SBOMs, vulnerability SLAs, and breach disclosure timelines.

My experience: most companies ask vendors to complete a 50-page security questionnaire during procurement and never revisit it. That is not risk management, that is theater.

The better approach: treat vendors as part of your supply chain. Scan their artifacts, follow their repos, track their CVEs, and have a plan for when (not if) one of them is compromised.

Code Signing, Provenance & Software Integrity Verification

The problem is this: how can you be sure that the binary you are about to deploy is the one your CI system built, and not one that an attacker swapped in?

Code signing, provenance, and software integrity verification answer this question.

Traditional code signing relies on GPG keys or platform-specific signing (Apple, Microsoft). You sign with your private key, and users verify the signature. It works, but key management is painful and does not scale in modern CI/CD.

Sigstore is changing this:

- Automated signing with ephemeral keys tied to workload identity (no long-lived secrets to leak)

- Transparency logs (Rekor) that ensure maliciously signed artifacts cannot stay hidden

- Cosign for signing container images, binaries, and SBOMs

- Integrations with GitHub Actions, GitLab CI, and major cloud providers

I have deployed Cosign in pipelines, and here is what I found: it is dead simple. One command signs your image. Another verifies it. No wrestling with GPG key rotation or hardware tokens.

However, signing is not the whole story. You also need provenance attestations: cryptographically verifiable statements about which source an artifact was built from, what inputs were used, and which build system produced it.

Tools such as in-toto and SLSA provenance provide this. The concept: each stage of your pipeline produces a signed attestation. At deployment time, you verify the whole chain. If any step is missing or invalid, the deployment fails.
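The chain-verification idea can be sketched in a few lines. This is a toy model and not the in-toto or SLSA format: it uses a shared-secret HMAC from the standard library as a stand-in for the asymmetric, identity-based signatures that real tooling such as Sigstore uses, and every name here (`attest`, `verify_chain`, the `build` and `package` steps, `DEMO_KEY`) is hypothetical.

```python
import hashlib
import hmac
import json


def digest(data: bytes) -> str:
    """SHA-256 digest used to identify a source tree or artifact."""
    return hashlib.sha256(data).hexdigest()


def sign(key: bytes, statement: dict) -> str:
    # HMAC is a stand-in for a real signature; real systems use asymmetric keys.
    payload = json.dumps(statement, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def attest(key: bytes, step: str, input_digest: str, output_digest: str) -> dict:
    """Produce a signed statement: this step turned this input into this output."""
    statement = {"step": step, "input": input_digest, "output": output_digest}
    return {"statement": statement, "signature": sign(key, statement)}


def verify_chain(key: bytes, attestations: list, required_steps: list,
                 source_digest: str, artifact_digest: str) -> bool:
    """Accept the artifact only if every required step is present, signed, and linked."""
    if [a["statement"]["step"] for a in attestations] != list(required_steps):
        return False  # A step is missing, extra, or out of order.
    expected_input = source_digest
    for attestation in attestations:
        statement = attestation["statement"]
        if not hmac.compare_digest(attestation["signature"], sign(key, statement)):
            return False  # Signature does not match the statement.
        if statement["input"] != expected_input:
            return False  # This step did not consume the previous step's output.
        expected_input = statement["output"]
    return expected_input == artifact_digest


# Demo data: a two-step pipeline from source to container image.
DEMO_KEY = b"demo-key-not-for-production"
SOURCE = digest(b"source tree")
BINARY = digest(b"compiled binary")
IMAGE = digest(b"container image")
CHAIN = [attest(DEMO_KEY, "build", SOURCE, BINARY),
         attest(DEMO_KEY, "package", BINARY, IMAGE)]

if __name__ == "__main__":
    print(verify_chain(DEMO_KEY, CHAIN, ["build", "package"], SOURCE, IMAGE))
    print(verify_chain(DEMO_KEY, CHAIN, ["build", "package"], SOURCE, digest(b"swapped image")))
```

The first check passes because each step's input matches the previous step's output all the way from the source to the image being deployed. The second fails because the image digest is not the one the final attestation vouches for, which is exactly the "swapped artifact" case.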

That is where this is heading: artifacts without cryptographically provable provenance should not be deployed.

Securing the Open-Source Ecosystem – OSS Governance That Works

The truth: you cannot stop using open source. JavaScript projects average 200 or more dependencies. Python, Ruby, Go: same story. The question is how safely you use it.

What good OSS governance looks like:

- Approved package registries: do not let developers pull from arbitrary mirrors.

- License compliance auditing: know which licenses you are using and what obligations they impose on you.

- Upstream participation: sponsor the dependencies that matter and report vulnerabilities responsibly.

- Fallback plans: can you maintain the library yourself if it is abandoned or the maintainer burns out?

Research on open-source best practices conducted by the UK government identified a gap: most organizations consume OSS at large scale but spend almost nothing on upstream security or sustainability. They treat it as free infrastructure that somehow maintains itself.

The more sensible approach: identify which of your dependencies are critical (that is, your product breaks without them) and actively participate in those projects. Sponsor them, contribute patches, and help with security reviews.

What’s Coming Next – Verifiable Pipelines and Continuous Compliance

Based on the latest direction from CNCF, OpenSSF, and NIST, this is where software supply chain security is heading:

Verifiable Pipelines End to End. Cryptographic attestations are generated and verified at every step from commit to production. Policy engines only allow deployment of artifacts whose provenance chains are verified to meet SLSA requirements. No provenance? No deployment.

Near-Real-Time Risk Scoring. SBOMs, vulnerability feeds, exploit intelligence, and runtime telemetry are combined into dynamic risk scores per service or artifact. Not quarterly, but continuously updated.

Stronger Hardware and Identity Roots of Trust. Build systems anchored in hardware roots of trust (TPM, HSM), confidential computing, and workload identity. This makes pipeline compromise extremely difficult.

Automated Continuous Compliance. Regulatory expectations are met through continuous streams of evidence: attestations, logs, and policy decisions, all recorded in a form that can be verified. Audits stop being drawn-out ordeals and become automated queries.

Final Take – Start Small, But Start Now

Software supply chain security is not something that can be fixed during a single sprint. It’s a maturity journey.

Here is how I would do it if I were starting today:

Week 1:

- Turn on MFA for your organization.

- Enable branch protection and require PR reviews.

- Turn on Dependabot or an equivalent dependency scanner.

Month 1:

- Generate SBOMs for your key applications.

- Implement basic artifact signing (Cosign for containers).

- Audit your CI/CD secrets and permissions.

Quarter 1:

- Assess your current state against NIST SSDF and SLSA.

- Build a roadmap to SLSA Level 2.

- Begin third-party risk assessments for your major vendors.

Attackers are already targeting your supply chain. The question is: will you notice in time?
