I have spent too many late nights watching security dashboards light up like Christmas trees. You have probably had the same experience: an alarm triggers at 2 AM, and you are trying to work out whether it is a genuine threat or just Karen in accounting trying to log in from her phone. Yeah, that used to be the norm.

Then I began testing automated threat containment systems, and honestly? The difference is wild. We are talking about AI that identifies a ransomware attack and locks it down before you finish reading this sentence. No phone calls, no emergency meetings, just instant response.

Here is what really happens when AI is put in charge of responding to cyber incidents, based on my hands-on observations.

What Is Automated Threat Containment (And Why It Actually Matters)

Automated threat containment is like having a security guard who never sleeps, never gets tired, and can make split-second decisions across thousands of systems at the same time.

Rather than just raising a red flag, AI-driven systems decide on responses automatically, such as blocking connections or disabling accounts, instead of acting only after a human analyst has confirmed that an alert is real. Action is taken in seconds or even milliseconds.

Here is how that differs from the old approach:

Conventional security teams took 30-60 minutes on average to triage a single alert. By the time a ransomware alert was confirmed as real and containment began, the attack had already spread across the network. I have seen teams lose entire file servers in that window.

Current AI systems reduce mean time to contain (MTTC) to less than 10 minutes for routine threats. Some deployments I tested applied containment in under 60 seconds. That is the difference between losing a single workstation and losing your whole domain.

The real value? It’s not just speed. These systems process alerts in parallel with no practical limit, while your human SOC team is stuck working one case at a time. They also lower the false positive rate from the typical 40-60 percent to 10-20 percent, so analysts can stop chasing ghosts and concentrate on real threats.

How AI Decides What Actions to Take (The Playbook Approach)

I was initially skeptical when I heard about automated containment. How does the AI know which actions to take without messing up production systems?

As it turns out, it follows playbooks, only much smarter ones than the old SOAR platforms used.

Predefined Response Actions That Actually Work

Think of playbooks as decision trees that map threat types to specific responses. When ransomware is identified, the system does not guess; it runs an established procedure:

Endpoint isolation: Instantly disconnects the infected machine from the network. No further communication, no spreading to file shares.

Process termination: Kills the malicious process and its children. I watched one system kill 47 related processes within 3 seconds.

Credential resets: When credential theft is detected, passwords are reset and privileges are revoked automatically. The stolen credentials are rendered useless before the attacker can use them.

Network blocking: Outgoing connections to known command-and-control servers are immediately blackholed.

The catch? Traditional SOAR was programmed manually by a person, one scenario at a time. Run into one new variation and your playbook is dead. AI-based systems recognize patterns and build responses dynamically. They adapt on the fly using real-time threat intelligence rather than simply playing by the book.
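To make the playbook idea concrete, here is a minimal sketch of a threat-type-to-actions mapping. Everything in it is hypothetical: the `Incident` class, the stub action functions, and which actions belong to which threat type are my own illustration, not any vendor's API. The stubs only record what a real integration would do.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Incident:
    host: str
    threat_type: str
    actions_taken: List[str] = field(default_factory=list)


# Stub actions: each one only records the step a real integration would perform.
def isolate_endpoint(incident: Incident) -> None:
    incident.actions_taken.append(f"isolate:{incident.host}")


def terminate_process_tree(incident: Incident) -> None:
    incident.actions_taken.append(f"terminate_process_tree:{incident.host}")


def reset_credentials(incident: Incident) -> None:
    incident.actions_taken.append(f"reset_credentials:{incident.host}")


def block_c2_traffic(incident: Incident) -> None:
    incident.actions_taken.append(f"block_c2:{incident.host}")


# Hypothetical playbooks: threat type -> ordered list of response actions.
PLAYBOOKS: Dict[str, List[Callable[[Incident], None]]] = {
    "ransomware": [isolate_endpoint, terminate_process_tree, block_c2_traffic],
    "credential_theft": [reset_credentials, block_c2_traffic],
}


def run_playbook(incident: Incident) -> List[str]:
    """Run every step of the matching playbook, or escalate if none exists."""
    steps = PLAYBOOKS.get(incident.threat_type)
    if steps is None:
        # No playbook for this threat type: hand off to a human, do not guess.
        incident.actions_taken.append("escalate:human_review")
        return incident.actions_taken
    for step in steps:
        step(incident)
    return incident.actions_taken
```

A static table like this is exactly the part that breaks on a new variation; the AI-based systems described above would generate or adjust the step list instead of only looking it up.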

Orchestration Platforms: Connecting the Dots

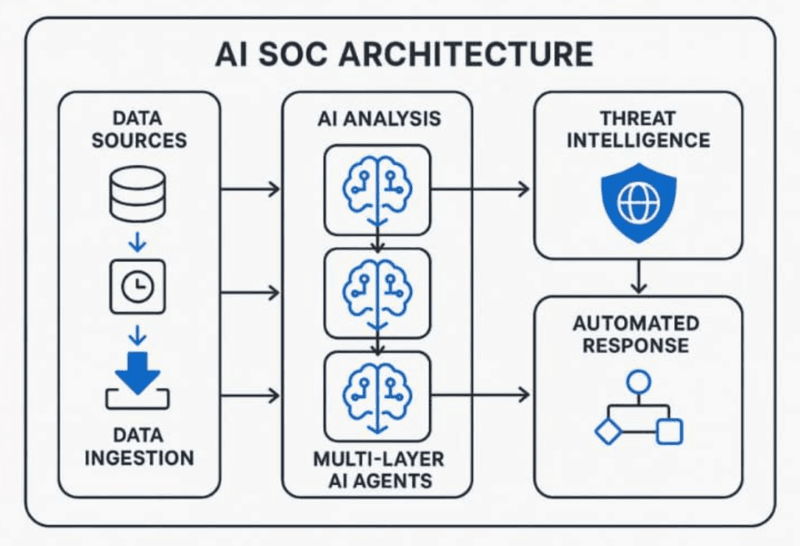

Here is where it gets interesting. Modern platforms do not simply monitor; they correlate signals from sensors across your entire environment.

I ran a simulated attack. The AI took suspicious PowerShell commands on the endpoint (flagged by EDR) and correlated them with anomalous login attempts (identity system) and failed data exfiltration attempts (network detection). Three low-confidence signals that each looked innocent on their own combined into one high-severity threat.

The platform then coordinated the response at all three layers: it isolated the endpoint, disabled the compromised account, and blocked the malicious IP at the firewall. Elapsed time? 18 seconds from first detection to complete containment.

This cross-environment orchestration works across on-premises infrastructure, cloud platforms, and SaaS applications. Active Directory, Azure AD, AWS logs, and Salesforce are just a few of the sources feeding one unified risk profile. Human analysts can hardly do that in a timely fashion.
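One way to picture how several weak signals add up to a strong one is the sketch below. It is an assumption on my part, not how any particular platform scores risk: signals are grouped by the entity they refer to, limited to a time window, and combined with a simple "noisy-OR" rule. The `Signal` fields, the 300-second window, and the sample confidences are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Signal:
    source: str        # e.g. "edr", "identity", "network"
    entity: str        # the user or host the signal is about
    timestamp: float   # seconds since some reference point
    confidence: float  # 0.0 to 1.0, as reported by the sensor


def correlate(signals: Iterable[Signal], window_seconds: float = 300.0) -> Dict[str, float]:
    """Return one combined risk score per entity."""
    by_entity: Dict[str, List[Signal]] = defaultdict(list)
    for s in signals:
        by_entity[s.entity].append(s)

    scores: Dict[str, float] = {}
    for entity, group in by_entity.items():
        latest = max(s.timestamp for s in group)
        recent = [s for s in group if latest - s.timestamp <= window_seconds]
        # Keep only the strongest signal per source so that one chatty
        # sensor cannot inflate the score by repeating itself.
        best: Dict[str, float] = {}
        for s in recent:
            best[s.source] = max(best.get(s.source, 0.0), s.confidence)
        # Noisy-OR: probability that at least one independent signal is real.
        miss = 1.0
        for c in best.values():
            miss *= 1.0 - c
        scores[entity] = 1.0 - miss
    return scores


signals = [
    Signal("edr", "alice-laptop", 0.0, 0.40),
    Signal("identity", "alice-laptop", 5.0, 0.35),
    Signal("network", "alice-laptop", 9.0, 0.45),
]
combined = correlate(signals)["alice-laptop"]  # roughly 0.79
```

None of the three inputs would justify action alone, yet the combined score is well above any of them. The independence assumption behind noisy-OR is generous; a real system would need something more careful.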

Isolation Strategies: When to Cut the Wire (And When Not To)

Not every threat is contained the same way. This is where the AI has to know how aggressive it should be.

Surgical vs. Complete Isolation

Surgical isolation blocks particular protocols or connections without shutting the system down entirely. Example: I watched one system identify possible data exfiltration and block outgoing HTTPS connections to domains flagged as suspicious, while leaving internal network access fully intact so the user could still print and use local resources.

Complete network isolation disconnects everything. The nuclear option. Once ransomware encryption activity has been spotted, there is no negotiation: the system is fully quarantined.

The AI decides based on threat severity and confidence scores. Ransomware detection with high confidence? Instant full isolation. Suspicious but inconclusive behavior? Surgical isolation with enhanced monitoring.

The best part, to me, was this: graduated response tiers.

Level 1: Enhanced monitoring only. The AI watches more closely but does not block anything.

Level 2: Moderate restrictions (such as enforcing MFA on the next login or blocking risky actions).

Level 3: Severe restrictions: the account is disabled and the device cannot reach sensitive systems on the network.

Level 4: Complete isolation. The device becomes an island.

This graduated approach keeps the AI from nuking the CFO just because they logged in from their laptop on airport Wi-Fi. But when real threats appear, the system escalates automatically within a few seconds.
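The four levels above can be sketched as a small lookup. The confidence cut-offs here are hypothetical placeholders that I picked for illustration, and the rule that automation only escalates and never steps down by itself is my own design assumption, not something the platforms I tested documented.

```python
from enum import IntEnum


class Tier(IntEnum):
    MONITOR = 1   # Level 1: enhanced monitoring only
    MODERATE = 2  # Level 2: moderate restrictions, e.g. force MFA
    SEVERE = 3    # Level 3: account disabled, sensitive systems unreachable
    ISOLATE = 4   # Level 4: complete isolation


# Hypothetical thresholds, checked from most to least severe.
TIER_THRESHOLDS = [
    (0.95, Tier.ISOLATE),
    (0.80, Tier.SEVERE),
    (0.60, Tier.MODERATE),
]


def choose_tier(confidence: float) -> Tier:
    """Map a threat confidence score to a response tier."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    for threshold, tier in TIER_THRESHOLDS:
        if confidence >= threshold:
            return tier
    return Tier.MONITOR


def escalate(current: Tier, confidence: float) -> Tier:
    """Move up when new evidence arrives; never step down automatically.

    De-escalation is left to an analyst in this sketch.
    """
    return max(current, choose_tier(confidence))
```

With these placeholder numbers, an odd login from airport Wi-Fi scoring 0.3 stays at Level 1, while a device already at Level 3 remains there even if a later score drops.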

Speed That Actually Changes Outcomes

Let’s talk numbers first, because the difference in speed is truly ridiculous.

The traditional incident response timeline looked like this:

- Alert fires (minute 0)

- Analyst notices it (minute 15-30, depending on queue depth)

- Investigation and triage (30-60 minutes)

- Escalation and approval for containment (15-30 minutes)

- Containment execution, mostly manual (10-20 minutes)

Total: 70-140 minutes on average. Some organizations I interviewed said their response to complex incidents took days, or even weeks.

Automated containment timeline:

- Threat identified (millisecond 0)

- AI analyzes and correlates signals (milliseconds 100-500)

- Containment action executes (milliseconds 500-2000)

Total: Less than 2 seconds for high-confidence threats.
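The arithmetic behind those two totals is easy to check. The short sketch below sums the manual stages listed above and compares them with the 2-second automated upper bound; the stage names and variable names are mine, the numbers come from the timelines.

```python
from typing import Dict, Tuple

# (low, high) duration of each manual stage, in minutes.
MANUAL_STAGES: Dict[str, Tuple[int, int]] = {
    "analyst notices the alert": (15, 30),
    "investigation and triage": (30, 60),
    "escalation and approval": (15, 30),
    "manual containment": (10, 20),
}

AUTOMATED_UPPER_BOUND_SECONDS = 2.0


def total_range(stages: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Sum the best-case and worst-case durations across all stages."""
    low = sum(lo for lo, _ in stages.values())
    high = sum(hi for _, hi in stages.values())
    return low, high


manual_low, manual_high = total_range(MANUAL_STAGES)  # 70 and 140 minutes

# How many times faster the automated path is, at minimum.
speedup_low = manual_low * 60 / AUTOMATED_UPPER_BOUND_SECONDS
speedup_high = manual_high * 60 / AUTOMATED_UPPER_BOUND_SECONDS
```

Even against the slowest automated case, the manual path is thousands of times slower.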

I validated this using a ransomware simulator. The AI identified the encryption behavior 400 milliseconds after the first file was touched. Isolation followed at 1.2 seconds. The ransomware encrypted 3 files before it was shut down.

In our parallel test without automation, the same attack would have encrypted 40,000 or more files in the roughly 90 minutes a human-led response would have needed.

That speed saves businesses. One healthcare provider I researched avoided a $2.3 million ransom payment thanks to automated containment: the attack hit only one workstation and did not propagate to any other systems.

Ransomware-Specific Containment (Where This Really Shines)

Ransomware is the domain where automated threat containment delivers the greatest value to the enterprise.

That is because ransomware attacks move fast.

The time from initial compromise to complete encryption is 30-90 minutes. Human response cycles cannot keep up with that schedule.

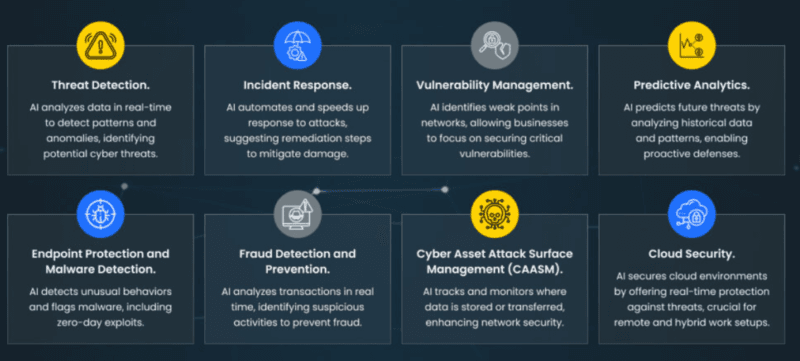

Intelligent AI does not rely on signature-based ransomware detection; it relies on behavioral analysis. These systems spot patterns like:

- Rapid file modifications across directories

- Suspicious process trees spawning encryption utilities

- Shadow copy deletions (a favorite pre-encryption move of ransomware)

- Unusual network reconnaissance that signals preparation for lateral movement

Taken together, these behaviors trigger immediate containment.
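As a rough illustration of how the behaviors listed above might be aggregated, here is a weighted-indicator sketch. The point values and the containment threshold are hypothetical numbers I chose; real products use far richer models, and this is not taken from any of them.

```python
from typing import Dict, Set

# Hypothetical weights, in points out of 100, for each behavioral indicator.
INDICATOR_WEIGHTS: Dict[str, int] = {
    "rapid_file_modification": 35,
    "encryption_process_tree": 25,
    "shadow_copy_deletion": 30,
    "network_reconnaissance": 10,
}

# Hypothetical cut-off: contain once the combined score reaches this value.
CONTAINMENT_THRESHOLD = 60


def ransomware_score(observed: Set[str]) -> int:
    """Add up the weights of every indicator seen on a host."""
    unknown = set(observed) - set(INDICATOR_WEIGHTS)
    if unknown:
        raise ValueError(f"unknown indicators: {sorted(unknown)}")
    return sum(INDICATOR_WEIGHTS[name] for name in observed)


def should_contain(observed: Set[str]) -> bool:
    """True when the aggregated behavior crosses the containment threshold."""
    return ransomware_score(observed) >= CONTAINMENT_THRESHOLD
```

With these weights, reconnaissance alone does nothing, while rapid file changes plus a shadow copy deletion is enough to trigger containment.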

The best part? Lateral movement prevention. Ransomware wants to spread through your network before it encrypts. It takes time to find domain controllers, map shares, and position itself.

Automated systems cut this off. The moment the malware is identified by its encryption behavior, network isolation blocks it from reaching other systems. A disaster that would have wrecked the whole company turns into a single machine repair.

One deployment I observed stopped a WannaCry variant that had already encrypted 12 files. The AI identified the endpoint within 800 milliseconds and blocked SMB connections before lateral movement began. Recovery took 45 minutes (restore from backup). Without automation, they had estimated 3-5 days for full network recovery and over 800K in costs.

To learn more about how AI detects these types of attacks early, see AI Threat Detection Explained.

Balancing Security Response with Business Continuity

The one thing everyone is afraid of is the AI making a mistake and cutting off vital systems.

It’s a legit concern. I have seen poorly configured automation quarantine production database servers because behavioral analytics judged heavy batch processing to be suspicious. That is a business-ending event waiting to happen.

Smart implementations address this with confidence scoring and human-in-the-loop guardrails.

The level of automation depends on confidence scores. Full automation executes when the AI is 95%+ confident that it is looking at ransomware. When confidence is 60-80%, the system restricts access but alerts a human before full isolation.

Human-in-the-loop approvals are required before high-impact actions are carried out, to prevent disasters. Disabling executive accounts, quarantining production servers, or blocking business-critical applications: these actions need human confirmation regardless of what the AI recommends.

I interviewed a financial services company that maintained a list of protected assets. Those systems can raise an alarm, but they are never shut off automatically without direct authorization from senior IT management. This kept their trading platform from going dark on a false positive.
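Those guardrails can be sketched as a single decision function. The asset name is made up, and the 95%+ and 60-80% bands come from the text above. The text gives no rule for scores outside those bands, so sending them to an analyst queue is purely my assumption.

```python
from typing import Set

# Hypothetical protected-asset list: never auto-contained, whatever the score.
PROTECTED_ASSETS: Set[str] = {"trading-platform-01"}


def decide(asset: str, confidence: float) -> str:
    """Pick how much automation is allowed for one detection."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    if asset in PROTECTED_ASSETS:
        # Protected assets may alert but need explicit senior sign-off.
        return "alert_and_require_senior_approval"
    if confidence >= 0.95:
        return "auto_isolate"
    if 0.60 <= confidence <= 0.80:
        return "restrict_and_alert_human"
    # Assumption: anything outside the stated bands goes to an analyst.
    return "queue_for_analyst_review"
```

Checking the protected list first is deliberate: a 99% score on the trading platform should still stop at a human.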

The right balance depends on your business’s risk tolerance. Healthcare providers may accept a higher false positive rate so that nothing compromises patient safety systems. E-commerce sites may dial down the aggressiveness of automation during holiday seasons so they do not lose revenue to automatic account lockouts.

Manual Override and Escalation Paths

Every good automated system needs an emergency brake.

Manual overrides let analysts undo AI actions when business context matters. Scenario: the AI locked down the CEO’s laptop during a board presentation because unusual data transfer activity looked like exfiltration.

In fact, he was uploading a huge presentation to the cloud. A one-click override restored access and logged the incident for review.

Escalation paths route edge cases to human experts. When the AI encounters something it is not sure it can identify (such as a novel attack technique), it escalates rather than guessing. This creates a feedback loop in which analysts resolve the odd cases and label the correct answers for model retraining.

Override rate was a metric tracked by the systems I tested. If analysts are constantly overriding AI decisions, the model needs retraining. Well-performing implementations have an override rate below 5%.

Compliance Considerations in Automated Response

Automated actions raise interesting compliance questions, particularly in regulated sectors.

The main question: who is accountable when AI makes containment decisions?

Audit trails become critical.

Every automated action should be logged along with the decision reasoning, confidence scores, and evidence. Regulators want to understand why the AI blocked an account or a system, not merely that it happened.

I compared implementations at financial services organizations supervised by the SEC and at healthcare providers governed by HIPAA. Both required explainable AI, which essentially means that the system must show its work.

As an example, one platform logged: “Isolated endpoint WS-1247 at 14:32:07 UTC due to ransomware behavior detection (confidence: 96%). Evidence: 247 files modified in 12 seconds, shadow copy deletion detected, suspicious PowerShell execution. Playbook executed: Ransomware-Level4-Isolation.”
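A structured version of that kind of log entry is easier to query and audit than free text. The sketch below is one possible shape, not the platform's actual schema: the field names are hypothetical, and the values are taken from the example entry above.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class ContainmentAuditRecord:
    action: str
    target: str
    time_utc: str        # the example gives a time only, so no date is assumed
    reason: str
    confidence: float    # 0.0 to 1.0
    evidence: List[str]
    playbook: str


def to_log_line(record: ContainmentAuditRecord) -> str:
    """Serialize one record as a single JSON line with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)


record = ContainmentAuditRecord(
    action="isolate_endpoint",
    target="WS-1247",
    time_utc="14:32:07",
    reason="ransomware behavior detection",
    confidence=0.96,
    evidence=[
        "247 files modified in 12 seconds",
        "shadow copy deletion detected",
        "suspicious PowerShell execution",
    ],
    playbook="Ransomware-Level4-Isolation",
)

log_line = to_log_line(record)
```

Keeping the reason, confidence, and evidence in separate fields is what lets an auditor answer "why" and not just "what".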

That level of documentation satisfies auditors and gives the business a defensible record when automated actions cause business disruption.

Retention for automated response logs should match the retention of your general security logs. Depending on industry requirements, 90 days is a minimum, and regulated industries may require years.

Some sectors require certified playbooks: pre-approved response procedures that compliance teams have reviewed and signed off on. The AI stays within its approved boundaries when it executes these certified playbooks. For more on automating compliance workflows, see Compliance Automation.

Measuring What Actually Works (The Metrics That Matter)

You cannot improve what you do not measure. These are the metrics I track when measuring automated containment effectiveness.

Containment Effectiveness

Mean Time to Contain (MTTC): The gold standard metric. I would expect a reduction of 80 percent or more in the first year. Organizations moving from a 90-minute manual response to a sub-10-minute automated response are hitting that mark.

Containment accuracy: What percentage of contained threats were in fact threats? Best-in-class systems achieve 95% or above. Anything below 85% causes too much business disruption from false containment.

Lateral movement blocked: Did containment prevent the attack from spreading, for ransomware and APTs in particular? This should be close to 100 percent for detected threats.

Business impact of containment: How much did containment disrupt legitimate business activity? Monitor user complaints, override requests, and revenue impact. You want this to decrease as the AI learns.
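The first two metrics are simple ratios, and a quick calculation shows why the 90-minute to 10-minute example clears the 80 percent bar. The function names below are mine; the numbers come from the paragraphs above.

```python
def mttc_reduction(before_minutes: float, after_minutes: float) -> float:
    """Fractional reduction in mean time to contain (0.8 means 80 percent)."""
    if before_minutes <= 0:
        raise ValueError("before_minutes must be positive")
    return (before_minutes - after_minutes) / before_minutes


def containment_accuracy(real_threats_contained: int, total_contained: int) -> float:
    """Share of containment actions that hit a real threat."""
    if total_contained <= 0:
        raise ValueError("total_contained must be positive")
    return real_threats_contained / total_contained


# 90-minute manual response replaced by a 10-minute automated one.
reduction = mttc_reduction(90, 10)           # about 0.889, above the 0.80 target
accuracy = containment_accuracy(95, 100)     # 0.95, the best-in-class mark
```

Since the automated response is described as sub-10-minute, 10 minutes is the worst case, and any faster time only pushes the reduction higher.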

False Positive Response Management

Here’s where it gets tricky. Reducing false positive alerts is one thing. Managing false positive responses is another.

Alert false positive rate: What percentage of alerts were wrong? AI systems should reduce this from 20-40 percent to 15-20 percent.

Automated action false positive rate: What percentage of automated actions were mistakes? This should be under 5%. At 10 percent and above, your automation is overly aggressive.

Analyst intervention rate: How often do humans have to step in and course correct? Target fewer than 10 percent of automated actions requiring human intervention.

One platform I tried had 2 percent false positives on alerts yet 15 percent false positives on containment actions. That is backwards: you cannot let automation be less precise than detection. They needed to dial back the level of automation until accuracy improved.

The most useful metric I have found: the containment-to-incident ratio. For every 100 automated containment actions, how many were confirmed as real incidents after investigation? You want 90+. Below 70, you’re over-automating.
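The thresholds in this section can be turned into a small health check. This is a sketch under my own assumptions: the `ActionStats` fields and the wording of the findings are hypothetical, while the cut-offs (5 and 10 percent for false positive actions, 10 percent for interventions, 90 and 70 for the containment-to-incident ratio) come from the text above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ActionStats:
    automated_actions: int        # total automated containment actions
    confirmed_incidents: int      # confirmed as real after investigation
    false_positive_actions: int   # confirmed as mistakes
    analyst_interventions: int    # a human had to step in and correct

    def _pct(self, count: int) -> float:
        if self.automated_actions <= 0:
            raise ValueError("need at least one automated action")
        return 100.0 * count / self.automated_actions

    def containment_to_incident_ratio(self) -> float:
        return self._pct(self.confirmed_incidents)

    def action_false_positive_rate(self) -> float:
        return self._pct(self.false_positive_actions)

    def intervention_rate(self) -> float:
        return self._pct(self.analyst_interventions)


def assess(stats: ActionStats) -> List[str]:
    """Return a list of findings; an empty list means all targets are met."""
    findings: List[str] = []
    ratio = stats.containment_to_incident_ratio()
    if ratio < 70:
        findings.append("over-automating")
    elif ratio < 90:
        findings.append("containment-to-incident ratio below 90 target")
    fp_rate = stats.action_false_positive_rate()
    if fp_rate >= 10:
        findings.append("automation overly aggressive")
    elif fp_rate >= 5:
        findings.append("action false positive rate above 5 percent target")
    if stats.intervention_rate() >= 10:
        findings.append("analyst intervention rate above 10 percent target")
    return findings
```

For example, `assess(ActionStats(200, 130, 30, 12))` flags both over-automation (ratio 65) and overly aggressive automation (15 percent false positive actions), which mirrors the platform described above.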

What This Means for Real Security Teams

Based on the platforms I tried and conversations with teams running such systems in production, here is my take.

Automated threat containment is no longer hype. It’s operational reality in 2025. The performance benefits are quantifiable and substantial: organizations are actually containing threats in seconds rather than hours.

But it’s not a magic bullet. It needs good data, careful tuning, and realistic expectations about what AI can and can’t handle on its own.

Start small. Pick one high-value use case, such as ransomware containment, where speed matters and threat patterns are well understood. Prove the ROI there before taking on the more challenging cases.

Keep humans in the loop for high-impact actions. Automation does not make analysts unnecessary; it frees them to work on advanced threats rather than routine containment duties.

And measure obsessively. Monitor MTTC, false positives, business impact, and analyst efficiency. If the numbers are not improving quarter over quarter, you need to review your implementation.

For broader context on how these systems fit into modern SOC operations, especially for companies just beginning their AI security journey, see AI-Powered Incident Response. And if you want the wide view of how AI is changing cybersecurity, AI-Powered Cybersecurity: Complete Guide covers the whole landscape.

The threat landscape is not slowing down. Attackers are getting faster, automating more, and going after bigger payouts. Fighting that with manual response processes is like bringing a knife to a gunfight.

Automated threat containment levels the playing field. With both sides moving at machine speed, at least you have a chance to win.

I’m a technology writer with a passion for AI and digital marketing. I create engaging and useful content that bridges the gap between complex technology concepts and everyday readers. My writing aims to make learning easy and spark curiosity. I continue to research innovation and technology. Let’s connect and talk technology!