AI-powered security systems promise better threat detection, faster response times, and automated network protection. They also create a fundamental tension: to see threats, these systems must access vast volumes of data, which in many cases includes personal information about employees, customers, and users.

The question is not whether AI can protect networks. It is whether organizations can deploy these systems without turning security infrastructure into a privacy violation machine.

This article breaks down how data privacy works within AI-based security systems, what regulations require, and which technical strategies let organizations identify security risks without infringing on individuals' privacy rights.

The Privacy Paradox in AI Security Monitoring

AI-powered threat detection systems analyze behavioral data, network traffic, login attempts, file access logs, and communication metadata to decide whether a user's behavior represents a threat. The more data these systems read, the more accurately they can spot the anomalies that indicate an attack.

This creates an obvious problem: effective monitoring requires collecting and analyzing large volumes of personal data.

A typical AI security system monitors which files were accessed, by whom, and when; email communication patterns; keystroke and mouse movement behavior; application usage statistics; and network connection histories. Every one of these data points improves threat detection. Every one can also potentially identify an individual.

Organizations eventually realize that the same capabilities that identify insider threats, such as checking employee activity for abnormal changes, can be repurposed to intrude on individuals. An algorithm that learns to recognize unauthorized file downloads may inadvertently flag employees who research sensitive topics, or whistleblowers who pass information to journalists.

Regulations such as GDPR and CCPA do not forbid AI security monitoring. They require organizations to have a legitimate reason to access personal information, to put technical safeguards in place, and to be open about what information is captured and why. The challenge is meeting these conditions without sacrificing security effectiveness.

What Personal Data Do AI Security Systems Actually Collect?

AI-based security solutions process several types of personal information, often without the user being aware of it:

Behavioral biometrics: Typing speed, mouse movement, and touchscreen interaction, used to authenticate users and detect session hijacking.

Network metadata: IP addresses, connection records, timestamps, data transfer volumes, and protocols. This does not include message content, but it reveals who communicates with whom and when.

Account logs: Which users accessed which resources, from where, and when. These logs support threat detection, but they also form a detailed record of employee activity.

Communication patterns: Email frequency, recipient lists, and attachment types. Security systems analyze these to detect phishing attempts or data exfiltration, yet in doing so they also map social and professional networks.

Device fingerprints: Hardware identifiers, installed programs, browser profiles, and operating system versions, used to verify that a device is authentic and to identify lost or compromised endpoints.

The scope of collection depends on the deployment. Cloud-based security infrastructure may work with less fine-grained data than on-premises solutions, where an agent has access to all data. Either way, an organization needs a clear data inventory that shows what is collected, where it is stored, how long it is kept, and who can access it.
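As a rough illustration, a data inventory can start as a small structured record per data type. The sketch below is one possible shape, not a standard: the `InventoryEntry` fields, the storage names, and the role names are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InventoryEntry:
    """One row of a data inventory for a security monitoring tool."""
    data_type: str          # what is collected
    storage_location: str   # where it is stored
    retention_days: int     # how long it is kept
    access_roles: tuple     # who can access it


# Hypothetical entries; real values come from your own tooling and policies.
INVENTORY = [
    InventoryEntry("network_metadata", "siem-cluster-eu", 365, ("soc_analyst",)),
    InventoryEntry("behavioral_biometrics", "endpoint-agent-store", 90,
                   ("soc_analyst", "senior_investigator")),
]


def undocumented(collected_types, inventory):
    """Return data types a tool collects that have no inventory entry."""
    documented = {entry.data_type for entry in inventory}
    return sorted(set(collected_types) - documented)
```

Comparing what a tool actually collects against the inventory, as `undocumented` does, is one simple way to notice when monitoring has grown beyond what was documented.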

In my experience, many organizations do not realize how much data their security tools capture. What begins as simple threat monitoring tends to expand into extensive surveillance without an updated privacy assessment.

GDPR and CCPA Compliance for Cybersecurity Monitoring

The EU's GDPR and California's CCPA set baseline requirements for processing personal data, including for security monitoring. Both frameworks acknowledge that organizations have a legitimate interest in safeguarding their networks, but neither authorizes unlimited data collection.

GDPR Requirements

GDPR Article 6 permits security monitoring under legitimate interests (cyberattack prevention, protection of business assets), but organizations must:

- Perform a Data Protection Impact Assessment (DPIA) before deploying high-risk AI systems.

- Practice data minimization: collect only the data needed for the specific security task.

- Be transparent, with privacy notices that explain what data is monitored and why.

- Honor individual access rights so that employees and users can see what information is gathered about them.

Article 22 restricts solely automated decisions that have legal or similarly significant effects. When an AI system revokes an employee's access or blocks a customer account on a purely algorithmic basis, organizations should provide for human review.

CCPA Considerations

California businesses that run AI security systems have obligations around the monitoring of consumer data but fewer around employee monitoring, because the CCPA concentrates more on consumer data than on employee data.

- Disclosure requirements: Privacy policies must clearly state what personal information is collected, even for security purposes.

- Security exceptions to consumer rights: Security monitoring is generally covered by service provision exceptions, but organizations should still state whether users can opt out of particular data collection practices.

- Restrictions on the sale of data: Security data that contains personal information cannot be sold to third parties without the individual's express approval.

Both frameworks treat security monitoring as lawful as long as it is reasonable and proportionate. The main practical compliance challenge is resisting the urge to record every available data point because it might improve your AI detection system's accuracy, and collecting only the minimum data needed instead.

Anonymization and Pseudonymization Techniques

One way to reduce privacy risk is to separate security monitoring from personal identification. Two techniques dominate this space: anonymization and pseudonymization.

Anonymization removes every trace of the individual so that information cannot be linked back to a single person. Anonymized data technically falls outside privacy regulations because it is no longer personal data. In practice, true anonymization is hard to achieve. Network traffic metadata, even with IP addresses stripped out, can often be re-identified through timing analysis or correlation with other datasets.

Pseudonymization replaces direct identifiers (names, email addresses, employee IDs, and so on) with pseudonyms or tokens. The original identifiers are stored separately under tight access control. In normal operations, security teams analyze pseudonymized activity data, and real identities are accessed only during an active incident investigation.

In my experience, pseudonymization works well for routine threat detection but creates friction in incident response. When your AI flags a potential insider threat, the security team must be able to identify the user behind the token quickly, so have clear procedures for reversing the pseudonym under documented conditions.

Organizations should combine pseudonymization with access controls: security analysts see only tokens during routine monitoring; senior investigators can reverse the pseudonymization for a documented investigation; and audit logs record every attempt to de-pseudonymize.
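The combination of tokens, role-gated reversal, and audit logging can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production design: the `Pseudonymizer` class, the `senior_investigator` role name, and the in-memory vault are hypothetical, and a real deployment would keep the key and the identifier mapping in separately secured storage.

```python
import hashlib
import hmac
from datetime import datetime, timezone


class Pseudonymizer:
    """Keyed tokenization with role-gated, audited reversal (illustrative only)."""

    def __init__(self, secret_key: bytes):
        self._key = secret_key
        self._vault = {}      # token -> real identifier; stored separately in practice
        self.audit_log = []   # one entry per reversal attempt, allowed or not

    def tokenize(self, identifier: str) -> str:
        # HMAC-SHA256 gives a stable token that cannot be recomputed without the key.
        digest = hmac.new(self._key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
        token = "user_" + digest[:16]
        self._vault[token] = identifier
        return token

    def reveal(self, token: str, requester: str, role: str, case_id: str) -> str:
        # Assumed policy: only senior investigators with a documented case may reverse.
        allowed = role == "senior_investigator" and bool(case_id)
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "requester": requester,
            "role": role,
            "case_id": case_id,
            "token": token,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError("de-pseudonymization requires a senior investigator and a case ID")
        return self._vault[token]
```

Analysts would work with the output of `tokenize` only. A call such as `reveal(token, "dana", "senior_investigator", "INC-1")` returns the real identifier, and both successful and denied attempts end up in `audit_log`.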

Employee Monitoring Policies and Legal Considerations

AI security systems can easily come to function as employee monitoring tools. Behavioral analytics meant to identify compromised accounts also track employee output, work habits, and communication behavior. This raises separate legal and ethical issues.

Restrictions on workplace monitoring vary by jurisdiction:

European Union: Monitoring systems are to be deployed in consultation with works councils. Employees have strong rights to know what is being tracked and why. Covert surveillance is generally illegal except in specific criminal investigation cases.

United States: Federal law is permissive; employers are generally allowed to monitor business-controlled devices and networks. State laws, however, differ considerably. Connecticut requires employers to give prior notice of electronic monitoring, and Delaware requires similar disclosure.

Australia and Canada: Privacy commissioners in both countries have issued guidance that emphasizes proportionality and transparency in workplace surveillance, along with impact assessments before implementation.

Regardless of jurisdiction, a sound employee monitoring policy should:

- Specify the types of data collected (emails, file access, web browsing, application usage).

- Give the security justification for each type of data.

- Explain under what circumstances monitoring data is accessed, and by whom.

- Define retention periods.

- Describe employees' rights to access their data.

- Outline the procedures for using monitoring data in disciplinary or termination decisions.

Transparency does not eliminate privacy concerns, but it builds trust and reduces legal risk. Employees who know that unusual file downloads trigger review are less likely to be caught off guard when the security team looks into their activity.

Vendor Data Handling and Contractual Obligations

Most organizations deploy third-party AI security systems rather than building them in-house. This introduces vendor risk: security providers can see sensitive monitoring data, which may include personal information about employees and customers.

Data Processing Agreements (DPAs) are legally required under GDPR and are increasingly expected under other regulations as well. A strong DPA with an AI security vendor should cover:

Data processor status: The vendor processes your data on your behalf and under your instructions. It may not use security monitoring data for its own purposes (for example, to train AI models on behalf of other clients).

Subprocessor controls: If the vendor relies on third parties such as cloud infrastructure or other services, you should be notified, and those relationships should be bound by contract.

Location and data transfers: Where will monitoring data be stored and processed? If it crosses borders (particularly between the EU and other jurisdictions), an appropriate transfer mechanism should be documented.

Security controls: What encryption, access controls, and audit logging does the vendor apply to protect the monitoring data it processes?

Data retention and deletion: When the contract ends, how quickly will the vendor delete your security monitoring data? What verification will you receive?

Breach notification: How quickly will the vendor inform you of a breach of its systems? Note that under most privacy laws, if monitoring data is breached, the responsibility to notify affected individuals remains with you.

I have read dozens of security vendor contracts, and the data handling terms tend to be vague and to favor the vendor. Organizations should negotiate specific terms on data segregation, retention, and deletion schedules rather than accepting standard processor contracts.

Data Retention Policies and Disposal Procedures

AI security systems generate large volumes of logs and behavioral data. Without defined retention policies, this data keeps piling up, which increases privacy risk, storage costs, and legal liability.

Privacy laws generally require that personal information be kept only as long as necessary for its intended purpose. For security monitoring, what counts as necessary depends on several factors:

Active threat detection: For real-time monitoring, a rolling window of 30-90 days of behavioral data may be all that is needed.

Incident investigation: When security teams investigate possible breaches, they need historical context, typically 6-12 months of logs, to establish baselines and identify attack patterns.

Regulatory requirements: Industry compliance standards (PCI DSS for payment processing, HIPAA for healthcare) may require specific log retention periods, usually 1-3 years.

Legal holds: During litigation or a regulatory investigation, organizations must preserve the relevant data regardless of their regular retention policies.

A defensible retention policy for AI security monitoring might look like this:

- Live behavioral data: 90 days, then automatically deleted.

- Network traffic logs: 12 months, pseudonymized after 90 days.

- Incident investigation files: 7 years (in line with typical legal requirements).

- Aggregate statistics: indefinite retention.

- Anonymized threat intelligence: indefinite retention.

Automated deletion is critical. Manual purging of security data rarely happens in practice. Have systems delete data automatically once retention limits are reached, and keep audit logs that show the deletion took place.
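A scheduled purge job along these lines could enforce the retention limits and leave an audit trail. This is a sketch under assumptions: the record layout (`id`, `category`, `created_at`, `legal_hold`) and the category names are hypothetical, the 7-year limit is approximated as 7 × 365 days, and the pseudonymize-after-90-days step for network logs is not shown.

```python
from datetime import datetime, timedelta, timezone

# Retention limits from the example policy. Categories not listed here
# (aggregate statistics, anonymized threat intelligence) are kept indefinitely.
RETENTION = {
    "behavioral": timedelta(days=90),
    "network_log": timedelta(days=365),
    "incident_file": timedelta(days=7 * 365),
}


def purge_expired(records, now: datetime, audit_log: list) -> list:
    """Return the records to keep; append one audit entry per deleted record."""
    kept = []
    for record in records:
        limit = RETENTION.get(record["category"])
        expired = limit is not None and now - record["created_at"] > limit
        # A legal hold overrides the regular retention schedule.
        if expired and not record.get("legal_hold", False):
            audit_log.append({
                "record_id": record["id"],
                "category": record["category"],
                "deleted_at": now.isoformat(),
            })
        else:
            kept.append(record)
    return kept
```

Running `purge_expired(records, datetime.now(timezone.utc), audit_log)` on a schedule keeps deletion automatic, and the audit entries give you evidence that the cleanup actually happened.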

Consent and Transparency in AI Security Deployments

Most privacy laws do not require explicit consent for security monitoring; legitimate business interests typically provide a sufficient legal basis. Even so, being open about what is monitored and why reduces legal risk and builds trust.

Good transparency around AI security monitoring includes:

Privacy notices: Clear explanations of the data the AI system collects, analyzes, and retains. They should be available before people start using monitored systems, for example during employee onboarding or customer account setup.

Algorithmic transparency: Organizations do not have to disclose their proprietary AI algorithms, but they should explain what kinds of behavior trigger alerts. If your system flags suspicious file downloads, say so.

Access and correction rights: Users should have a way to find out what security data is held about them and to correct inaccurate data. This matters especially where AI systems produce false positives that influence employment decisions.

Human review guarantees: When AI security systems feed into consequential decisions (suspending accounts, terminating employees, referring cases to law enforcement), require human review before action.

Some organizations go further and establish security monitoring oversight committees, groups with employee representation that regularly review how AI systems are used, audit the outcomes of algorithmic decisions, and confirm that monitoring practices match stated policies.

Privacy-Preserving Threat Detection Techniques

The technical community has developed several methods that support accurate threat detection while minimizing privacy exposure. These are not hypothetical; large organizations are running them in production.

Differential Privacy

Differential privacy adds carefully calibrated mathematical noise to data or to the outputs of AI models, so that no individual's information can significantly affect the result. For security monitoring, this means AI models can learn general behavioral patterns instead of memorizing the specific actions of individual users.

A common algorithm in practice is Differentially Private Stochastic Gradient Descent (DP-SGD), which modifies neural network training by limiting the influence of any individual user's data on the model. The privacy guarantee is expressed as an epsilon (ε) value: the smaller the value, the stronger the privacy guarantee, but the lower the accuracy may be.

Organizations that adopt differential privacy typically accept an accuracy loss of 3-9% relative to non-private baselines in exchange for provable privacy guarantees. In most applications the trade-off is acceptable, particularly where privacy violations carry legal consequences.
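To make the role of ε concrete, here is a minimal sketch of the Laplace mechanism applied to a simple count, for example the number of users flagged for an unusual download. It is a much simpler mechanism than DP-SGD and is shown only to illustrate the privacy-accuracy trade-off; the function names are hypothetical, and the code assumes each user contributes at most one flag, which makes the sensitivity of the count 1.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags, epsilon: float, rng: random.Random) -> float:
    """Return a differentially private count of True values in `flags`.

    Assumes one flag per user, so adding or removing a user changes the
    true count by at most 1 (sensitivity = 1).
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    return sum(flags) + laplace_noise(sensitivity / epsilon, rng)
```

The noise scale is `sensitivity / epsilon`, so a smaller ε means more noise: stronger privacy for each individual and a less accurate count.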

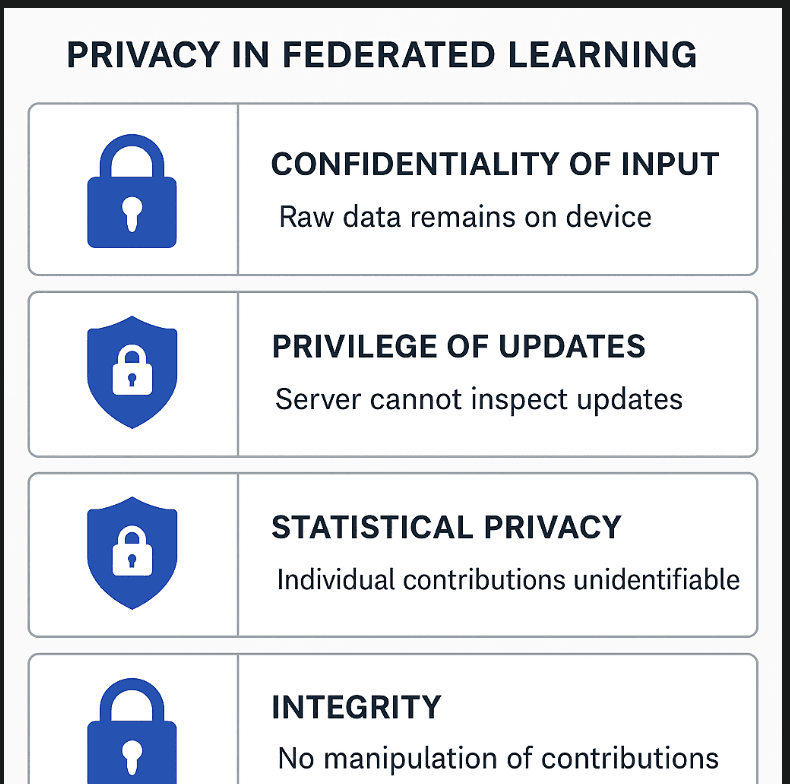

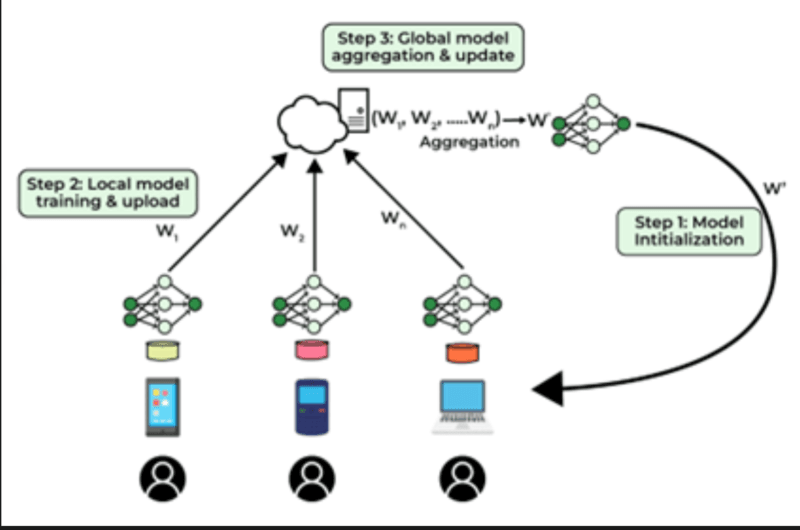

Federated Learning

Federated learning trains AI models on distributed data without centralizing the raw data. Applied to security, this lets threat detection models learn from activity on thousands of endpoints without those endpoints uploading their logs to central servers.

Each endpoint trains a threat detection model locally and sends only encrypted model updates (mathematical representations of what it has learned) to a central aggregator. The aggregator combines these updates into a global model that improves detection across all endpoints, while raw user data never leaves individual devices or organizational boundaries.

This approach is especially useful for sharing threat intelligence across organizations. Competing companies can jointly improve their security models without having to share their incident data with one another.
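The train-locally, aggregate-centrally loop can be sketched with a toy linear model and weighted averaging of the weights (the scheme usually called federated averaging). Everything here is a simplified assumption: the function names are hypothetical, the model is a plain linear predictor, and the encryption of updates in transit is omitted.

```python
def local_update(global_weights, local_data, lr=0.1, epochs=5):
    """Train on one endpoint's private data; only the weights leave the device.

    `local_data` is a list of (features, label) pairs for a linear model.
    """
    w = list(global_weights)
    for _ in range(epochs):
        for features, label in local_data:
            prediction = sum(wi * xi for wi, xi in zip(w, features))
            error = prediction - label
            # Gradient step for squared error on a single example.
            w = [wi - lr * error * xi for wi, xi in zip(w, features)]
    return w, len(local_data)


def federated_average(updates):
    """Combine (weights, sample_count) updates, weighting by sample count."""
    total = sum(count for _, count in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * count for w, count in updates) / total for i in range(dim)]


def run_round(global_weights, endpoint_datasets):
    """One federated round: every endpoint trains locally, the aggregator averages."""
    updates = [local_update(global_weights, data) for data in endpoint_datasets]
    return federated_average(updates)
```

The aggregator only ever sees weight vectors and sample counts. The raw `(features, label)` pairs stay inside `local_update`, which in a real system runs on the endpoint itself.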

Homomorphic Encryption

Homomorphic encryption allows computation on encrypted data without decrypting it. For AI-based security systems, this means threat detection algorithms can analyze encrypted network traffic or user behavior without ever seeing the plaintext.

Real-time applications are limited by the technology's computational cost: it is in the range of 1,000-10,000 times slower than analysis of raw data. However, where privacy takes priority (processing regulated data, analyzing sensitive communications), the performance trade-off can be acceptable.

Recent implementations have reduced the computational overhead considerably, and specialized hardware acceleration is making homomorphic encryption increasingly feasible for production security deployments.
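The core idea, computing on ciphertexts, can be shown with a toy version of the Paillier cryptosystem, which supports addition only. This is a deliberately limited sketch: Paillier is partially homomorphic rather than fully homomorphic, the primes used in the usage note are far too small to be secure, and the function names are hypothetical. It could model, for instance, summing per-endpoint failed-login counts without the aggregator seeing any individual count.

```python
import math
import secrets


def generate_keys(p: int, q: int):
    """Build a Paillier key pair from two primes (toy sizes, NOT secure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to g = n + 1
    return n, (lam, mu)


def encrypt(n: int, message: int) -> int:
    """Encrypt an integer 0 <= message < n under public key n."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    # With g = n + 1, g**message mod n**2 equals 1 + message * n.
    return ((1 + message * n) * pow(r, n, n_sq)) % n_sq


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts yields an encryption of the sum of the plaintexts."""
    return (c1 * c2) % (n * n)


def decrypt(n: int, private_key, ciphertext: int) -> int:
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n * mu) % n
```

For example, with the Mersenne primes `2**31 - 1` and `2**61 - 1` as toy inputs to `generate_keys`, an aggregator can call `add_encrypted` on `encrypt(n, 3)` and `encrypt(n, 4)`, and only the holder of the private key can decrypt the result to 7.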

Balancing Security Effectiveness with Privacy Protection

Organizations face constant pressure to maximize threat detection accuracy, which can be achieved by collecting more data and weakening privacy protections. Several frameworks help navigate this tension:

Risk-based evaluation: Not every system needs the same level of privacy protection. AI surveillance of critical infrastructure (power grids, healthcare systems) warrants broader data collection than monitoring of corporate email does. Match the level of privacy protection to the actual risk.

Privacy budget allocation: Treat privacy as a limited resource. Every additional data collection decision spends some of it. Organizations should consciously spend their privacy budget on the security functions that deliver the greatest value instead of collecting as much information as possible across all systems.

Stakeholder involvement: Legal, privacy, security, HR, and affected users should all take part in planning AI monitoring systems. Technical security teams tend to underestimate privacy concerns, while privacy advocates often underestimate real security threats. Cross-functional design produces better results.

Ongoing review: The privacy-security balance is never settled. As threats change and AI advances, organizations should periodically reassess whether their monitoring practices remain reasonable and necessary.

Related topics such as Generative AI Security Risks also become relevant here, since newer AI can raise privacy concerns that traditional security applications did not.

Incident Response and Breached Personal Data

When AI security systems identify real threats, incident response processes must account for the personal data involved: both the data that triggered the security alert and the data that may have been stolen during the incident.

Privacy laws usually require breach notification when personal data is accessed, disclosed, or lost in a way that may harm individuals. For AI security monitoring, this raises several scenarios:

False positives exposing employee information: If the security team investigates an employee over an AI-flagged action that turns out to be legitimate, was personal information unnecessarily exposed to investigators? Clear investigation procedures should restrict access to personal information during preliminary reviews.

Compromised monitoring systems: If attackers break into your AI security system, they gain access to all the behavioral data and logs it has gathered. In most jurisdictions this is a reportable breach, because the monitoring data contains users' personal information.

Third-party breaches: When a security vendor suffers a data breach, the organization is still expected to notify the affected individuals. Contractual vendor notification timelines are essential for meeting regulatory deadlines (typically 72 hours under GDPR).

Incident response plans for AI security systems should answer:

- How quickly can we determine whether a security incident involved personal data?

- Who is allowed to access monitoring data during an investigation, and how is that access logged?

- What notification templates and communication plans exist for privacy breaches that involve security monitoring data?

- How do we document that we acted proportionately and in line with established procedures?

Building Organizational Privacy Governance Around AI Systems

Mature privacy governance treats AI data monitoring as an ongoing program rather than a compliance checkbox. Several structures help organizations keep protecting privacy as their security systems evolve:

Data Protection Impact Assessments (DPIAs): Mandatory under GDPR for high-risk processing, DPIAs systematically analyze privacy risks before a new AI security mechanism is implemented. They document what personal data is processed, why, what risks it poses, and what safeguards are in place.

Privacy by design: Build privacy protection into the AI security architecture from the start instead of bolting on privacy controls at the end. This includes default settings that limit data collection, automatic deletion mechanisms, and access control policies that limit who can see monitoring data.

Periodic privacy audits: Regular (quarterly or annual) reviews of what data AI security systems actually capture versus what policies authorize. Many organizations find that monitoring has grown beyond what was originally assessed, without new privacy reviews.

Algorithm audits: Systematic testing of whether AI security systems are biased, for example by flagging particular employee groups more often or by producing false positives that disproportionately affect a particular group of users.
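One simple check an algorithm audit might include is comparing false positive rates across groups. The sketch below assumes you have reviewed alert outcomes labeled by group; the tuple layout and function names are hypothetical, and a disparity in rates is a prompt for investigation rather than proof of bias on its own.

```python
from collections import defaultdict


def false_positive_rates(outcomes):
    """Compute the false positive rate per group.

    `outcomes` is an iterable of (group, flagged, was_real_threat) tuples.
    Only benign activity counts toward the rate: flagged benign / all benign.
    """
    benign = defaultdict(int)
    flagged_benign = defaultdict(int)
    for group, flagged, was_real_threat in outcomes:
        if not was_real_threat:
            benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {group: flagged_benign[group] / benign[group] for group in benign}


def disparity_ratio(rates):
    """Ratio of the highest to the lowest group rate (1.0 means parity)."""
    highest = max(rates.values())
    lowest = min(rates.values())
    if highest == 0:
        return 1.0
    return float("inf") if lowest == 0 else highest / lowest
```

A ratio well above 1.0 suggests that one group's legitimate activity is being flagged far more often than another's, which is worth tracing back to the features or baselines the model relies on.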

Security personnel training: Staff who work with AI security monitoring systems must understand privacy regulations, know when their work involves personal data, and know how to escalate privacy issues that emerge during an investigation.

Clear escalation paths: Who has the authority to review alerts raised by AI systems that involve sensitive personal data (health information, privileged communications, protected characteristics, and so on), and on what grounds?

Resources such as AI-Powered Cybersecurity: Complete Guide can help security teams learn how these systems operate and where privacy problems are likely to arise.

What This Means for Organizations Deploying AI Security

Data privacy in AI-based security systems is not a solved problem, but technical innovation and careful regulation are making it more manageable. Organizations that have managed to balance threat detection with privacy protection share several traits:

They document a clear legal basis for monitoring before deploying AI systems, not after regulators start asking questions. They put technical privacy safeguards in place (differential privacy, federated learning, pseudonymization) instead of relying on policy alone. They stay transparent with employees and users about what is monitored and why. They also periodically review whether their AI systems collect only what their security interests actually require.

The tension between security effectiveness and privacy protection is real and will not go away. Organizations that treat privacy as a core design constraint of their security architecture, rather than an afterthought to accommodate later, will end up with more reliable security infrastructure and less regulatory risk, without sacrificing their ability to detect real threats.

The field is still developing quickly. Privacy-preserving methods that were theoretical research a few years ago are available today as production-ready technology.

Regulations are converging on shared principles: necessity, proportionality, transparency, and accountability. Organizations that implement privacy-conscious AI security today position themselves for long-term success as threats and regulatory requirements keep evolving.
