Autonomous AI agents are no longer just fancy chatbots. They make decisions, call APIs, and coordinate workflows without constant human oversight. That is exciting, but it also means we need solid governance in place before things run amok.

I have spent the past several months experimenting with governance frameworks for AI agents, and two keep coming up at the top of every serious discussion: ISO 42001 and the NIST AI Risk Management Framework. Here is what actually matters when you are trying to govern systems that can act on their own.

What Makes Agentic AI Different From Regular AI

Conventional AI systems provide predictions or suggestions. You ask, you receive an answer, and that is it. Agentic AI is a different animal.

These systems can:

- Set their own sub-goals in pursuit of broader objectives

- Choose and use tools and APIs without asking permission every time

- Collaborate with other agents to solve complicated problems

- Adapt their strategy based on what is and is not working

I noticed this difference early on while testing a content research agent. It did not just respond to queries; it searched databases on its own, cross-referenced sources, and compiled reports for me while I worked on other things. Convenient? Absolutely. But also a little unnerving once you realize it is making dozens of decisions you never explicitly approved.

That autonomy creates governance problems that did not exist before. Accountability, risk limits, and oversight become critical when an agent can take action rather than merely offer suggestions.

The Core Governance Challenge

The basic problem is this: how do you keep control of, and stay accountable for, systems that are designed to operate without constant supervision?

You need frameworks that can handle:

- Emergent behaviors that were never explicitly programmed

- Coordination among agents that produces unforeseen results

- Learning that changes system behavior over time

- Rapid decision-making that conventional approval processes cannot keep up with

That is where ISO 42001 and NIST AI RMF come into play. Both were developed before the agentic AI hype, yet they are surprisingly relevant, with a few critical extensions.

ISO 42001: Building an AI Management System

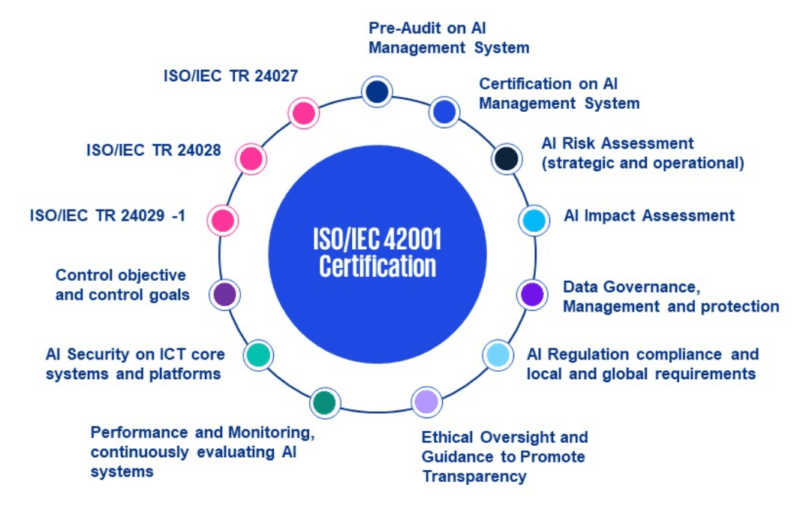

ISO/IEC 42001:2023 is the first international standard for AI management systems (AIMS). Think of it as a cousin of ISO 27001, but for artificial intelligence rather than information security.

The standard tells organizations how to build institutional governance around their AI deployments. It covers policies, roles, risk management processes, documentation requirements, and continual improvement.

Why ISO 42001 Matters for Agentic Systems

Here is what makes ISO 42001 relevant even though it does not explicitly mention autonomous agents:

Scope Definition (Clause 4.3) forces you to determine which AI systems fall under governance. For agentic systems, that means cataloging every agent, its capabilities, the tools available to it, and the limits of its autonomy.

Asset Mapping involves documenting AI components, data flows, and dependencies. When I applied this to a multi-agent workflow, I found relationships between agents that I had not anticipated, such as one agent’s outputs driving another agent’s decisions and forming an unmonitored feedback loop.

Role Assignment specifies who owns which agents, who may authorize new capabilities, and who has override authority. This matters a great deal when an agent does something unexpected and you need to know who is responsible.

Risk Assessment Requirements push you to evaluate potential harms systematically. The standard does not prescribe how to score risks, but it does require a structured and consistent process.

Practical Application Steps

Implementing ISO 42001 for agentic AI starts with an inventory. List every deployed agent, along with its:

- Purpose and use case

- Level of autonomy (read-only, suggests actions, acts with permission, fully autonomous)

- Tools and APIs it can access

- Data sources it relies on

- Potential impact if it malfunctions
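
One way to keep such an inventory honest is to store it as structured records and check them for gaps. The sketch below is only an illustration, not something ISO 42001 prescribes: the `AgentRecord` class, its field names, and the two example agents are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Autonomy(Enum):
    READ_ONLY = "read-only"
    SUGGESTS = "suggests actions"
    ACTS_WITH_PERMISSION = "acts with permission"
    FULLY_AUTONOMOUS = "fully autonomous"


@dataclass
class AgentRecord:
    """One inventory entry (hypothetical schema mirroring the list above)."""
    name: str
    purpose: str
    autonomy: Autonomy
    tools: list[str] = field(default_factory=list)
    data_sources: list[str] = field(default_factory=list)
    failure_impact: str = "unknown"

    def missing_fields(self) -> list[str]:
        """Return the inventory fields that are still empty or unknown."""
        gaps = []
        if not self.purpose.strip():
            gaps.append("purpose")
        if not self.tools:
            gaps.append("tools")
        if not self.data_sources:
            gaps.append("data_sources")
        if self.failure_impact == "unknown":
            gaps.append("failure_impact")
        return gaps


inventory = [
    AgentRecord(
        name="content-research-agent",
        purpose="Gather and summarize sources for articles",
        autonomy=Autonomy.ACTS_WITH_PERMISSION,
        tools=["web_search", "document_store.read"],
        data_sources=["public web", "internal style guide"],
        failure_impact="low: inaccurate summaries reach an editor",
    ),
    # Deliberately incomplete entry to show the gap check.
    AgentRecord(name="ticket-triage-agent", purpose="", autonomy=Autonomy.SUGGESTS),
]

# Map each incomplete agent to the fields that still need filling in.
incomplete = {a.name: a.missing_fields() for a in inventory if a.missing_fields()}
print(incomplete)
```

A check like this can run in CI or before a governance review, so an agent with an undocumented tool list or unknown failure impact gets flagged before it ships.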

Then set clear boundaries. A research agent should not be able to write to production systems. That may sound obvious, but I have seen plenty of so-called helpful agents that were given far too much freedom.

The standard also requires documented procedures for incident response, system changes, and regular reviews. For agents, that means being able to revoke access quickly, roll back changes, or shut an agent down entirely if necessary.

NIST AI Risk Management Framework: The Risk Management Spine

The NIST AI RMF takes a different, complementary approach. Rather than emphasizing management system structure, it focuses on risk management activities throughout the AI lifecycle.

The framework organizes work into four core functions: Govern, Map, Measure, and Manage.

Govern establishes organizational culture, policies, and accountability structures. This is where you decide who makes AI deployment decisions, what the organization’s risk appetite is, and how AI governance relates to overall enterprise risk management.

Map establishes the system’s context, stakeholders, potential impacts, and risks. For each agentic use case, you document what could go wrong and who would be affected.

Measure defines metrics, testing methods, and continuous assessment. How do you know whether an agent is doing what it is supposed to do? What are the indicators of drift or degradation?

Manage covers how you prioritize and respond to identified risks over time. It is never a one-time event; risk management for autonomous systems must be ongoing.

The Generative AI Profile Extension

NIST also published a Generative AI Profile (NIST AI 600-1), which adds specificity for systems built on large language models. This profile applies to most agentic AI today, since most of it is built on LLMs.

It identifies 12 categories of risk associated with generative AI, including:

- Confabulation (hallucinations)

- Dangerous or violent content

- Data privacy leaks

- Intellectual property issues

- Value chain vulnerabilities

- Environmental impacts

Each category comes with risk mappings and recommendations. This profile saved me a huge amount of time: instead of starting from a blank page, you are tailoring an already well-researched baseline.

Applying NIST AI RMF to Autonomous Agents

The Map function is particularly important for multi-agent systems. You need to understand not only what each individual agent does, but also how the agents interact.

When I mapped a three-agent research workflow, I found that the agents were effectively forming a closed feedback loop. Agent A would generate hypotheses, Agent B would test them, and Agent C would generate the next round of hypotheses based on B’s results. Nobody had designed that coordination; it emerged from the way we had set up their objectives.

That is exactly the kind of emergent behavior that makes agentic governance hard. Conventional risk assessment assumes you know how the system will respond. Agents will surprise you.

The Measure function requires establishing observable metrics. For autonomous agents, useful metrics include:

- Override rate (how often humans intervene)

- Escalation rate (how often agents appropriately hand a case to a human for help)

- Deviation from expected behavior patterns

- Resource usage (API calls, compute time, token usage)
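
As a rough illustration of how the first, second, and fourth of these metrics might be computed from run logs, here is a minimal sketch. The `AgentRun` record and its fields are hypothetical, the escalation rate here simply counts escalations without judging whether each one was appropriate, and behavioral deviation is left out because it depends on a baseline you would have to define yourself.

```python
from dataclasses import dataclass


@dataclass
class AgentRun:
    """One completed agent task (hypothetical log record)."""
    overridden: bool   # a human changed or stopped the agent's action
    escalated: bool    # the agent asked a human for help
    api_calls: int
    tokens: int


def governance_metrics(runs: list[AgentRun]) -> dict[str, float]:
    """Summarize override, escalation, and resource usage across runs."""
    if not runs:
        raise ValueError("no runs to summarize")
    n = len(runs)
    return {
        "override_rate": sum(r.overridden for r in runs) / n,
        "escalation_rate": sum(r.escalated for r in runs) / n,
        "avg_api_calls": sum(r.api_calls for r in runs) / n,
        "avg_tokens": sum(r.tokens for r in runs) / n,
    }


runs = [
    AgentRun(overridden=False, escalated=False, api_calls=4, tokens=1200),
    AgentRun(overridden=True, escalated=False, api_calls=9, tokens=3100),
    AgentRun(overridden=False, escalated=True, api_calls=2, tokens=800),
    AgentRun(overridden=False, escalated=False, api_calls=5, tokens=1300),
]

metrics = governance_metrics(runs)
print(metrics)
```

Tracking these numbers per agent over time matters more than any single value: a rising override rate or a sudden jump in API calls is the kind of drift signal the Measure function is meant to surface.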

Agentic AI Security: New Frameworks for New Risks

ISO 42001 and NIST AI RMF provide solid foundations, but they have limitations, and agentic AI introduces specific threats that need additional attention. This is where newer frameworks come in.

MAESTRO: Multi-Agent Threat Modeling

MAESTRO, developed by the Cloud Security Alliance, is designed for multi-agent security analysis. It breaks agentic systems into seven layers, each with its own threats:

- Foundation model layer (adversarial inputs, model poisoning)

- Agent layer (goal misalignment, capability abuse)

- Orchestration layer (coordination failures, resource conflicts)

- Tool/API layer (unauthorized access, privilege escalation)

- Environment layer (sandbox escapes, lateral movement)

- Communication layer (message interception, agent impersonation)

- Ecosystem layer (trojanized components, counterfeit plugins)

The strength of MAESTRO is that it models cross-layer threats explicitly. By compromising the foundation model, an attacker could manipulate agent behavior, which in turn exploits the orchestration logic to gain unauthorized API access. Traditional threat models miss these compound attack chains.

Identity-Centric Governance

Another pattern that keeps resurfacing in agentic AI security is treating agents as principals in an identity system.

Each agent gets:

- A distinct identity with its own credentials

- Scoped, least-privilege permissions

- An audit trail of every action

- Zero-trust verification of every API call

I have used this approach in production deployments, and it solves several problems at once. When an agent misbehaves, the audit logs show immediately what it did. When you need to restrict capabilities, you change the agent’s permissions, not its code. When an agent is compromised, you revoke its identity.

This aligns well with ISO 42001’s access control requirements and with the security and resilience characteristics in the NIST AI RMF.
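
To make the pattern concrete, here is a minimal sketch of a permission check that treats each agent as an identity with scopes and records every decision. It is an illustration under stated assumptions, not a real identity product: `AgentIdentity`, `Gateway`, and the scope strings such as `docs:read` are hypothetical, and a production system would sit on top of your actual identity provider.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentIdentity:
    """An agent as a principal: an ID plus least-privilege scopes (hypothetical)."""
    agent_id: str
    scopes: frozenset[str]
    revoked: bool = False


@dataclass
class Gateway:
    """Checks every call against the agent's scopes and logs the decision."""
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent: AgentIdentity, scope: str) -> bool:
        # Zero-trust style: nothing is assumed from earlier calls.
        allowed = (not agent.revoked) and scope in agent.scopes
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent.agent_id,
            "scope": scope,
            "allowed": allowed,
        })
        return allowed


gateway = Gateway()
researcher = AgentIdentity("research-agent-01", frozenset({"search:read", "docs:read"}))

print(gateway.authorize(researcher, "docs:read"))   # scope granted to this agent
print(gateway.authorize(researcher, "prod:write"))  # scope never granted
researcher.revoked = True                           # simulate a compromised agent
print(gateway.authorize(researcher, "docs:read"))   # denied once the identity is revoked
```

Note that denied requests are logged too. In an investigation, the attempts an agent made outside its scopes are often as informative as the actions it completed.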

Decision Rights and Autonomy Levels

Not every decision needs to be autonomous. An effective governance system sets clear autonomy boundaries:

Level 0: Advisory Only

The agent analyzes and recommends, but takes no action. Humans review and decide.

Level 1: Supervised Action

The agent can act, but only after explicit human approval of each action.

Level 2: Bounded Autonomy

The agent can operate within set boundaries (spending limits, data access scope, approved tools). Actions outside those boundaries require approval.

Level 3: Monitored Autonomy

The agent operates freely within its domain, but every action is logged and monitored. Humans can interrupt or override at any time.

Different use cases call for different levels. An agent gathering publicly available information may operate at Level 3, while an agent with database write access should probably stay at Level 2 or lower.
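
The four levels translate fairly directly into a decision gate in front of an agent’s actions. The sketch below is one possible reading, not a standard: the `AutonomyLevel` enum, the `decide` function, and its return strings are hypothetical names, and logging and human override for Level 3 are assumed to happen elsewhere.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    ADVISORY = 0
    SUPERVISED = 1
    BOUNDED = 2
    MONITORED = 3


def decide(level: AutonomyLevel, within_bounds: bool, human_approved: bool) -> str:
    """Return 'recommend', 'execute', or 'needs_approval' for a proposed action."""
    if level is AutonomyLevel.ADVISORY:
        # Level 0 never acts; it only hands a recommendation to a human.
        return "recommend"
    if level is AutonomyLevel.SUPERVISED:
        # Level 1 requires explicit approval for every action.
        return "execute" if human_approved else "needs_approval"
    if level is AutonomyLevel.BOUNDED:
        # Level 2 acts freely inside its limits; anything else needs sign-off.
        if within_bounds or human_approved:
            return "execute"
        return "needs_approval"
    # Level 3 acts within its domain; monitoring and override are handled
    # outside this function (an assumption of this sketch).
    return "execute"


print(decide(AutonomyLevel.BOUNDED, within_bounds=False, human_approved=False))
```

Keeping the level in configuration rather than in the agent’s code means an agent can be demoted from Level 3 to Level 1 during an incident without a redeploy.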

Compliance-First Security Design for Agentic Systems

For organizations in regulated industries, governance is not optional; it is a compliance requirement. Here is how to take a compliance-first approach to agentic AI.

Start With Regulatory Requirements

Before deploying agents, identify the relevant regulations:

- GDPR for European data subjects

- CCPA for California residents

- HIPAA for healthcare data

- Financial services regulations (SOX, PCI-DSS, etc.)

- Emerging AI-specific laws (EU AI Act, state-level AI laws)

ISO 42001 was designed to integrate with existing compliance frameworks. If you are already ISO 27001 certified, adding ISO 42001 gives you a unified governance framework for both information security and AI operations.

Documentation as a Control Mechanism

Both ISO 42001 and NIST AI RMF emphasize documentation, but it is not mere bureaucracy. Good documentation serves several purposes:

Accountability: When an agent causes problems, documented processes show which controls should have prevented the issue and where the failure occurred.

Transparency: Auditors and regulators need to understand how AI systems make decisions. Documentation provides that window.

Reproducibility: When you need to reproduce or debug agent behavior, detailed documentation makes it possible.

Continuous Improvement: You cannot improve what you have not measured and recorded.

I have also noticed that well-documented teams catch problems earlier. When something goes wrong with an agent, they can quickly identify the configuration change, new data source, or model version that caused it.

Building a Governance Program

Setting up governance for agentic AI takes a cross-functional team. Here’s a realistic structure:

AI Governance Committee with representation from:

- Security and risk management

- Data privacy and legal

- Product and engineering

- Domain experts (clinicians for healthcare AI, compliance officers for financial AI)

Review Process based on risk levels:

- Low-risk agents (internal tools, read-only access): lightweight approval

- Medium-risk agents (customer-facing, limited write access): standard review and risk assessment

- High-risk agents (autonomous decisions, financial impact, safety implications): rigorous review with red-teaming, ethical review, and executive approval
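
A tiering rule like this is easier to apply consistently when it is written down as code. The following is a minimal sketch of the three tiers described above. The function name, parameters, and `write_access` labels are hypothetical, and treating full write access as high risk is my own assumption rather than something either framework specifies.

```python
def review_tier(
    customer_facing: bool,
    write_access: str,
    autonomous_decisions: bool,
    financial_or_safety_impact: bool,
) -> str:
    """Classify an agent as 'low', 'medium', or 'high' risk.

    write_access must be 'none', 'limited', or 'full' (hypothetical labels).
    """
    if write_access not in {"none", "limited", "full"}:
        raise ValueError(f"unknown write_access: {write_access!r}")
    # Assumption: unrestricted write access is treated as high risk.
    if autonomous_decisions or financial_or_safety_impact or write_access == "full":
        return "high"
    if customer_facing or write_access == "limited":
        return "medium"
    return "low"


# Review steps per tier, taken from the list above.
REQUIRED_REVIEW = {
    "low": ["lightweight approval"],
    "medium": ["standard review", "risk assessment"],
    "high": ["rigorous review", "red-teaming", "ethical review", "executive approval"],
}

tier = review_tier(
    customer_facing=True,
    write_access="limited",
    autonomous_decisions=False,
    financial_or_safety_impact=False,
)
print(tier, REQUIRED_REVIEW[tier])
```

The exact thresholds are a policy decision for the governance committee. The point of encoding them is that two reviewers looking at the same agent end up in the same tier.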

Lifecycle Gates aligned with the NIST Manage function:

- Intake and scoping

- Initial risk assessment

- Design review

- Security testing and red-teaming

- Pilot deployment with monitoring

- Production go/no-go decision

- Periodic recertification (quarterly or annually)
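
Gates only help if they cannot be skipped. As a hedged sketch, the ordering can be enforced with a small helper like the one below. The gate identifiers and the `next_gate` function are hypothetical, and periodic recertification is left out because it recurs after launch rather than sitting in the one-time sequence.

```python
from typing import Optional

# One-time gates, in the order listed above (identifiers are hypothetical).
GATES = [
    "intake_and_scoping",
    "initial_risk_assessment",
    "design_review",
    "security_testing",
    "pilot_deployment",
    "production_go_no_go",
]


def next_gate(passed: list[str]) -> Optional[str]:
    """Return the next gate to clear, or None once every gate is passed.

    Raises ValueError if gates were recorded out of order or are unknown.
    """
    if passed != GATES[: len(passed)]:
        raise ValueError(f"gates passed out of order or unknown: {passed}")
    if len(passed) == len(GATES):
        return None
    return GATES[len(passed)]


print(next_gate(["intake_and_scoping", "initial_risk_assessment"]))
```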

Practical Implementation: What Actually Works

Theory is great, but here is what has actually worked in my experience implementing these frameworks.

Start Small and Specific

Do not try to govern all your AI at once. Pick one agentic use case, apply the frameworks, learn what works, and then scale up.

My first implementation covered a single content research agent. We:

- Documented its capabilities and limitations (ISO 42001 asset inventory)

- Identified its risks using the NIST Map function

- Ran a lightweight MAESTRO threat model

- Defined monitoring metrics

- Wrote a one-page agent charter covering its purpose, autonomy level, and escalation procedures

This took about a week. It was not perfect, but it was good enough to learn from.

Build Reusable Templates

You start to see patterns after governing a few agents. Create templates for:

- Risk assessment worksheets

- Agent charters (purpose, scope, autonomy, controls)

- MAESTRO-based threat modeling checklists

- Monitoring dashboards with standard metrics

With these templates, governance for new agents goes much faster and stays consistent.

Invest in Governance Telemetry

Conventional systems log actions. Agentic systems should log intentions, plans, and reasoning as well.

Useful telemetry includes:

- What goal the agent was pursuing

- What plan it devised to get there

- Which tools it chose to use and why

- When it decided to escalate to a human

- When humans overrode its decisions

This governance telemetry supports ISO 42001’s management review requirements as well as the NIST Measure function. It also makes incidents far easier to investigate.
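
In practice this can be as simple as emitting one structured record per agent decision. Here is a minimal sketch using only the Python standard library. The `DecisionRecord` schema and the example values are hypothetical and would need adapting to whatever logging pipeline you already run.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One governance telemetry entry (hypothetical schema)."""
    agent_id: str
    goal: str                       # what the agent was trying to achieve
    plan: list[str]                 # the steps it decided on
    tools_chosen: dict[str, str]    # tool name -> stated reason for using it
    escalated_to_human: bool = False
    human_override: bool = False
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize as a single JSON line suitable for a log stream."""
        return json.dumps(asdict(self), sort_keys=True)


record = DecisionRecord(
    agent_id="content-research-agent",
    goal="Summarize recent coverage of ISO 42001",
    plan=["search sources", "cross-reference claims", "draft summary"],
    tools_chosen={"web_search": "needs current sources"},
    escalated_to_human=True,
)

line = record.to_json()
print(line)
```

Because each record is one JSON line, the override and escalation fields can feed the monitoring metrics directly, and the goal and plan fields give an investigator the agent’s stated intent alongside its actions.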

Plan for Continuous Governance

Agents that learn and adapt need governance that does the same. Static policies go stale quickly.

Set up regular reviews:

- Weekly: Monitor metrics, trends, and incidents

- Monthly: Risk reassessment for high-autonomy agents

- Quarterly: Full governance review and training needs

- Annually: Governance program effectiveness review

What’s Coming Next in Agentic Governance

Autonomous AI governance is changing rapidly. Here’s what to watch.

Standardization Efforts

Within the next one to two years, both ISO and NIST are likely to issue agent-specific guidance. The existing frameworks work, but explicit guidance on multi-agent systems, emergent behavior, and continuous learning would help.

Several standards bodies are already working on agent-specific profiles and extensions.

Automated Governance Tooling

Early platforms are starting to appear that implement NIST AI RMF and ISO 42001 controls programmatically: continuously monitoring agents, automatically enforcing boundaries, and generating compliance evidence.

These tools treat governance as infrastructure, not paperwork. The system does not just document what an agent is supposed to do; it enforces it.

Integration With Broader GRC Programs

As AI becomes more deeply embedded in business processes, AI governance should not be a standalone program but an extension of existing governance, risk, and compliance (GRC) programs.

ISO 42001 already reflects this: it is explicitly designed to align with ISO 27001, ISO 27701, and other management system standards.

Getting Started: Your Next Steps

If you have been tasked with agentic AI governance, here is a practical place to start:

- Inventory your agents. List every autonomous or semi-autonomous AI system, what it does, and its autonomy level.

- Read the frameworks. Skim the NIST AI RMF and an ISO 42001 implementation guide. You do not need to implement everything at once, but understanding the structure will help.

- Run a pilot. Pick one medium-risk agent and apply both frameworks. Record what works and what does not.

- Build your governance stack. Use ISO 42001 for organizational structure, NIST AI RMF for risk management processes, and MAESTRO for multi-agent threat modeling.

- Develop templates and checklists. Turn what you learned in the pilot into reusable assets.

- Establish monitoring. You cannot govern what you cannot see. Build in governance telemetry from the start.

The management system approach of ISO 42001, combined with the risk management focus of NIST AI RMF, makes a solid foundation. Layer on agent-specific security controls and you have a governance program that can genuinely manage autonomous systems.

It is not perfect (this is a very new space, and there are no perfect solutions), but it is good enough to start reasonably safely and learn what your organization actually needs.

I’m a technology writer with a passion for AI and digital marketing. I create engaging and useful content that makes complex technology concepts easier to understand. My writing aims to spark curiosity and encourage participation, and I continue to research innovation and technology. Let’s connect and talk technology!