Last updated on December 20th, 2025 at 03:25 pm

To be frank, that was my first impression of IPUs when I heard about them. I assumed it was just another tech buzzword invented to confuse people. GPUs weren't enough? Now we need IPUs too?

But here's the thing. After researching what AI IPU Cloud is, I realized it is not just hype. It is a real change in how we run machine learning, and it matters to everyone, whether you are a programmer, a startup co-founder, or simply curious about where AI technology is going.


So What Exactly Is an IPU?

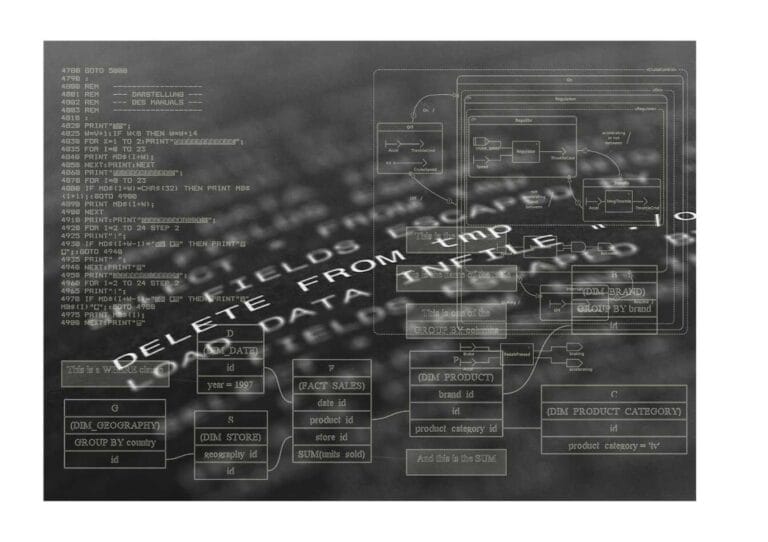

An IPU, or Intelligence Processing Unit, is a processor designed from the ground up for AI workloads. Think of it this way: CPUs are generalists, GPUs were built for graphics and turned out to be good at AI, and IPUs? They are laser-focused on a single problem: machine intelligence.

Here is how they differ. An IPU has more than 1,000 independent cores on a single chip. That lets it handle massively parallel computation more efficiently than traditional GPUs on certain AI tasks: real-time inference, sparse workloads, and the adaptive reasoning that can make or break modern AI applications.

The Cloud Part: Why It Really Matters

Now, throw "cloud" into the mix. Providers such as Graphcore and GCore Labs have built cloud servers around these IPUs, and that is where things get interesting.

You don't have to spend tens of thousands on hardware. You don't have to maintain physical servers. You simply spin up an instance, run your models, and scale up or down as needed. It uses the same pay-as-you-go model you know from AWS or Azure, except it is optimized for AI-intensive tasks.

What I found cool is that these platforms come with ready-made environments that work out of the box. Support for TensorFlow and PyTorch? Check. Development notebooks? Yep. Data pipeline tools? Already there. It's plug-and-play for ML teams.

Why You Should Actually Care

So why should this matter to you?

If you run AI projects, IPUs could cut both training time and costs. Some businesses report up to eight times the performance of their older systems. IPUs are also more power-efficient, which lowers energy bills and shrinks your carbon footprint.

If you just want to experiment or learn, AI IPU Cloud gives you access to advanced compute. Individuals and startups don't need to buy infrastructure to test an idea: you can prototype, fail cheaply, and scale once something works.

If you care about where tech is heading, this is part of a bigger trend: we are moving toward dedicated processors for dedicated work. Just as GPUs transformed gaming and then AI, IPUs are carving out a niche in machine intelligence. Edge deployments, hybrid cloud environments, self-healing AI: all of it builds on this foundation.

The Catch (There's Always One)

Not everything is perfect. The IPU software ecosystem is not as mature as the GPU ecosystem. If you have legacy code written in CUDA, you will have to port it. There is a learning curve, and admittedly, not every AI workload is IPU-friendly.

Costs can also pile up. Pay-as-you-go is nice until you leave a cluster running and get a surprise bill. And if you work in a regulated industry, data compliance across cloud and edge gets complicated quickly.
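To see how fast a forgotten cluster adds up, here is a rough back-of-the-envelope sketch in Python. The hourly rate and cluster size below are made-up placeholders, not real Graphcore or GCore Labs pricing, so swap in your provider's actual numbers.

```python
# Hypothetical numbers for illustration only; real IPU cloud pricing
# varies by provider, region, and instance type.
HOURLY_RATE_USD = 2.50  # assumed price per instance-hour (hypothetical)
INSTANCES = 4           # assumed size of the forgotten cluster (hypothetical)


def idle_cost(hours_idle: float, instances: int = INSTANCES,
              rate: float = HOURLY_RATE_USD) -> float:
    """Cost of instances left running with no work, billed per instance-hour."""
    return hours_idle * instances * rate


# A cluster left on over a weekend: Friday 6 pm to Monday 9 am is 63 hours.
weekend = idle_cost(63)
print(f"Weekend left running: ${weekend:,.2f}")
```

With these placeholder numbers, one forgotten weekend costs $630 for zero work, which is why shutdown reminders or auto-stop policies are worth setting up from day one.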

Getting Started If You're Just Curious

Want to play around with this stuff without spending a nickel? GCore Labs and Graphcore offer free trials. You can also look for free courses, such as AI infrastructure courses on Coursera, and some platforms run free skill challenges on a fairly regular schedule.

The bottom line? AI IPU Cloud is not going to replace GPUs overnight. But it is opening the door to faster experiments, cheaper access, and better efficiency. Whether you are building the next great AI application or just wondering what all the buzz is about, it may be worth knowing what IPUs can offer.

FAQs

What’s the main difference between an IPU and a GPU?

IPUs are built specifically for AI, with more than a thousand independent cores and on-chip memory, which makes them more effective at parallel machine learning jobs. GPUs are more general-purpose and excel at graphics and some AI tasks, but IPUs can handle sparse and irregular workloads more effectively while using less power.

Can I try AI IPU Cloud for free?

Yep. Providers such as Graphcore and GCore Labs often offer free trials so you can test their platforms without commitment. It's an efficient way to find out whether IPUs fit your workflow before paying for compute time.

