Last updated on January 23rd, 2026 at 04:13 pm


Introduction

I still remember the first time I watched a SOC analyst manually triage hundreds of security alerts. It was 2 AM, they were on their third energy drink, and roughly one-third of those alerts were false positives. That is when I understood that cybersecurity had to get smarter.

AI-driven cybersecurity is not just a buzzword. It marks a shift from reactive, rule-based defenses to proactive, adaptive threat detection that learns.

Conventional security systems are reactive: they wait for attack signatures already in their databases to show up. AI systems analyze patterns, flag anomalies, and identify threats that are not yet in any signature database.

This matters because global cybercrime costs are projected to reach $15.63 trillion by 2029, up from $9.3 trillion in 2024, an increase of nearly 70 percent in five years. Organizations cannot keep up with manual procedures and fixed rules. They need adaptive systems that reason and respond in real time.

This guide covers the entire AI cybersecurity landscape. I will walk you through how AI threat detection works and how machine learning identifies threats, how automated incident response works when seconds matter, how AI vulnerability scanning works at scale, how endpoint detection and response works, what security risks generative AI itself poses, and which best practices matter when you actually implement AI.

Whether you are a CISO assessing AI solutions, a security analyst trying to understand how these systems operate, or a tech professional building expertise in this area, you will find something you need here. No corporate fluff, only objective information on how AI cybersecurity works in production settings.

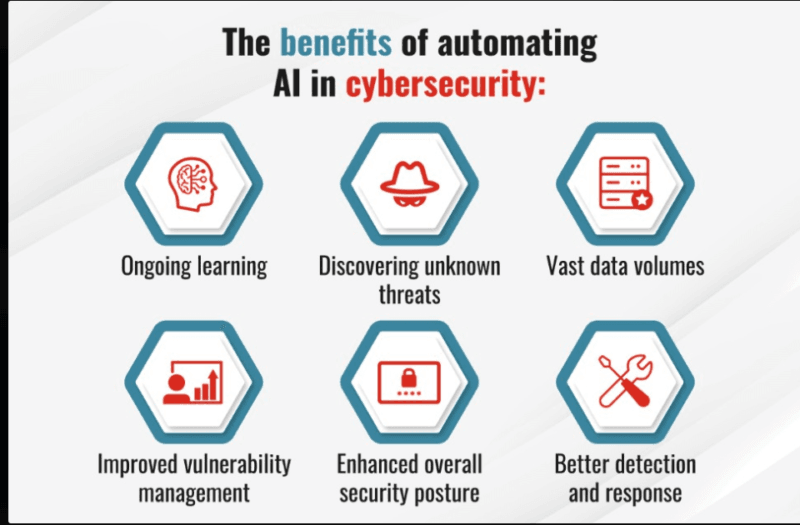

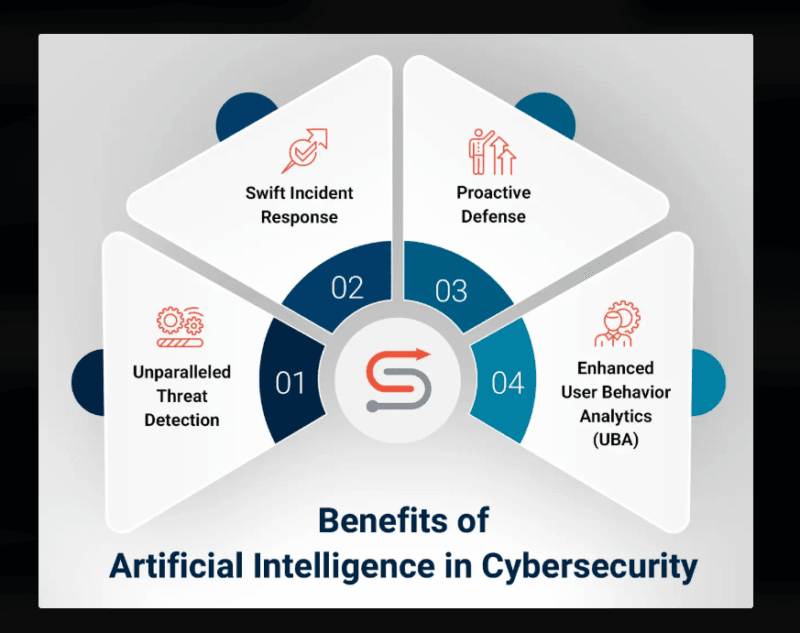

Key Benefits of AI in Cybersecurity

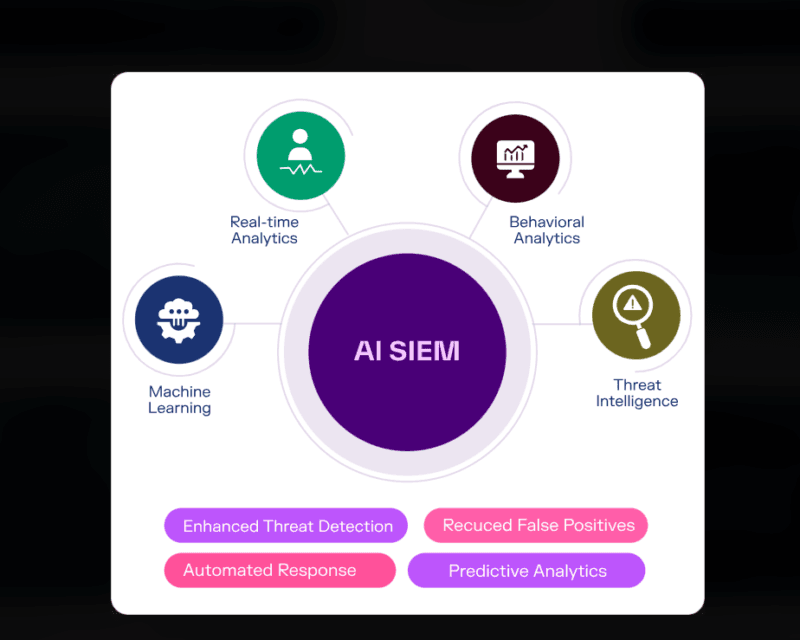

Real-Time Threat Detection That Is Actually Fast

Conventional signature-based detection fails against newer threats. Attackers keep changing tactics to get around existing signatures, and by the time security vendors update their databases, the damage is done.

AI flips this model. Machine learning systems process large datasets on the fly and do not need prior examples to identify suspicious behavior. I have seen organizations reduce their mean time to detect (MTTD) by 30-40% after introducing AI-based threat detection. That is the difference between learning about a breach in hours and learning about it in days.

The technology studies behavioral patterns across your whole environment: unusual login patterns, strange network traffic, suspicious file access, privilege escalation attempts. When something does not conform to the baseline, the system flags it. This matters because in many organizations attackers go undetected for weeks. AI detection can shrink that window dramatically.
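To make the idea of flagging deviations from a baseline concrete, here is a minimal sketch using a plain z-score. It is an illustration only: production systems use far richer models, and the function names, the threshold of 3.0, and the traffic figures are all hypothetical.

```python
from statistics import mean, stdev

def anomaly_score(history, observed):
    """Return how many standard deviations `observed` sits from the baseline."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma

def is_anomalous(history, observed, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    return anomaly_score(history, observed) > threshold

# Hypothetical daily outbound traffic (MB) for a single host.
baseline_mb = [120, 135, 110, 128, 140, 125, 132]

print(is_anomalous(baseline_mb, 131))  # False: an ordinary day
print(is_anomalous(baseline_mb, 900))  # True: a sudden spike
```

A single-feature z-score like this is easy to reason about but noisy on its own, which is why real systems combine many such signals before raising an alert.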

Automated Response When Every Second Counts

Detection alone isn’t enough. I have seen security teams discover breaches quickly and then lose valuable time deciding what to do. AI automates critical response functions the moment a threat appears.

When AI systems identify malicious activity, they can automatically isolate compromised machines, block suspicious network connections, quarantine malware, revoke compromised credentials, and provide context to security teams. This happens in seconds or milliseconds, compared with the minutes or hours a human response takes.

Mean time to respond (MTTR) improves significantly. Organizations report cutting MTTR from hours to minutes. When attackers can lock down whole networks in less than an hour, as ransomware does, automated response is not optional; it is survival.
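If you want to track these metrics yourself, the arithmetic is simple. The sketch below assumes each incident record carries three timestamps, and it treats MTTR as the time from detection to containment; definitions vary between teams, so that choice, the field names, and the sample incidents are all assumptions.

```python
from datetime import datetime
from statistics import mean

def mean_minutes(incidents, start_key, end_key):
    """Average elapsed minutes between two timestamps across incidents."""
    deltas = [
        (incident[end_key] - incident[start_key]).total_seconds() / 60
        for incident in incidents
    ]
    return mean(deltas)

# Hypothetical incident records.
incidents = [
    {
        "occurred": datetime(2026, 1, 5, 2, 0),
        "detected": datetime(2026, 1, 5, 2, 30),
        "contained": datetime(2026, 1, 5, 2, 40),
    },
    {
        "occurred": datetime(2026, 1, 9, 14, 0),
        "detected": datetime(2026, 1, 9, 15, 30),
        "contained": datetime(2026, 1, 9, 15, 50),
    },
]

mttd = mean_minutes(incidents, "occurred", "detected")   # 60.0 minutes
mttr = mean_minutes(incidents, "detected", "contained")  # 15.0 minutes
print(f"MTTD: {mttd:.1f} min, MTTR: {mttr:.1f} min")
```

Measuring both numbers before a pilot gives you the baseline you need to judge whether the AI tooling actually moved them.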

Fewer False Positives: More Signal, Less Noise

Alert fatigue is real. Security analysts receive thousands of alerts a day, and the majority are false positives. I have interviewed analysts who say they began ignoring notifications because the signal-to-noise ratio was so bad.

AI systems learn what normal looks like in your specific environment. Machine learning models are contextual, unlike generic rules that fire on anything vaguely suspicious.

That legitimate DevOps engineer accessing production at 3 AM? The AI knows that is their usual pattern. The compromised credential showing up in an impossible-travel scenario? That gets flagged.
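The impossible-travel check is one of the easier contextual rules to sketch. The version below compares two consecutive logins for the same account and flags the pair if the implied travel speed exceeds what an airliner could manage. The 900 km/h limit, the record layout, and the sample coordinates are assumptions for illustration, not values from any particular product.

```python
from datetime import datetime
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # assumption: roughly airliner cruising speed

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))

def is_impossible_travel(prev, curr, max_kmh=MAX_PLAUSIBLE_KMH):
    """True if getting from `prev` to `curr` would require implausible speed."""
    distance = haversine_km(prev["lat"], prev["lon"], curr["lat"], curr["lon"])
    hours = (curr["time"] - prev["time"]).total_seconds() / 3600
    if hours <= 0:
        # Simultaneous (or out-of-order) logins from different places.
        return distance > 0
    return distance / hours > max_kmh

# Hypothetical logins: New York, then London.
new_york = {"lat": 40.7128, "lon": -74.0060, "time": datetime(2026, 1, 5, 9, 0)}
london_1h = {"lat": 51.5074, "lon": -0.1278, "time": datetime(2026, 1, 5, 10, 0)}
london_8h = {"lat": 51.5074, "lon": -0.1278, "time": datetime(2026, 1, 5, 17, 0)}

print(is_impossible_travel(new_york, london_1h))  # True
print(is_impossible_travel(new_york, london_8h))  # False
```

In practice you would also need to account for VPN exits and imprecise IP geolocation, which is exactly the kind of context a learned model adds on top of a fixed rule like this.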

The reduction in false positives directly improves analyst efficiency. When analysts trust the alerts they get, they investigate faster and more thoroughly. After 3-6 months of model tuning, false positives typically drop to around 5 percent, versus 20-30 percent with traditional tools.

Predictive Vulnerability Management: Getting Ahead of Exploitation

AI does not just respond; it forecasts. Machine learning algorithms process vulnerability data, threat intelligence, exploit availability, and asset criticality to predict which vulnerabilities attackers will target next.

Conventional vulnerability management generates a huge backlog. Your scanner identifies 10,000 vulnerabilities, and your team can remediate perhaps 100 of them this month. Which ones matter most? AI answers that by correlating vulnerability data with active threat campaigns, weaponization timelines, and the specific attributes of your environment.

I have watched organizations move from "patch everything eventually" to "patch what matters now." AI prioritizes the vulnerabilities that are likely to be exploited and that carry business consequences, so teams can invest their time where it stops breaches rather than ticking compliance boxes.

Monitoring That Never Sleeps

Humans need breaks. AI doesn’t. Traditional security operations centers rely on overnight shifts, which bring coverage gaps and hand-off inefficiencies. AI offers truly continuous monitoring with no fatigue, no distraction, and no shift changes.

This 24/7 coverage is particularly important for global organizations, since attackers can strike from anywhere at any time. AI keeps continuous watch across every time zone, analyzing network traffic, user behavior, system logs, and threat intelligence feeds.

This consistency also removes the weekend effect, in which attackers deliberately target organizations during off-hours when security teams are understaffed. AI has the same detection capability at 3 AM on Sunday as it has at 2 PM on Tuesday.

Cost Optimization Through Intelligent Automation

Security talent is expensive and scarce. The cybersecurity skills gap keeps widening, with hundreds of thousands of positions unfilled worldwide. AI helps companies do more with the teams they already have.

Automating tasks such as alert triage, log analysis, threat hunting, and initial incident response frees analysts to concentrate on complex investigations and strategic initiatives. Companies report that security analyst productivity improves by 40-60% after implementing AI automation.

The payback period is typically 18-24 months once you account for reduced MTTD, lower breach costs, lower analyst turnover, and the improved security posture. For most organizations, AI cybersecurity pays for itself through avoided breach costs alone.

Core Components Overview

Threat Detection and Prevention: Finding What Conventional Tools Miss

AI threat detection works on a very different principle from signature-based systems. Instead of comparing activity against known bad patterns, machine learning models detect anomalies and suspicious behavior that indicate threats.

Pattern Recognition at Scale: Machine learning models process millions of data points, such as network packets, user behaviors, file operations, registry changes, and API calls, and find patterns that people could never hope to spot by hand. The models learn the normal baseline behavior of every user, device, and application in your environment.

When deviations occur, the system assigns risk scores based on multiple factors. A single suspicious activity may not raise alarms, but a combination of suspicious behaviors, such as lateral movement attempts, credential dumping, and data exfiltration patterns, can produce a high-confidence threat detection.
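One simple way to get that "one signal is not enough, several together are" behavior is a noisy-OR combination, where each observed signal independently contributes some probability of compromise. This is a sketch of that idea only; the signal names, weights, and alert threshold are hypothetical, and real products use their own scoring models.

```python
# Hypothetical per-signal weights: the chance that each behavior, seen alone,
# indicates a real compromise.
SIGNAL_WEIGHTS = {
    "lateral_movement": 0.5,
    "credential_dumping": 0.6,
    "data_exfiltration": 0.6,
    "off_hours_login": 0.2,
}
ALERT_THRESHOLD = 0.75  # hypothetical

def risk_score(signals):
    """Noisy-OR: 1 minus the probability that every signal is benign."""
    all_benign = 1.0
    for signal in set(signals):  # count each distinct signal once
        all_benign *= 1.0 - SIGNAL_WEIGHTS.get(signal, 0.0)
    return 1.0 - all_benign

def should_alert(signals):
    return risk_score(signals) >= ALERT_THRESHOLD

print(should_alert(["lateral_movement"]))                        # False
print(should_alert(["lateral_movement", "credential_dumping"]))  # True
```

The appeal of this form is that no single weak signal can cross the threshold by itself, while correlated behaviors compound quickly.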

Anomaly Detection for Zero-Day Threats: This is where AI truly shines. Zero-day exploits are entirely new, have no signature, and may manifest in dozens of different forms, which makes them difficult to handle.

Conventional security systems are blind to them. Even when nothing is known about the vulnerability, AI systems can identify zero-days from the anomalous behaviors that indicate an exploitation attempt is under way.

I have seen AI systems identify zero-days from behavioral evidence such as atypical memory access patterns, processes spawning where none should, abnormal network connections, or privilege escalation attempts that are not part of the application's normal behavior.

To learn more about the technical details of how this works, refer to our tutorial on AI Threat Detection Explained: How Machine Learning Recognizes Cybersecurity Threats.

Behavioral Baselining: AI establishes behavioral norms for every entity in your environment. It learns that your database administrator regularly accesses sensitive information during business hours, that your web servers communicate with a select set of external IP addresses, and that your finance team uses certain applications.

Deviations from these baselines trigger investigation. When that DBA account unexpectedly tries to access data at 3 AM, the AI raises an alert. When your web server begins communicating with command-and-control infrastructure, the AI blocks it.

Incident Response: From Detection to Containment in Seconds

Detection without response is merely expensive monitoring. AI automates incident response across the whole process, from alert through containment and remediation.

Automated Triage and Enrichment: AI systems do not just detect threats; they automatically gather context.

In seconds they can pull correlated logs, query threat intelligence feeds, identify affected systems, determine the scope of the attack, and assess business impact.

In traditional SOCs, analysts do this enrichment manually, and it takes 15-30 minutes per alert. AI does it immediately and gives analysts a full picture as soon as they see an alert. The context includes a reconstructed attack timeline, lateral movement paths, compromised credentials, and recommended containment measures.

Automatic Playbook Execution: AI automatically executes response playbooks matched to the type and severity of a threat. For phishing, the system quarantines the emails, blocks the offending domains, warns users, and audits the affected accounts. For ransomware, it isolates compromised devices, blocks malicious IPs, and triggers backup verification.

The automation also includes decision trees that adapt to observed attacker behavior. If the initial containment fails, the AI escalates to more aggressive actions. If the threat spreads, containment automatically expands to additional systems.

Human-in-the-Loop for High-Impact Decisions: Not everything should be fully automated. AI systems keep humans in control of high-impact actions such as blocking critical business applications or isolating executive systems.

The AI makes recommendations with a confidence score and leaves the final decision on sensitive actions to the analysts.
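The gating logic behind human-in-the-loop response can be sketched in a few lines. In this example a recommendation is executed automatically only when it does not touch a high-impact asset and the model's confidence is high; everything else waits for an analyst. The tag names, the 0.9 cutoff, and the `Recommendation` structure are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical asset tags that always require a human decision.
HIGH_IMPACT_TAGS = {"critical_business_app", "executive_system"}
AUTO_EXECUTE_CONFIDENCE = 0.9  # hypothetical cutoff

@dataclass(frozen=True)
class Recommendation:
    action: str             # e.g. "isolate_host"
    target: str             # asset the action applies to
    target_tags: frozenset  # tags describing the asset
    confidence: float       # model confidence in the range 0.0 to 1.0

def route(rec):
    """Decide whether a recommended action runs automatically."""
    if rec.target_tags & HIGH_IMPACT_TAGS:
        return "needs_analyst_approval"  # sensitive asset: always ask
    if rec.confidence >= AUTO_EXECUTE_CONFIDENCE:
        return "auto_execute"
    return "needs_analyst_approval"      # low confidence: ask

workstation = Recommendation("isolate_host", "ws-114", frozenset({"workstation"}), 0.97)
ceo_laptop = Recommendation("isolate_host", "exec-01", frozenset({"executive_system"}), 0.99)

print(route(workstation))  # auto_execute
print(route(ceo_laptop))   # needs_analyst_approval
```

Checking the asset tags before the confidence score is deliberate: a very confident model should still not be able to isolate an executive system without a human signing off.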

For organizations ready to apply these capabilities, our guide on AI-Powered Incident Response: Automating Detection, Triage, and Containment provides detailed instructions on technical configuration and best practices.

Vulnerability Management: Finding Weaknesses Before Attackers Do

Conventional vulnerability scanners generate overwhelming backlogs. AI turns vulnerability management from a compliance exercise into a strategic risk reduction program.

Intelligent Scanning at Scale: AI-driven scanners adjust scanning intensity based on asset criticality, network conditions, and business operations. They intelligently throttle scans so they do not overload production systems while still providing complete coverage.

The scanners correlate results from several tools, removing duplicate reports and presenting each vulnerability once. They also detect missing patches, misconfigurations, weak credentials, and exposed services that traditional scanners miss.
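The de-duplication step is worth illustrating because it is where a lot of backlog inflation comes from. This sketch merges findings from multiple scanners by treating the pair of host and vulnerability ID as the identity of a finding. That key choice, the field names, and the placeholder IDs are assumptions; real tools often need fuzzier matching.

```python
def deduplicate(findings):
    """Merge findings that refer to the same vulnerability on the same host."""
    merged = {}
    for finding in findings:
        key = (finding["host"], finding["vuln_id"])
        entry = merged.setdefault(
            key,
            {"host": finding["host"], "vuln_id": finding["vuln_id"], "sources": set()},
        )
        entry["sources"].add(finding["scanner"])  # remember who reported it
    return list(merged.values())

# Hypothetical raw output from two scanners (IDs are placeholders).
raw_findings = [
    {"host": "web-01", "vuln_id": "VULN-EXAMPLE-1", "scanner": "scanner_a"},
    {"host": "web-01", "vuln_id": "VULN-EXAMPLE-1", "scanner": "scanner_b"},
    {"host": "db-01", "vuln_id": "VULN-EXAMPLE-2", "scanner": "scanner_a"},
]

unique = deduplicate(raw_findings)
print(len(raw_findings), "raw findings ->", len(unique), "unique")
```

Keeping the list of reporting scanners on each merged finding is useful later, since agreement between independent tools is itself a confidence signal.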

Risk-Based Prioritization: This is where AI delivers immense value. Machine learning models evaluate every vulnerability along several dimensions: CVSS score, availability of working exploits, active threat campaigns targeting the vulnerability, asset criticality, compensating controls already in place, and potential business impact.

The result is a prioritized list of the vulnerabilities that pose a practical threat to your particular environment, not simply every CVE with a high CVSS score. Security teams can focus on the 100 vulnerabilities that matter rather than being overwhelmed by 10,000 findings.
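A hand-written scoring function shows why a lower-CVSS finding can outrank a higher one once context is included. This is a deliberately simple stand-in for a trained model: the multipliers, field names, and sample records are all made up for illustration.

```python
def priority(vuln):
    """Contextual priority score; higher means fix sooner."""
    score = vuln["cvss"] / 10.0           # normalize CVSS to the 0-1 range
    if vuln["exploit_available"]:
        score *= 1.5                      # a working exploit exists
    if vuln["active_campaign"]:
        score *= 2.0                      # attackers are using it right now
    score *= vuln["asset_criticality"]    # 0.0 (lab box) to 1.0 (crown jewels)
    if vuln["compensating_control"]:
        score *= 0.5                      # e.g. already shielded by a WAF rule
    return score

def top_n(vulns, n):
    """Return the n highest-priority vulnerabilities."""
    return sorted(vulns, key=priority, reverse=True)[:n]

# Hypothetical findings.
vulns = [
    {"id": "VULN-A", "cvss": 9.8, "exploit_available": False,
     "active_campaign": False, "asset_criticality": 0.3,
     "compensating_control": True},
    {"id": "VULN-B", "cvss": 7.5, "exploit_available": True,
     "active_campaign": True, "asset_criticality": 1.0,
     "compensating_control": False},
]

print([v["id"] for v in top_n(vulns, 1)])  # ['VULN-B']
```

VULN-A has the scarier CVSS score, but it sits on a low-value asset behind a compensating control, so the actively exploited VULN-B on a critical system wins.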

Predictive Vulnerability Analysis: AI draws on historical exploitation data, attacker TTPs, dark web chatter, proof-of-concept availability, and even geopolitical conditions to predict which vulnerabilities are most likely to be exploited.

This predictive capability helps organizations stay ahead of threats. By forecasting when attackers are likely to use an exploit, AI lets teams fix the weakness before it is exploited in the wild. For the technical implementation, see our guide on AI Vulnerability Scanning: Automating Detection and Assessment at Scale.

Endpoint Protection: Behavioral Defense for Modern Threats

Endpoints are still the primary point of attack. AI-powered endpoint detection and response (EDR) offers behavior-based security that does not depend on signatures.

Comprehensive Process Monitoring: AI EDR tracks behavior on endpoints in real time: process execution, file access, registry changes, network activity, and memory operations. Machine learning models detect malicious patterns even when the specific malware is unknown.

This matters because signature-based antivirus fails against polymorphic malware, fileless attacks, and so-called living-off-the-land attacks, in which the attacker relies on legitimate system tools. AI identifies these threats by tracking the behavioral patterns of malicious activity, no matter which executable is involved.

Ransomware Detection and Rollback: AI identifies ransomware from behavioral evidence such as rapid file encryption, deletion of shadow copies, and abnormal file modification volume. Detection takes seconds, before much encryption has taken place.
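Of those behavioral signals, abnormal file modification volume is the simplest to sketch. The class below keeps a sliding window of modification timestamps for one process and reports when the count inside the window exceeds a limit. The window size and limit are hypothetical, and a real EDR agent would combine this with other evidence rather than act on it alone.

```python
from collections import deque

class FileModificationMonitor:
    """Sliding-window counter of file modifications for a single process."""

    def __init__(self, window_seconds=10.0, max_modifications=100):
        self.window_seconds = window_seconds
        self.max_modifications = max_modifications
        self._events = deque()  # timestamps (seconds), oldest first

    def record(self, timestamp):
        """Record one modification; return True if the rate looks abnormal."""
        self._events.append(timestamp)
        # Drop events that have fallen out of the window.
        while timestamp - self._events[0] > self.window_seconds:
            self._events.popleft()
        return len(self._events) > self.max_modifications

# Hypothetical usage: a process that rewrites 300 files in three seconds.
monitor = FileModificationMonitor(window_seconds=10.0, max_modifications=100)
flagged_at = None
for i in range(300):
    if monitor.record(i * 0.01):
        flagged_at = i
        break

print("flagged after", flagged_at, "modifications")
```

Because the check runs on every event, the alert fires as soon as the limit is crossed, which is what allows containment before most files are encrypted.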

Advanced EDR solutions also have automatic rollback functions. When ransomware is identified, the system can automatically restore files to their pre-encryption state, isolate the affected endpoint, and block the ransomware's command-and-control communications.

Memory-Only Threat Detection: Many sophisticated attacks leave no file traces because they operate entirely in memory. AI EDR technologies scan memory to detect malicious code, credential dumping, code injection, and reflective DLL loading, all of which are signs of sophisticated attacks.

These memory-only attacks are invisible to traditional security tools that rely on file scanning and network traffic scanning. AI behavioral analysis catches them through their abnormal memory activity. For a technical in-depth analysis, see our guide on AI Endpoint Detection and Response (EDR) implementation and vendor comparison.

Compliance & Risk: Automated Reporting with GenAI Awareness

AI facilitates compliance, but it also brings new risks that organizations need to manage.

Automated Compliance Reporting: AI systems generate compliance reports for frameworks such as SOC 2, ISO 27001, HIPAA, PCI DSS, and GDPR. They continuously monitor control effectiveness, find compliance gaps, and record remediation efforts.

This automation cuts compliance preparation from weeks to hours. AI gathers evidence from various sources, maps controls to requirements, and produces audit-ready documentation without manual analyst effort.

Data Privacy and Governance: Machine learning models discover sensitive information in your environment, classify it, track access history, and flag possible privacy violations. This is essential for GDPR and CCPA compliance, where organizations need to know what personal data they hold and how they use it.

AI is also used to detect possible data exfiltration by spotting suspicious patterns of data access or transfers of sensitive data to unauthorized systems.

Generative AI Security Risks: Organizations that use generative AI take on new security risks. AI models can leak training data, be manipulated through prompt injection, produce biased output, or be poisoned through corrupted training data.

Security teams should understand these risks and apply the relevant controls. Data poisoning attacks corrupt training data with the intention of undermining model behavior. Adversarial attacks manipulate AI predictions using deceptive inputs. Bias in training data creates blind spots in detection.

Companies need to adopt protection mechanisms such as ensemble learning, adversarial training, continuous model monitoring, and fairness auditing. For a full look at these risks, see our Generative AI Security Risks guide.

Implementation: Turning Theory Into Production Security

Effective AI cybersecurity cannot be achieved by simply buying tools and hoping they work.

Assessment and Planning: Begin by analyzing your current security infrastructure. Determine where AI will provide the greatest value, whether that is reducing alert fatigue in your SOC, speeding up incident response, or prioritizing vulnerabilities.

Set clear, quantifiable goals such as reducing MTTD by 40 percent or keeping false positives below 5 percent. These goals guide tool selection and measure success.

Data Foundation: AI needs high-quality data. Gather security logs from every source: SIEM, firewalls, endpoints, network sensors, cloud platforms, and identity providers. Ensure the data is accurate by eliminating inconsistencies, normalizing formats, and verifying completeness.

Poor data quality produces poor AI results. Fill in your logging gaps and fix blind spots in network visibility before you deploy AI systems.

Tool Selection and Integration: Choose AI solutions built for cybersecurity, not general-purpose machine learning platforms. Key factors include integration with other security tools, transparency of decision-making, the vendor's security track record, and proven scalability.

The tools should tie into your SIEM, EDR, firewall, and ticketing systems. Siloed AI tools that do not share information with your existing security tools create more problems than they solve.

Gradual Implementation: Introduce AI through pilots that focus on particular security problems. Measure against baselines, get buy-in from operations, and tune configurations before enterprise-wide deployment. Pilot success is a good predictor of production success.

Start small: pilot AI threat detection on a single network segment, or automate the response to a single type of threat. Learn what works, adjust processes, and then expand.

Team Development: Invest in training your security team on AI capabilities, tool usage, and new incident response procedures. Build collaboration between data scientists and security analysts to optimize the AI.

Your analysts must understand AI's capabilities and limitations, how to use AI recommendations, and when to override the automation. Without such training, teams will neither trust nor effectively use AI systems.

For detailed implementation guidance, including timelines, resource requirements, and the most common pitfalls, refer to AI Cybersecurity Best Practices: Implementing Effective AI-Powered Defense.

How AI Cybersecurity Works

Understanding the technical workflow helps you evaluate solutions and set realistic expectations. This is how AI cybersecurity systems actually work in production.

Data Collection: Building the Foundation

AI systems ingest information from every available data source. This includes network traffic logs from firewalls and IDS/IPS, system logs from Windows Event Logs and syslog, user activity logs from identity providers and authentication systems, endpoint telemetry from EDR agents, application logs from business-critical applications, and threat intelligence from commercial and open-source feeds.

The volume is enormous: large organizations produce terabytes of security data every day. AI systems normalize this data into common structures, enrich it with context such as user roles and asset criticality, and store it for analysis.
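Normalization and enrichment are easier to picture with a small example. The sketch below maps two differently shaped raw records, one from a firewall and one from an identity provider, onto a single schema and then attaches asset and user context. The raw field names, the common schema, and the lookup tables are all invented for illustration.

```python
from datetime import datetime, timezone

# Hypothetical context tables, normally fed from a CMDB and a directory.
ASSET_CRITICALITY = {"db-prod-01": "high"}
USER_ROLES = {"alice": "dba"}

def normalize_firewall(raw):
    """Firewall records here use epoch seconds and uppercase actions."""
    return {
        "timestamp": datetime.fromtimestamp(raw["epoch"], tz=timezone.utc),
        "source": "firewall",
        "host": raw["dst_host"],
        "user": None,
        "action": raw["action"].lower(),
    }

def normalize_auth(raw):
    """Identity provider records here use ISO 8601 timestamps."""
    return {
        "timestamp": datetime.fromisoformat(raw["time"]),
        "source": "identity",
        "host": raw["machine"],
        "user": raw["username"].lower(),
        "action": raw["event"].lower(),
    }

def enrich(event):
    """Attach asset criticality and user role to a normalized event."""
    enriched = dict(event)
    enriched["asset_criticality"] = ASSET_CRITICALITY.get(event["host"], "unknown")
    enriched["user_role"] = USER_ROLES.get(event["user"], "unknown")
    return enriched

fw_event = enrich(normalize_firewall(
    {"epoch": 0, "dst_host": "db-prod-01", "action": "DENY"}))
auth_event = enrich(normalize_auth(
    {"time": "2026-01-05T02:00:00+00:00", "machine": "laptop-7",
     "username": "Alice", "event": "LOGIN_FAILED"}))

print(fw_event["asset_criticality"], auth_event["user_role"])  # high dba
```

Once every source lands in the same shape, downstream models can correlate a firewall denial and a failed login without caring where each record came from.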

Data quality determines how well the AI performs. If your network sensors have blind spots, or endpoint sensors are not deployed everywhere, the AI will have incomplete information and deliver less accurate results.

Model Training: Teaching AI What “Normal” Looks Like

Machine learning models are trained on historical security data before they are deployed. They learn patterns of typical behavior, characteristics of known attacks, relationships among different security events, and the risk indicators used to predict threats.

Supervised learning is used for well-known malware, phishing, and intrusions, where models are trained on labeled examples of these attacks. Unsupervised learning is used for anomaly detection, where models are trained on unlabeled data.

The training process consists of feature engineering to extract the relevant data properties, selecting an algorithm suited to the security problem, hyperparameter optimization to get the best model, and finally validation on held-out test data to check the model's accuracy. This training isn’t one-time.

Threats keep shifting and organizational environments keep changing, so models must be retrained continuously. A model trained six months ago gradually loses accuracy as attackers change tactics.
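The held-out validation step usually comes down to a handful of metrics. Here is a minimal sketch that computes precision, recall, and false positive rate from true labels and model predictions on a test set; the labels and predictions are made-up sample data, and the function name is my own.

```python
def evaluate(y_true, y_pred):
    """Compute precision, recall, and false positive rate for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # threats caught
    fp = sum(1 for t, p in pairs if not t and p)      # benign flagged
    fn = sum(1 for t, p in pairs if t and not p)      # threats missed
    tn = sum(1 for t, p in pairs if not t and not p)  # benign ignored
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }

# Hypothetical held-out test set: 1 = malicious, 0 = benign.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

metrics = evaluate(y_true, y_pred)
print(metrics)
```

Re-running the same evaluation on fresh labeled data every few months is a straightforward way to notice when a model has drifted and needs retraining.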

Real-Time Analysis: Pattern Recognition at Speed

In production, AI models analyze incoming security data in real time.

They compare current activity against learned baselines, calculate anomaly scores for deviations, correlate events across data sources, and detect attack patterns even when individual events appear innocent.

Processing happens in real time, with the analysis handling thousands or millions of events per second. When suspicious patterns appear, the AI assigns risk scores based on several factors: the severity of the anomaly, the number of correlated suspicious events, the criticality of the assets involved, the likely business impact, and the confidence of the assessment.

High-risk events raise immediate alerts with full context. Medium-risk events can trigger automated investigation workflows. Low-risk anomalies are logged for pattern analysis but do not produce an immediate alert.
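The three-tier routing described above amounts to a pair of thresholds on the risk score. A minimal sketch, assuming scores in the range 0 to 1 and hypothetical cutoffs of 0.8 and 0.5:

```python
def route_event(risk_score, high=0.8, medium=0.5):
    """Map a 0-1 risk score to a handling tier (cutoffs are hypothetical)."""
    if risk_score >= high:
        return "alert"        # immediate alert with full context
    if risk_score >= medium:
        return "investigate"  # kick off automated investigation
    return "log"              # keep for later pattern analysis

for score in (0.92, 0.61, 0.12):
    print(score, "->", route_event(score))
```

Where you put the cutoffs is a risk tolerance decision, not a technical one, so expect to revisit them during tuning.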

Automated Response: From Detection to Containment

Once a threat is confirmed, AI systems carry out response actions based on predefined playbooks and learned behavior.

The response is proportional to the threat level and tailored to your environment. For low-severity threats, the system may simply log the activity and bring it to analysts' attention. For medium-severity threats, automated investigation gathers more context before the alert is sent.

For high-severity threats, containment actions run automatically, such as isolating affected systems, blocking malicious IPs, revoking compromised credentials, and quarantining malicious files.

Human oversight remains important for high-impact decisions. The AI offers recommendations with confidence scores, and people decide on actions that may disrupt business processes.

Learning: Getting Smarter Over Time

AI systems improve continuously through analyst feedback (accepting or rejecting AI recommendations), outcome analysis (determining whether AI decisions stopped or missed threats), and model updates (retraining on new data from recent attacks and false positives).

This learning loop is what distinguishes AI from traditional security tools. Rule-based systems stay fixed until administrators update them. AI systems adapt on their own, becoming more accurate at identifying environment-specific threats and reducing false positives over time.

Accuracy typically improves by 10-20 percent in the first six months as models adapt to the particular environment and analyst feedback refines the detection logic.
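As a toy illustration of the feedback loop, the sketch below nudges an alert threshold based on analyst verdicts: false positives push it up, missed threats pull it down, and confirmed detections leave it alone. Real systems retrain the underlying model instead of moving one number, so treat the verdict labels, step size, and bounds as assumptions.

```python
def update_threshold(threshold, verdicts, step=0.01, lower=0.5, upper=0.99):
    """Adjust an alert threshold from a batch of analyst verdicts."""
    for verdict in verdicts:
        if verdict == "false_positive":
            threshold += step   # too noisy: demand more evidence
        elif verdict == "missed_threat":
            threshold -= step   # too quiet: alert earlier
        # "confirmed" verdicts leave the threshold unchanged
    return min(upper, max(lower, threshold))  # keep within sane bounds

# Hypothetical week of feedback.
week = ["false_positive", "false_positive", "confirmed", "false_positive"]
new_threshold = update_threshold(0.80, week)
print(f"{new_threshold:.2f}")  # 0.83
```

The clamping at the end matters: without bounds, a bad week of feedback could push the threshold so high that the system stops alerting at all.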

Real-World Applications and Case Studies

AI cybersecurity is not theoretical. It runs in production systems that protect critical infrastructure, government systems, and companies worldwide. Here is how organizations use it.

Threat Detection in Government and Military

Government agencies face nation-state attackers with vast resources and advanced methods. AI helps level the playing field because it can identify advanced persistent threats (APTs) that evade conventional security.

Defense agencies use AI to monitor vast networks for insider threats, uncover data exfiltration attempts before classified information is stolen, detect supply chain compromises in software and hardware, and correlate threat intelligence across classification levels.

The volume of data these agencies handle each day (petabytes) makes this impractical by human effort alone. AI is the only approach to threat detection that scales to this level.

Enterprise SOC Operations

Large companies run 24/7 security operations centers that deal with thousands of security alerts per day. AI has transformed SOC operations in several ways: automating tier-1 analyst functions, cutting alert volume by filtering false positives, speeding up tier-2 investigations through automatic enrichment, and freeing tier-3 analysts to specialize in complex threats.

Financial services firms apply AI to spot fraud, signs of insider trading, and account takeover attempts in real time.

Retailers use it to safeguard payment card data and identify point-of-sale breaches. Healthcare organizations use it to secure patient information and catch ransomware before it encrypts systems.

The ROI for enterprise SOCs is clear: AI reduces analyst workload by 40-60 percent, decreases MTTD by 30-40 percent, and, after tuning, brings false positives below 5 percent. These improvements translate directly into cost savings and reduced risk.

Ransomware Prevention for Critical Infrastructure

A ransomware attack on critical infrastructure such as energy, water, or transportation endangers public health in addition to causing economic loss.

AI systems protect these environments through behavioral ransomware detection, automated backup verification, network segmentation enforcement, and rapid containment that limits the spread.

Energy firms rely on AI to identify aberrant control system behavior that could indicate a ransomware attack or sabotage. Water utilities apply it to defend SCADA systems against cyber and physical threats. Transportation agencies use it to protect traffic management and railway control systems.

In these cases, the speed of AI response is critical. Ransomware can encrypt operational technology systems within minutes and may damage physical equipment or disrupt services. AI detection and containment within seconds prevents these outcomes.

Security for Cloud and Hybrid Environments

Cloud environments present security issues that conventional tools struggle with: dynamic infrastructure, short-lived workloads, the complexity of multi-cloud environments, and the shared responsibility model. AI addresses them with continuous visibility into cloud configuration, container and serverless security, cloud workload protection, and cross-cloud correlation.

AI helps organizations catch cloud misconfigurations before they are exploited, detect compromised cloud credentials and abnormal API usage, prevent cloud-specific attacks such as account hijacking, and maintain consistent security across all three major clouds (AWS, Azure, and Google Cloud).

Because cloud infrastructure scales up and down dynamically, security measures must scale dynamically too. AI provides this dynamic security without humans having to update configurations.

Industry-Specific Deployments

Different industries face their own specific threats that call for tailored AI capabilities.

Healthcare organizations contend with ransomware attacks on patient care systems, insider threats (staff inappropriately accessing patient records), the security of medical devices across a wide range of IoT devices, and HIPAA compliance in complex environments.

AI helps protect electronic health records, identify ransomware before it disrupts patient care, and automate compliance documentation.

Financial services firms face advanced fraud, insider trading, payment system security, and compliance with multiple frameworks. AI is used to analyze transactions, detect patterns of market manipulation, and produce compliance reports for the SEC, FINRA, and other regulators.

Manufacturing and critical infrastructure organizations must protect operational technology, maintain supply chain continuity, detect supply chain compromises, and secure the convergence of information technology and operational technology. AI monitors industrial control systems for abnormal behavior that reveals cyber-physical attacks.

Challenges & Considerations

AI cybersecurity offers considerable advantages, but it also comes with challenges. Organizations must understand these limitations and plan for them.

False Positives and Alert Fatigue

AI systems produce false positives too, particularly early in deployment. Newly deployed models have no context about your particular environment and raise alerts on legitimate but unusual activity.

Tuning can take 3-6 months as models learn your environment and analysts provide feedback. False positive rates can be frustratingly high during this period, up to 15-20 percent. Organizations must plan for this tuning period and keep analysts engaged despite the noise.

The problem can be addressed through continuous model refinement, threshold tuning based on organizational risk tolerance, human feedback loops in which analysts confirm or reject AI decisions, and automated enrichment that adds context and removes ambiguity from alerts.

After tuning, most organizations see false positive rates below 5%, but getting there takes time and management.
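The threshold tuning and analyst feedback loop described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: each alert carries a model score between 0 and 1, analysts have labeled past alerts as real threats or not, and "false positive rate" means the share of raised alerts that analysts rejected. The function names and the sample data are hypothetical.

```python
def false_positive_share(labeled_alerts, threshold):
    """Share of raised alerts (score >= threshold) that analysts rejected.

    labeled_alerts: list of (score, is_real_threat) pairs from analyst feedback.
    """
    raised = [is_real for score, is_real in labeled_alerts if score >= threshold]
    if not raised:
        return 0.0  # nothing raised at this threshold
    return raised.count(False) / len(raised)

def pick_threshold(labeled_alerts, target_share, candidates):
    """Return the lowest candidate threshold that meets the target, or None."""
    for threshold in sorted(candidates):
        if false_positive_share(labeled_alerts, threshold) <= target_share:
            return threshold
    return None

# Hypothetical analyst feedback: (model score, confirmed as real threat?)
feedback = [
    (0.95, True), (0.90, True), (0.85, True), (0.80, False),
    (0.75, True), (0.60, False), (0.55, False), (0.40, False),
]
print(pick_threshold(feedback, target_share=0.25, candidates=[0.5, 0.7, 0.9]))
```

Choosing the lowest threshold that meets the target is deliberate: raising the threshold further reduces noise but also hides real threats with lower scores, so the target itself should reflect the organization's risk tolerance.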

Security Skills Gap and AI Expertise

AI cybersecurity is skill-intensive, and many organizations struggle to adopt it. It requires data science to develop and tune models, machine learning knowledge to understand the strengths and weaknesses of the algorithms, and security expertise to apply AI to cybersecurity problems.

The cybersecurity skills gap already leaves hundreds of thousands of positions unfilled worldwide. Hiring becomes even harder when AI expertise is required. Professionals who understand both machine learning and cybersecurity are scarce, so organizations compete to hire them.

Solutions include managed security services in which the vendor supplies the AI expertise, training existing security analysts in AI fundamentals, partnerships between security and data science teams, and vendor-provided AI that requires only limited customization.

This challenge should not be underestimated. I have seen companies buy advanced AI security systems and then fail to use them because they lacked the technical skills to configure, tune, and operate them.

Data Quality Requirements for Model Training

AI is only as good as its training data. Bad data produces bad AI outcomes; it is that simple. Organizations need comprehensive logging across all security tools, logs normalized into consistent formats for analysis, full network visibility without blind spots, and enough historical data to train models.

Many organizations discover that their logging is incomplete, that their network visibility is only partial, or that they do not retain data long enough to train AI. These data quality problems can be costly to fix, and infrastructure investments may be needed before AI implementation is viable.

Data quality is an ongoing challenge. As environments change through new applications, cloud migrations, and organizational changes, data collection has to change with them. Continuous data quality monitoring helps prevent model degradation.
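One basic form of continuous data quality monitoring is checking that every expected log source is still sending data. The sketch below assumes you can obtain the timestamp of the most recent event per source. The source names, the timestamps, and the six-hour silence limit are made-up values for illustration.

```python
from datetime import datetime, timedelta

def find_silent_sources(last_seen, now, max_silence):
    """Return the names of log sources with no events within `max_silence`.

    last_seen: dict mapping source name -> datetime of its latest event.
    """
    return sorted(
        name for name, timestamp in last_seen.items()
        if now - timestamp > max_silence
    )

# Hypothetical inventory of log sources and their most recent events.
now = datetime(2026, 1, 23, 16, 0)
last_seen = {
    "firewall": datetime(2026, 1, 23, 15, 58),
    "edr-agents": datetime(2026, 1, 23, 15, 30),
    "cloud-audit": datetime(2026, 1, 22, 9, 0),  # stopped sending yesterday
}
print(find_silent_sources(last_seen, now, timedelta(hours=6)))
```

A source that silently stops reporting creates exactly the kind of blind spot that degrades a model over time, so a check like this is worth running on a schedule rather than only at deployment.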

Privacy Concerns with AI-Driven Monitoring

AI systems that monitor user behavior raise legitimate privacy concerns. Employees worry about surveillance. Customers worry about data collection. Regulators are concerned with GDPR and CCPA compliance.

Organizations have to balance security needs with privacy rights through privacy-preserving machine learning methods such as federated learning, transparency about what AI monitors and why, data minimization that collects only the data required, and compliance with privacy regulations through appropriate controls. Some newer AI methods can provide security analysis without exposing sensitive data.

Federated learning trains models on distributed datasets without aggregating raw data in one place. Differential privacy techniques help ensure that the behavior of individual users cannot be inferred from AI models.

These privacy-preserving techniques are still maturing, but they are the way forward for AI security in privacy-sensitive environments.
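The core idea of federated learning can be shown with its aggregation step, commonly called federated averaging. In this minimal sketch, each site trains locally and shares only its model weights and sample count, never the raw records, and a coordinator combines them into a sample-weighted average. The function name and the numbers are illustrative assumptions, and a real deployment adds secure transport, many training rounds, and often differential privacy noise on top.

```python
def federated_average(client_updates):
    """Combine locally trained weights into one global model.

    client_updates: list of (weights, n_samples) pairs, where `weights`
    is a list of floats of equal length for every client.
    """
    total_samples = sum(n for _, n in client_updates)
    n_weights = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(n_weights)
    ]

# Two hypothetical sites: the coordinator sees weights, not raw logs.
site_a = ([1.0, 2.0], 100)   # trained on 100 local samples
site_b = ([3.0, 4.0], 300)   # trained on 300 local samples
print(federated_average([site_a, site_b]))
```

Weighting by sample count means a site with more data has proportionally more influence on the shared model.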

Generative AI Security Threats and Mitigation

Generative AI introduces its own security issues for organizations. AI models may leak sensitive information from their training data, be manipulated through prompt injection, produce biased outputs that create liability, or be corrupted through data poisoning.

Adversarial machine learning, in which attackers deliberately craft inputs to deceive AI systems, is a growing threat. Research has shown that malware detection systems, intrusion detection systems, and fraud prevention systems can be fooled by adversarial inputs.

Defenses include ensemble learning, which combines multiple models to make results more resilient; adversarial training, which injects attack examples during training; continuous model monitoring to detect drops in accuracy; and human oversight of high-impact AI decisions.

No single defense offers one hundred percent protection. Organizations need layered defenses that combine several methods, along with ongoing vigilance for new AI security threats.
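Two of these defenses, ensemble learning and human oversight, combine naturally. The sketch below is one simple way to do it, not a standard algorithm from any specific product: several independent detectors vote, the majority decides, and any disagreement or high-impact decision is routed to an analyst. The detector names and the routing rule are hypothetical.

```python
def ensemble_decision(votes, high_impact=False):
    """Combine detector verdicts by majority vote.

    votes: dict mapping detector name -> True if that detector flags the input.
    Returns the majority verdict plus whether a human should review it.
    """
    flagged = sum(1 for verdict in votes.values() if verdict)
    majority_malicious = flagged * 2 > len(votes)
    unanimous = flagged in (0, len(votes))
    return {
        "malicious": majority_malicious,
        # Disagreement may mean one model was fooled, so ask an analyst.
        "needs_human_review": high_impact or not unanimous,
    }

# Hypothetical verdicts from three independent models on one file.
votes = {"static_model": True, "behavior_model": True, "reputation_model": False}
print(ensemble_decision(votes))
```

An adversarial input crafted against one model is less likely to fool all of them at once, which is why disagreement is treated as a signal worth an analyst's attention rather than simply outvoted.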

Conclusion & Next Steps

AI in cybersecurity has moved from experimental technology to core infrastructure. Organizations across industries now use machine learning to detect threats, respond to incidents, manage vulnerabilities, and protect endpoints.

The technology delivers measurable benefits, such as shorter detection times, faster response, fewer false positives, and higher analyst productivity.

But AI isn’t a magic solution. It demands quality data, continuous tuning, skilled operators, and realistic expectations.

AI security adoption succeeds when the organization takes a holistic approach to implementation, invests in development, maintains human oversight, and continuously optimizes based on operational experience.

The threat landscape is constantly changing. Attackers increasingly rely on AI for reconnaissance, social engineering, and evasion. Defensive AI must keep pace and extend to new technologies such as agentic AI, quantum-resistant cryptography, and privacy-preserving machine learning.

Where to Go From Here

For deeper technical insight into individual AI cybersecurity capabilities:

Threat Detection: Understand how machine learning detects threats through behavioral analysis, anomaly detection, and pattern recognition in AI Threat Detection Explained: How Machine Learning Identifies Cybersecurity Threats.

Incident Response: Find out how to automate detection, triage, and containment for faster response in AI-Powered Incident Response: Automating Detection, Triage, and Containment.

Vulnerability Management: Learn about AI-driven scanning and risk prioritization in AI Vulnerability Scanning: Automating Detection and Assessment at Scale.

Endpoint Protection: Explore behavioral EDR and ransomware prevention in AI Endpoint Detection and Response (EDR).

GenAI Security: Keep your AI implementations safe from new threats in Generative AI Security Risks.

Implementation: Learn how to deploy AI effectively in AI Cybersecurity Best Practice: Implementing Effective AI-Powered Defense.

Cybersecurity is not going to get any easier. Attack volume is rising, threat sophistication is increasing, and compliance demands are growing. AI gives organizations a scalable, adaptive defensive capability for keeping pace with threats.

Begin with clear goals, invest in data quality, launch targeted pilots, measure results rigorously, and scale what works. AI cybersecurity delivers results when it is implemented thoughtfully, with realistic expectations and continued optimization.

Organizations that combine AI capabilities with human expertise, uphold strong security principles, and stay ready to adapt to changing threats will build the resilient security operations needed in an increasingly digital world.

Read:

Authentication Methods and Protocols: A Guide for Confused Developers

I’m a technology writer with a passion for AI and digital marketing. I create engaging and useful content that bridges the gap between complex technology concepts and digital technologies. My writing makes the process easy, sparks curiosity, and encourages participation. I continue to research innovation and technology. Let’s connect and talk technology!